When you need to gather information from websites, parsing can help you break a site's complex structure down into its component elements. To parse effectively, it is important to understand the difference between web crawling and web scraping.

Let's start by defining these terms and exploring how web crawling and web scraping work:

Web crawling is an automated process in which a bot (or spider) visits web pages, follows the links it finds, and builds a network of linked pages and data for storage and analysis.
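The crawling loop can be sketched in a few lines of Python. This is a minimal sketch, not a production crawler: it uses only the standard library, visits pages breadth-first, and takes a `fetch` function as a parameter (a hypothetical hook that returns a page's HTML or `None`) so the example runs without network access. A real crawler would also need politeness rules such as robots.txt handling and rate limiting.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag fed to it."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl starting at start_url.

    fetch(url) is a caller-supplied (hypothetical) hook that returns the
    page's HTML as a string, or None if the page cannot be loaded.
    Returns {page_url: [absolute URLs of the links found on that page]}.
    """
    graph = {}
    queue = deque([start_url])
    seen = {start_url}
    while queue and len(graph) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: skip it and keep crawling
        collector = LinkCollector()
        collector.feed(html)
        links = [urljoin(url, href) for href in collector.links]
        graph[url] = links
        for link in links:
            if link not in seen:  # queue each URL only once
                seen.add(link)
                queue.append(link)
    return graph


# Usage with a made-up in-memory "site" instead of real HTTP requests:
site = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/">Home</a>',
    "https://example.com/b": '<a href="https://example.com/a">A</a>',
}
graph = crawl("https://example.com/", site.get)
for page, links in graph.items():
    print(page, "->", links)
```

Injecting `fetch` keeps the traversal logic separate from downloading; in practice you would pass a function that performs the HTTP request.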

Web scraping involves extracting specific information, such as a product's name or price, from a web page.
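To make the idea concrete, here is a minimal scraping sketch that pulls the text of elements with given `id` attributes out of a page. It uses only the standard library's `html.parser` (the tutorial later in this article uses Beautiful Soup instead); the ids `product_name` and `product_amount` mirror the tutorial's selectors, and the sample HTML is made up for illustration.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta", "source", "wbr"}


class TextById(HTMLParser):
    """Collects the text of elements whose id attribute is in `wanted`."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.results = {}
        self._current = None  # id of the target element we are inside, if any
        self._depth = 0       # nesting depth inside that element

    def handle_starttag(self, tag, attrs):
        if self._current is not None:
            if tag not in VOID_TAGS:
                self._depth += 1  # nested tag inside the target element
            return
        element_id = dict(attrs).get("id")
        if element_id in self.wanted and tag not in VOID_TAGS:
            self._current = element_id
            self._depth = 1
            self.results[element_id] = ""

    def handle_endtag(self, tag):
        if self._current is not None and tag not in VOID_TAGS:
            self._depth -= 1
            if self._depth == 0:  # closed the target element itself
                self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.results[self._current] += data


def scrape_fields(html, field_ids):
    """Return {id: stripped text} for each requested id found in html."""
    parser = TextById(field_ids)
    parser.feed(html)
    parser.close()
    return {key: text.strip() for key, text in parser.results.items()}


# Made-up sample page:
page = """<html><body>
<h1 id="product_name"> Desk <b>lamp</b> </h1>
<p>Unrelated text</p>
<span id="product_amount">24.99</span>
</body></html>"""
fields = scrape_fields(page, ["product_name", "product_amount"])
print(fields)  # {'product_name': 'Desk lamp', 'product_amount': '24.99'}
```

Unlike a crawler, the scraper ignores everything except the elements it was asked for, here the unrelated paragraph is skipped.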

Web scraping and web crawling serve similar purposes, since both collect data from the web automatically, but they have distinct characteristics and differ in several key aspects:

Scope: Web crawling systematically browses web pages by following links, covering a large volume of pages, typically to index content for search engines. Web scraping, however, is more targeted: it extracts specific data from particular web pages according to the user's requirements.

Frequency: Crawlers operate continuously to keep search engine indexes updated, regularly visiting websites to discover and update content. Scraping can be a one-time or periodic action based on specific goals.

Interaction with data: Crawlers download and index web page content without always interacting with it, focusing on data discovery and categorization. Scraping, on the other hand, involves extracting specific information, often requiring deeper interaction with the page structure, such as identifying and extracting data from specific HTML elements.

Web scraping is a valuable tool for data extraction, offering both advantages and disadvantages. Here's a breakdown of the main ones:

Advantages:

Disadvantages:

Web crawling, like web scraping, has its own set of advantages and disadvantages. Here's a breakdown of the main ones:

Advantages:

Disadvantages:

Web scraping with Python is a powerful way to gather information from websites. In this article, we'll walk through a step-by-step tutorial on how to set up a parser for web scraping using Python.

To create your own Python parser, follow these steps:

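First, lay out the skeleton of the parser: one function collects product page URLs, another parses those pages, and `main()` ties the two together. The values of `PAGES_COUNT` and `OUT_FILENAME` are placeholders; choose your own.

```python
PAGES_COUNT = 10  # hypothetical value: number of catalog pages to crawl
OUT_FILENAME = 'out.json'  # hypothetical file name for the results

def crawl_products(pages_count):
    urls = []
    return urls

def parse_products(urls):
    data = []
    return data

def main():
    urls = crawl_products(PAGES_COUNT)
    data = parse_products(urls)

if __name__ == '__main__':
    main()
```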

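Next, fill in `crawl_products()` so that it requests each catalog page in turn. The URL template is a placeholder; substitute the catalog address of the site you are parsing:

```python
import requests

def crawl_products(pages_count):
    urls = []
    fmt = "https://site's url/?page={page}"  # placeholder URL with a page number
    for page_n in range(1, 1 + pages_count):
        page_url = fmt.format(page=page_n)
        response = requests.get(page_url)  # download the catalog page
    return urls
```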

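Downloading a page and parsing it with Beautiful Soup is needed in several places, so move it into a helper, `get_soup()`, which returns `None` when the server does not answer with status 200:

```python
import requests
from bs4 import BeautifulSoup

def get_soup(url, **kwargs):
    """Download url and return it parsed with BeautifulSoup, or None on a non-200 response."""
    kwargs.setdefault('timeout', 10)  # arbitrary default so an unresponsive server can't hang the script
    response = requests.get(url, **kwargs)
    if response.status_code == 200:
        soup = BeautifulSoup(response.text, features='html.parser')
    else:
        soup = None
    return soup
```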


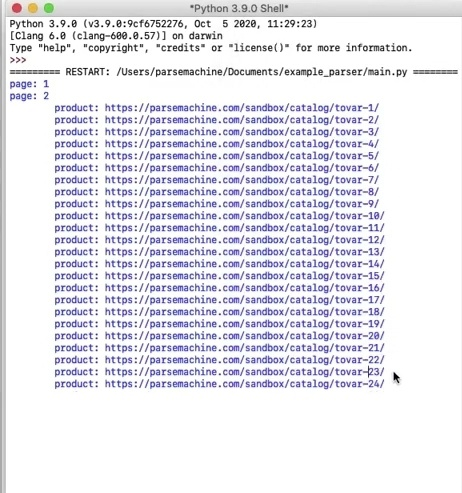

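With the helper in place, `crawl_products()` can parse each catalog page and collect the links to individual products. It prints the page number to show progress and stops at the first page that cannot be loaded:

```python
def crawl_products(pages_count):
    urls = []
    fmt = "https://site's url/?page={page}"  # placeholder URL with a page number
    for page_n in range(1, 1 + pages_count):
        print('page: {}'.format(page_n))
        page_url = fmt.format(page=page_n)
        soup = get_soup(page_url)
        if soup is None:
            break  # no more pages (or the request failed)
        # Each product card's title links to the product page.
        for tag in soup.select('.product-card .title'):
            href = tag.attrs['href']
            url = "https://site's url{}".format(href)  # placeholder base URL + relative link
            urls.append(url)
    return urls
```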

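Then implement `parse_products()`, which opens each product page and extracts the product's name, amount, and table of characteristics:

```python
def parse_products(urls):
    data = []
    for url in urls:
        soup = get_soup(url)
        if soup is None:
            continue  # skip products whose page failed to load
        name = soup.select_one('#product_name').text.strip()
        amount = soup.select_one('#product_amount').text.strip()
        # Characteristics table: first cell is the property, second is its value.
        techs = {}
        for row in soup.select('#characteristics tbody tr'):
            cols = [c.text.strip() for c in row.select('td')]
            if len(cols) >= 2:
                techs[cols[0]] = cols[1]
        item = {
            'name': name,
            'amount': amount,
            'techs': techs,
        }
        data.append(item)
    return data
```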

To follow the parsing process, it also helps to print the URL of the product currently being processed by adding `print('product: {}'.format(url))` at the start of the `for url in urls:` loop in `parse_products()`.

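Finally, at the end of `main()`, save the collected data to a JSON file:

```python
import json

with open(OUT_FILENAME, 'w', encoding='utf-8') as f:  # utf-8, since ensure_ascii=False writes non-ASCII text as-is
    json.dump(data, f, ensure_ascii=False, indent=1)
```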

Python's web scraping capabilities are greatly enhanced by the use of specialized libraries. Whether you're new to scraping or an experienced developer, mastering these libraries is key to effective web scraping. Here's a closer look at three essential libraries: requests, Selenium, and BeautifulSoup.

The requests library is a cornerstone of many web scraping projects. It is an HTTP library for sending requests to websites, and its simple, user-friendly API makes it ideal for downloading the HTML content of web pages. With just a few lines of code, you can send GET or POST requests and process the response data.

Selenium is another important tool for web scraping in Python. It automates a real web browser, so it can load pages whose content is generated by JavaScript and interact with them (clicking buttons, filling in forms, scrolling) before extracting data. It supports the major browsers, including Chrome, Firefox, Edge, and Safari, and is widely used for automated testing of web applications as well as for data extraction and automating repetitive browser tasks.

Beautiful Soup is another essential library for web scraping in Python. It parses HTML and XML documents so you can extract data from them. Using features such as searching for tags, navigating the document tree, and filtering content by common patterns (for example, CSS selectors), you can efficiently extract information from web pages. Beautiful Soup does not download pages itself, so it is usually combined with other Python libraries such as requests, which adds to its flexibility.

For professional parsing, especially for sourcing, dedicated web scraping services can help. The tools below simplify and speed up information collection, whether you are searching for candidates or working on other data analysis tasks.

AutoPagerize is a browser extension that automates the often tedious process of paging through website content: it automatically loads the next page of paginated content and appends it to the current one. Because it recognizes pagination patterns across many websites, you don't need to customize a script for each site's structure, which makes it a versatile solution for the different layouts used by various sites.
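The idea of automatic paging can also be expressed in a script. The following is a minimal sketch, not how AutoPagerize itself works: it assumes each page marks its successor with a `rel="next"` link (many sites do, but not all), and it takes a hypothetical `fetch` function (returning HTML or `None`) so it runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Remembers the href of the first <a> or <link> tag with rel="next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if self.next_href is not None or tag not in ("a", "link"):
            return
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if "next" in rel_values and attrs.get("href"):
            self.next_href = attrs["href"]


def iter_pages(start_url, fetch, max_pages=50):
    """Yield (url, html) for each page, following rel="next" links.

    fetch(url) is a caller-supplied (hypothetical) hook returning HTML or None.
    """
    url = start_url
    visited = set()
    while url is not None and url not in visited and len(visited) < max_pages:
        html = fetch(url)
        if html is None:
            break  # page failed to load: stop paging
        visited.add(url)
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        # Resolve relative links such as "?page=3" against the current URL.
        url = urljoin(url, finder.next_href) if finder.next_href else None


# Usage with a made-up in-memory "site" instead of real HTTP requests:
site = {
    "https://example.com/list": '<a rel="next" href="/list?page=2">Next</a>',
    "https://example.com/list?page=2": '<a rel="next" href="?page=3">Next</a>',
    "https://example.com/list?page=3": "<p>Last page</p>",
}
for page_url, _ in iter_pages("https://example.com/list", site.get):
    print(page_url)
```

The `visited` set and `max_pages` limit guard against sites whose "next" links loop back on themselves.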

Instant Data Scraper is another user-friendly tool designed for easy web scraping. With its intuitive interface, you can navigate the data collection process without complex coding or technical knowledge. The tool's versatility is notable, as it supports different websites and platforms, allowing you to extract information from various sources, from social networks to news sites. Instant Data Scraper also enables the extraction of various data types, including text, images, and links.

PhantomBuster offers a wide range of settings, allowing you to tailor it to your needs. From selecting data sources to defining output structures, you have complete control over the information collection process. PhantomBuster seamlessly integrates with various APIs, providing additional capabilities for data processing. This allows for smooth interoperability with other platforms, making it an excellent tool for web API scraping.

In conclusion, web scraping and web crawling are essential tools for automating information collection. They support business projects, scientific research, and any other area that requires processing and analyzing large amounts of data.
