Scraping images from Yahoo Image Search is a practical way to build image datasets. This guide explains how to scrape Yahoo Image Search results with Python. It uses the Requests library to send HTTP requests and lxml to parse the returned HTML. It also covers using proxies to reduce the chance of being caught by Yahoo's bot detection systems.

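To scrape images from Yahoo Images, you will need the following Python libraries:

- requests: to send HTTP requests to Yahoo and download the images
- lxml: to parse the HTML of the search results page
- os (standard library): to create the output directory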

Ensure you have all the necessary libraries installed. Install them using pip:

```bash
pip install requests
pip install lxml
```

Or use a single command:

```bash
pip install requests lxml
```

First, we need to import the necessary libraries for our scraper.

```python
import os

import requests
from lxml.html import fromstring
```

Next, we'll perform a search on Yahoo Images.

Here we define the search query "puppies" and send a GET request to Yahoo Images search with the necessary headers. These headers mimic the ones a real browser sends, which helps get past some basic bot detection checks.

```python
# Define headers to mimic a browser request
headers = {
    "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "accept-language": "en-IN,en;q=0.9",
    "cache-control": "max-age=0",
    "dnt": "1",
    "priority": "u=0, i",
    "sec-ch-ua": '"Google Chrome";v="125", "Chromium";v="125", "Not.A/Brand";v="24"',
    "sec-ch-ua-mobile": "?0",
    "sec-ch-ua-platform": '"Linux"',
    "sec-fetch-dest": "document",
    "sec-fetch-mode": "navigate",
    "sec-fetch-site": "none",
    "sec-fetch-user": "?1",
    "upgrade-insecure-requests": "1",
    "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36",
}

# Define the search query
search_query = "puppies"

# Make a GET request to the Yahoo Images search page
response = requests.get(
    url=f"https://images.search.yahoo.com/search/images?p={search_query}",
    headers=headers,
)
```
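The request interpolates search_query directly into the URL. That works for a single word like "puppies", but multi-word queries or queries containing characters such as "&" need to be percent-encoded. Here is a minimal sketch using only the standard library. The names build_search_url and SEARCH_ENDPOINT are hypothetical and are not part of the original script.

```python
from urllib.parse import urlencode

# Yahoo Images search endpoint used throughout this guide
SEARCH_ENDPOINT = "https://images.search.yahoo.com/search/images"


def build_search_url(query):
    """Return the search URL with `query` safely encoded as the `p` parameter."""
    # urlencode percent-encodes reserved characters and turns spaces into "+"
    return f"{SEARCH_ENDPOINT}?{urlencode({'p': query})}"


print(build_search_url("golden retriever puppies"))
```

You can get the same encoding without building the string by hand. Pass `params={"p": search_query}` to `requests.get` and let Requests do it.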

After receiving the response from Yahoo, we need to parse the HTML to extract image URLs. We use lxml for this purpose.

# Parse the HTML response

parser = fromstring(response.text)

# Extract image URLs using XPath

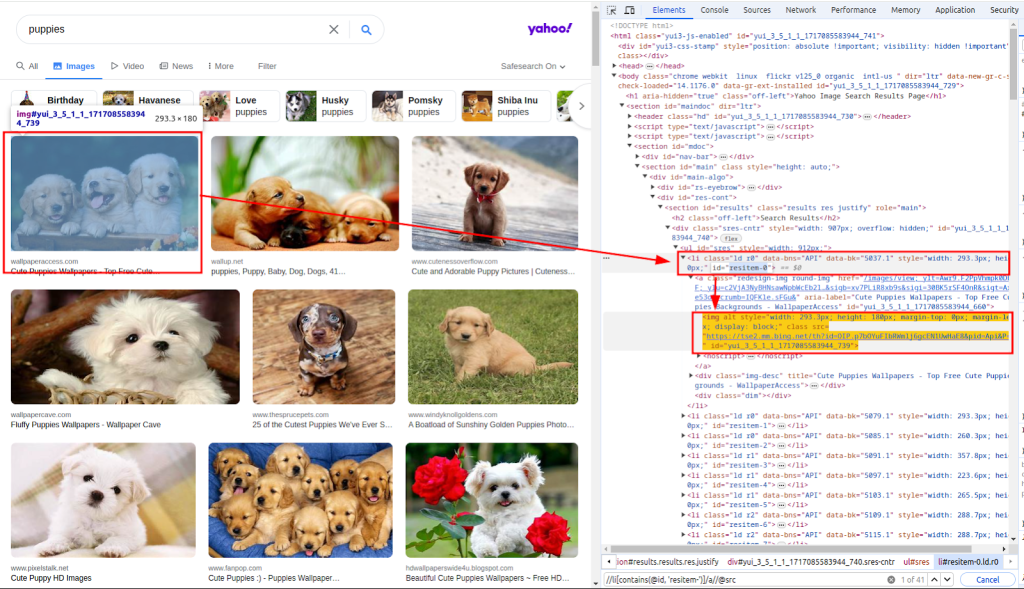

images_urls = parser.xpath("//li[contains(@id, 'resitem-')]/a//@src")Function fromstring is used to parse the HTML response text. Xpath is used to extract URLs of images. The below screenshot shows how the xpath was obtained.
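To see what that XPath actually selects, the sketch below reproduces the same rule with Python's standard-library html.parser instead of lxml. This is a swapped-in technique, used here only so the example runs without extra dependencies. The sample HTML is invented for illustration and does not reflect Yahoo's real markup. ResultImageParser and extract_result_image_srcs are hypothetical names.

```python
from html.parser import HTMLParser

# Elements that never get a closing tag, so they are not pushed onto the stack
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ResultImageParser(HTMLParser):
    """Collect src values matching //li[contains(@id, 'resitem-')]/a//@src."""

    def __init__(self):
        super().__init__()
        self.stack = []  # currently open elements as (tag, attrs) pairs
        self.urls = []

    @staticmethod
    def _under_result_link(path):
        # True if some <a> in the path is a direct child of an <li id="...resitem-...">
        for i in range(1, len(path)):
            tag, _ = path[i]
            parent_tag, parent_attrs = path[i - 1]
            if (tag == "a" and parent_tag == "li"
                    and "resitem-" in (parent_attrs.get("id") or "")):
                return True
        return False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        path = self.stack + [(tag, attrs)]
        if attrs.get("src") and self._under_result_link(path):
            self.urls.append(attrs["src"])
        if tag not in VOID_TAGS:
            self.stack.append((tag, attrs))

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag (tolerates sloppy HTML)
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break


def extract_result_image_srcs(html_text):
    parser = ResultImageParser()
    parser.feed(html_text)
    parser.close()
    return parser.urls


SAMPLE = """
<ul>
  <li id="resitem-0"><a href="/r0"><img src="https://img.example/0.jpg"></a></li>
  <li id="resitem-1"><a href="/r1"><span><img src="https://img.example/1.jpg"/></span></a></li>
  <li id="sponsored"><a href="/ad"><img src="https://img.example/ad.jpg"></a></li>
  <li id="resitem-2"><div><a href="/r2"><img src="https://img.example/2.jpg"></a></div></li>
</ul>
"""

print(extract_result_image_srcs(SAMPLE))
```

Only the first two URLs are returned. The third item's id lacks "resitem-". In the last item, the `<a>` is nested inside a `<div>`, so it is not a direct child of the `<li>`.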

With the list of image URLs, we now need to download each image.
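Before downloading, it can help to tidy the extracted list. The sketch below is a hypothetical helper, clean_image_urls, built on assumptions. It does three things:

- It resolves relative or protocol-relative src values (such as "//host/path") against the search page URL.
- It skips inline data: URIs, which some pages use as lazy-loading placeholders.
- It drops duplicates while preserving order.

This guide does not establish whether Yahoo's results actually contain such values.

```python
from urllib.parse import urljoin

# Assumed base for resolving relative src values: the search results page
BASE_URL = "https://images.search.yahoo.com/search/images"


def clean_image_urls(urls, base_url=BASE_URL):
    """Resolve relative URLs, drop data: URIs and blanks, and de-duplicate in order."""
    seen = set()
    cleaned = []
    for url in urls:
        url = url.strip()
        if not url or url.startswith("data:"):
            continue  # nothing downloadable here
        absolute = urljoin(base_url, url)  # "//host/x.jpg" -> "https://host/x.jpg"
        if absolute not in seen:
            seen.add(absolute)
            cleaned.append(absolute)
    return cleaned
```

Usage would be a single line after the XPath step: `images_urls = clean_image_urls(images_urls)`.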

Here we loop through the images_urls list with enumerate(), which yields both the index (count) and the image address (url). Each image is then downloaded by sending a GET request to its URL.

```python
# Download each image
for count, url in enumerate(images_urls):
    response = requests.get(url=url, headers=headers)
```

Finally, we save the downloaded images to the local filesystem. We define a function, download_file, that handles saving each file.

This function takes the count (to create unique filenames) and the response (containing the image data). It determines the file extension from the Content-Type header and saves the file in the ./images/ directory.
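The split in download_file assumes a plain header such as "image/jpeg". Real Content-Type values can vary in several ways:

- They can carry parameters, as in "image/png; charset=binary".
- They can use suffixes, as in "image/svg+xml".
- They can be missing entirely.
- They can belong to an error page, as in "text/html".

Here is a standalone sketch of a more defensive mapping. The function extension_from_content_type and its "jpeg" fallback are hypothetical choices, not part of the original script.

```python
def extension_from_content_type(content_type, default="jpeg"):
    """Map a Content-Type header value to a file extension.

    Returns `default` when the header is missing or empty, and None for
    non-image types so the caller can skip saving (e.g. an HTML error page).
    """
    if not content_type:
        return default
    # Drop parameters such as "; charset=..." and normalise case
    mime = content_type.split(";", 1)[0].strip().lower()
    main_type, _, subtype = mime.partition("/")
    if main_type != "image" or not subtype:
        return None
    # "svg+xml" -> "svg"
    return subtype.split("+", 1)[0]
```

Returning None for non-image types lets the calling loop skip a response, for example with `if extension is None: continue`.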

```python
def download_file(count, response):
    # Get the file extension from the Content-Type header, ignoring parameters
    # such as "; charset=...". Falls back to "jpeg" if the header is missing
    # (an assumption; adjust as needed).
    content_type = response.headers.get("Content-Type") or "image/jpeg"
    extension = content_type.split(";")[0].split("/")[-1].strip()
    filename = f"./images/{count}.{extension}"

    # Create the directory if it doesn't exist
    os.makedirs(os.path.dirname(filename), exist_ok=True)

    # Write the response content to the file
    with open(filename, "wb") as f:
        f.write(response.content)
```

By calling this function within the loop, we save each downloaded image:

```python
for count, url in enumerate(images_urls):
    response = requests.get(url=url, headers=headers)
    # Skip failed downloads so error pages are not saved as images
    if response.ok:
        download_file(count, response)
```

While scraping data from Yahoo, it's important to be aware of the bot detection mechanisms Yahoo uses to identify and block automated clients.

To avoid being blocked by Yahoo's bot detection, especially when sending many requests from the same IP address, we route our requests through proxies that mask our IP address.

By routing requests through different proxies, we can distribute our scraping activity across multiple IP addresses, which reduces the probability of being detected.
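A single proxies dictionary sends every request through one endpoint. One simple way to actually spread requests across several IP addresses is round-robin rotation. Here is a minimal sketch, assuming you have several proxy endpoints from a provider. ProxyRotator is a hypothetical helper, and the HOST_A/HOST_B/HOST_C values are placeholders.

```python
from itertools import cycle

# Placeholder endpoints: substitute real USER:PASS@HOST:PORT values from your provider
PROXY_URLS = [
    "http://USER:PASS@HOST_A:PORT",
    "http://USER:PASS@HOST_B:PORT",
    "http://USER:PASS@HOST_C:PORT",
]


class ProxyRotator:
    """Hand out requests-style proxies dicts, cycling through proxy URLs in order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._pool = cycle(proxy_urls)

    def next_proxies(self):
        proxy = next(self._pool)
        # Route both HTTP and HTTPS traffic through the same proxy
        return {"http": proxy, "https": proxy}


rotator = ProxyRotator(PROXY_URLS)
# Inside the download loop, each request would use the next proxy in turn:
# response = requests.get(url, headers=headers, proxies=rotator.next_proxies())
```

Round-robin is only one policy. Picking a proxy at random, or dropping proxies that repeatedly fail, are common alternatives.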

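```python
proxies = {
    "http": "http://USER:PASS@HOST:PORT",
    "https": "http://USER:PASS@HOST:PORT",
}

# verify=False disables TLS certificate verification (requests will emit an
# InsecureRequestWarning); keep it only if your proxy provider requires it
response = requests.get(url, headers=headers, proxies=proxies, verify=False)
```

Here is the complete script for scraping images from Yahoo Images search results using proxies: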

```python
import os

import requests
from lxml.html import fromstring


def download_file(count, response):
    """
    Saves the content of a response to a file in the ./images/ directory.

    Args:
        count (int): A unique identifier for the file.
        response (requests.Response): The HTTP response containing the file content.
    """
    # Get the file extension from the Content-Type header, ignoring parameters
    # such as "; charset=..."; fall back to "jpeg" if the header is missing
    content_type = response.headers.get("Content-Type") or "image/jpeg"
    extension = content_type.split(";")[0].split("/")[-1].strip()
    filename = f"./images/{count}.{extension}"

    # Create the directory if it doesn't exist
    os.makedirs(os.path.dirname(filename), exist_ok=True)

    # Write the response content to the file
    with open(filename, "wb") as f:
        f.write(response.content)


def main():
    """
    Main function to search for images and download them.

    This function performs the following steps:
    1. Sets up the request headers.
    2. Searches for images of puppies on Yahoo.
    3. Parses the HTML response to extract image URLs.
    4. Downloads each image and saves it to the ./images/ directory.
    """
    # Define headers to mimic a browser request
    headers = {
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
        "accept-language": "en-IN,en;q=0.9",
        "cache-control": "max-age=0",
        "dnt": "1",
        "priority": "u=0, i",
        "sec-ch-ua": '"Google Chrome";v="125", "Chromium";v="125", "Not.A/Brand";v="24"',
        "sec-ch-ua-mobile": "?0",
        "sec-ch-ua-platform": '"Linux"',
        "sec-fetch-dest": "document",
        "sec-fetch-mode": "navigate",
        "sec-fetch-site": "none",
        "sec-fetch-user": "?1",
        "upgrade-insecure-requests": "1",
        "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36",
    }

    # Define proxies to bypass rate limiting
    proxies = {"http": "http://USER:PASS@HOST:PORT", "https": "http://USER:PASS@HOST:PORT"}

    # Define the search query
    search_query = "puppies"

    # Make a GET request to the Yahoo Images search page.
    # verify=False disables TLS certificate verification; keep it only if your proxy requires it.
    response = requests.get(
        url=f"https://images.search.yahoo.com/search/images?p={search_query}",
        headers=headers,
        proxies=proxies,
        verify=False,
    )

    # Parse the HTML response
    parser = fromstring(response.text)

    # Extract image URLs using XPath
    images_urls = parser.xpath("//li[contains(@id, 'resitem-')]/a//@src")

    # Download each image and save it to a file, skipping failed downloads
    for count, url in enumerate(images_urls):
        response = requests.get(url=url, headers=headers, proxies=proxies, verify=False)
        if response.ok:
            download_file(count, response)


if __name__ == "__main__":
    main()
```

Scraping images from Yahoo Image Search with Python is a powerful way to automate data collection and analysis tasks. With the Requests library handling HTTP requests and lxml handling HTML parsing, you can efficiently extract image URLs and download the images. Routing requests through proxies reduces the risk of detection and IP bans during extensive scraping.
