Scraping Telegram channels means automatically collecting information from public groups or private communities. With Python and the Telethon library, you can retrieve messages, metadata, media files, group members, and much more.

Telegram scraping serves many purposes, including marketing research, content analysis, and tracking discussion threads in a community.

What follows is a detailed guide to scraping Telegram data with Python. Before running any scraping scripts, prepare your environment: Python libraries, Telegram credentials, and proxy access. At this stage, you can buy proxy solutions that match the scale and stability requirements of your scraping task.

So, what is the first step in Telegram scraping? You need to set up your Python environment with the relevant libraries. The main tool for working with the Telegram API is Telethon, an asynchronous library for interacting with the platform.

Install it with the following command:

    pip install telethon

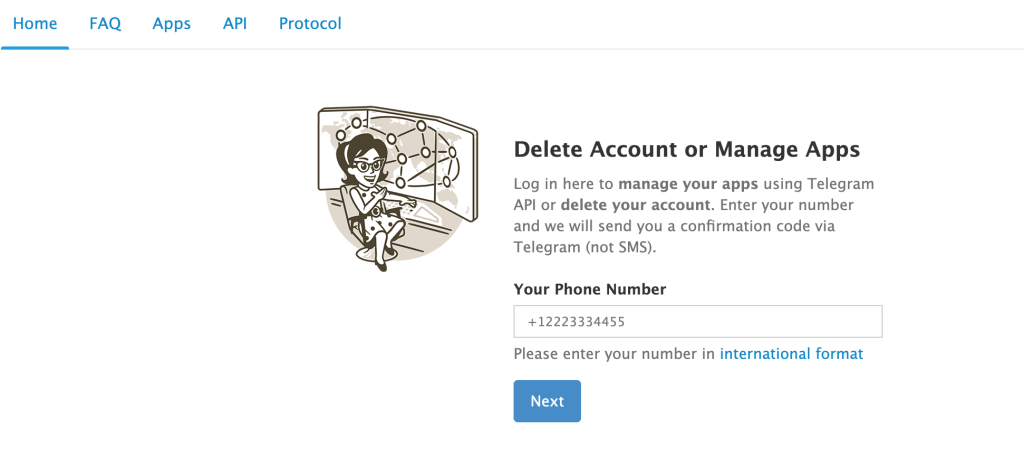

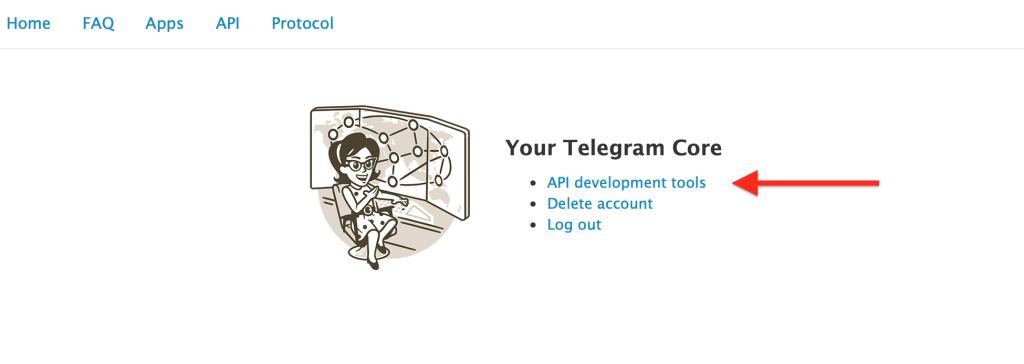

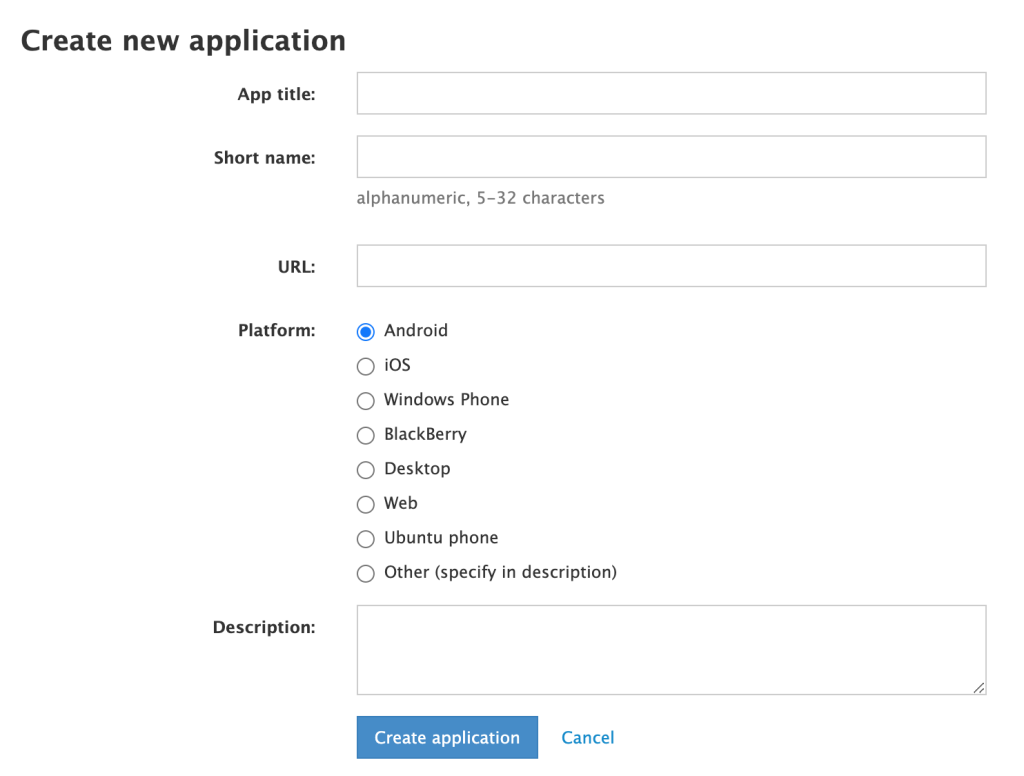

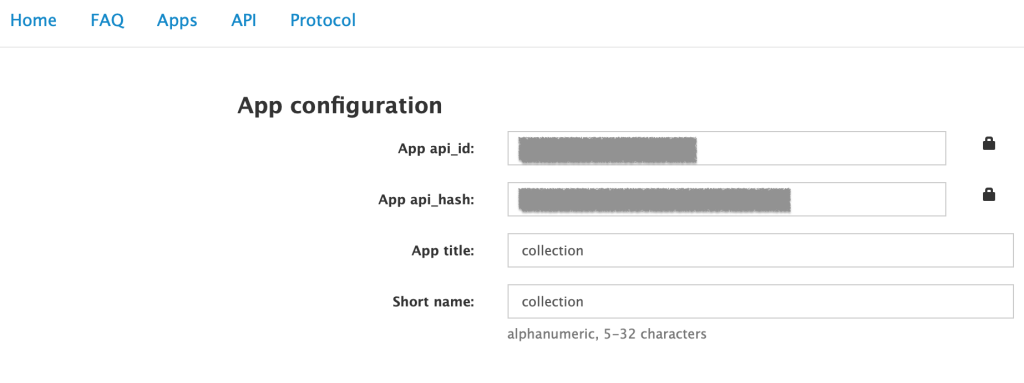

Before working with the Telegram API, you must obtain a personal API ID and hash. These are issued at my.telegram.org under "API development tools".

Once you have the API ID and hash, you can create a session and log into your account.
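
The API ID and hash are secrets, so it can help to keep them out of the script itself. Below is a minimal sketch that reads them from environment variables. The names `TG_API_ID` and `TG_API_HASH` and the helper `load_credentials` are hypothetical choices for this example, not something Telethon defines.

```python
import os


def load_credentials(env=os.environ):
    """Read the API ID and hash from environment variables.

    TG_API_ID and TG_API_HASH are hypothetical names chosen for this
    sketch; Telethon itself does not define or read them.
    """
    raw_id = env.get("TG_API_ID")
    api_hash = env.get("TG_API_HASH")
    if not raw_id or not api_hash:
        raise RuntimeError("Set TG_API_ID and TG_API_HASH before running")
    # The API ID must be an integer; the hash stays a string
    return int(raw_id), api_hash
```

The returned pair can then be passed to `TelegramClient` in place of hard-coded values, for example `api_id, api_hash = load_credentials()`.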

Important! Never name your script telethon.py. Python would then import your own file instead of the library, and importing TelegramClient would fail because of the name conflict.

To log into your account, first write:

    from telethon import TelegramClient

    api_id = 12345678
    api_hash = '0123456789abcdef0123456789abcdef'

    with TelegramClient('anon', api_id, api_hash) as client:
        client.loop.run_until_complete(client.send_message('me', 'hello'))

The first line imports the TelegramClient class. Next, we define variables that hold our API ID and hash.

Then we create a new instance of TelegramClient and call it client. On the first run, Telethon asks for your phone number and the login code that Telegram sends you, and stores the session in a file named anon.session. You can now use the client variable for anything, for example, sending yourself a message.

Before scraping Telegram channels, you need to pinpoint the data source. The platform has two primary community types: channels and groups.

If you need access to a private channel or group, you must be a member of it. Some communities impose restrictions or require invites, and these complications must be taken into account before you start scraping.

Consider an example of how to list your chats so that you can select the channel or group to scrape for members and other data:

    from telethon import TelegramClient

    api_id = 12345678
    api_hash = '0123456789abcdef0123456789abcdef'

    client = TelegramClient('anon', api_id, api_hash)

    async def main():
        # Basic information about the logged-in account
        me = await client.get_me()
        username = me.username
        print(username)
        print(me.phone)

        # Every conversation the account takes part in, with its ID
        async for dialog in client.iter_dialogs():
            print(dialog.name, 'has ID - ', dialog.id)

    with client:
        client.loop.run_until_complete(main())

This shows how to log in and read basic information about your account, along with all of your conversations and their IDs, which you need in order to work with the desired channel or group.
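
With many conversations, scanning the printed list by eye gets tedious. The sketch below searches dialog names for a substring. It is a hypothetical helper, not part of Telethon, and it only assumes that each item has `name` and `id` attributes, as the dialogs printed above do. The sample objects stand in for real dialogs.

```python
from types import SimpleNamespace


def find_dialogs(dialogs, query):
    """Return (name, id) pairs whose name contains `query`, ignoring case."""
    needle = query.lower()
    return [
        (d.name, d.id)
        for d in dialogs
        if d.name and needle in d.name.lower()
    ]


# Stand-in objects with made-up names and IDs, for illustration only
sample = [
    SimpleNamespace(name="Python News", id=-1001),
    SimpleNamespace(name="Family chat", id=-1002),
    SimpleNamespace(name="python jobs", id=-1003),
]
print(find_dialogs(sample, "python"))
```

Inside `main()`, the same function could be fed the result of `await client.get_dialogs()`.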

Once connected to the API, you can collect messages from the channel or group you are targeting. With Python Telegram scraping, you can obtain text messages, timestamps, media, and metadata about the participants.

Here’s an example of how to do it:

    from telethon import TelegramClient

    api_id = 12345678
    api_hash = '0123456789abcdef0123456789abcdef'

    client = TelegramClient('anon', api_id, api_hash)

    async def main():
        chat_id = 32987309847  # ID taken from the dialog list
        entity = await client.get_entity(chat_id)
        print(entity.title)
        print(entity.date)

        if entity.photo:  # Check if there is a profile photo
            await client.download_profile_photo(entity, file="profile.jpg")

        # Print all chat messages
        async for message in client.iter_messages(chat_id):
            print(f'{message.id} // {message.date} // {message.text}')

            # Save all chat photos
            if message.photo:
                path = await message.download_media()
                print('File saved', path)

    with client:
        client.loop.run_until_complete(main())

Once we have the required ID, we can access the group's messages and their metadata: the name of the group, its creation date, its avatar (if available), and the messages with their IDs and publication dates. If messages contain images, these can be downloaded automatically alongside the messages for further processing.
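
Printing is enough for a first look, but for further processing the messages usually need to be stored. The following sketch converts message-like objects into JSON Lines (one JSON object per line). The helpers `message_to_record` and `to_json_lines` are hypothetical, and they assume only the `id`, `date`, `sender_id`, and `text` attributes used elsewhere in this article. The `demo` object is a stand-in for a real message.

```python
import json
from datetime import datetime, timezone
from types import SimpleNamespace


def message_to_record(message):
    """Turn a message-like object into a JSON-serializable dict."""
    return {
        "id": message.id,
        "date": message.date.isoformat() if message.date else None,
        "sender_id": message.sender_id,
        "text": message.text or "",  # media-only messages have no text
    }


def to_json_lines(messages):
    """Serialize messages as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps(message_to_record(m), ensure_ascii=False) for m in messages
    )


# Stand-in message with made-up values, for illustration only
demo = SimpleNamespace(
    id=1,
    date=datetime(2024, 1, 1, tzinfo=timezone.utc),
    sender_id=42,
    text="hello",
)
print(to_json_lines([demo]))
```

The resulting string can be written to a file and loaded later with any tool that reads JSON Lines.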

Once all parameters have been set, the Python Telegram scraper script can be run. Some preliminary testing is advisable: try the script on a small sample first, because a run over a large set of messages will likely surface problems that should be fixed before full-scale data harvesting.

While running, make sure to follow these guidelines:

If issues arise, they can usually be resolved by pausing between requests or by confirming that the channel or group is still available.
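
Pausing between requests can be automated. Telethon reports rate limiting with `FloodWaitError`, whose `seconds` attribute holds the required wait. The sketch below relies only on that attribute, so it treats any exception carrying `seconds` as a signal to pause. The helper name `call_with_pauses` and its parameters are hypothetical.

```python
import asyncio


async def call_with_pauses(make_call, max_attempts=3, sleep=asyncio.sleep):
    """Await make_call(), pausing and retrying when the error names a wait time.

    Any exception with a `seconds` attribute is treated as a "slow down"
    signal. Other exceptions, and the final failed attempt, propagate.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return await make_call()
        except Exception as error:
            wait = getattr(error, "seconds", None)
            if wait is None or attempt == max_attempts:
                raise
            await sleep(wait)  # wait as long as the error asked for
```

Inside `main()`, a request could be wrapped as `await call_with_pauses(lambda: client.get_messages(channel, limit=100))`. The `sleep` parameter exists so the helper can be tried out without actually waiting.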

After basic data collection is automated, you can expand the scraper's feature set with new data gathering capabilities, such as keyword filtering and listing participants:

    from telethon import TelegramClient

    api_id = 12345678
    api_hash = '0123456789abcdef0123456789abcdef'

    client = TelegramClient('session_name', api_id, api_hash)

    async def main():
        channel = await client.get_entity(-4552839301)

        # Get the last 100 messages from the channel
        messages = await client.get_messages(channel, limit=100)

        # Filter messages by keyword; media-only messages have no text, so skip them
        keyword = 'Hello'
        filtered_messages = [
            msg for msg in messages
            if msg.text and keyword.lower() in msg.text.lower()
        ]

        for message in filtered_messages:
            print(f"Message: {message.text}")
            print(f"Date: {message.date}")
            print(f"Sender: {message.sender_id}")

        # Get channel participants (broadcast channels usually require admin rights for this)
        participants = await client.get_participants(channel)
        for participant in participants:
            print(f"Participant ID: {participant.id}, Username: {participant.username}")

    with client:
        client.loop.run_until_complete(main())
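
Filtering often involves more than one keyword. As a small extension of the idea above, the following sketch keeps messages that mention any word from a list. `filter_by_keywords` is a hypothetical helper that assumes only a `text` attribute on each message. The sample objects are stand-ins for real messages.

```python
from types import SimpleNamespace


def filter_by_keywords(messages, keywords):
    """Keep messages whose text contains at least one keyword, ignoring case."""
    lowered = [k.lower() for k in keywords]
    return [
        m for m in messages
        # Messages without text (for example, photos) are skipped
        if m.text and any(k in m.text.lower() for k in lowered)
    ]


# Stand-in messages with made-up text, for illustration only
sample_messages = [
    SimpleNamespace(text="Hello everyone"),
    SimpleNamespace(text=None),
    SimpleNamespace(text="Big SALE today"),
    SimpleNamespace(text="Nothing relevant"),
]
for m in filter_by_keywords(sample_messages, ["hello", "sale"]):
    print(m.text)
```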

When working with Telegram’s API, keep in mind that the number of calls you can make within a given period is capped. Sending too many requests will cause the platform to restrict API access or slow down its responses. For large-scale scraping where Telegram may flag repeated requests, residential proxies rotate your IP address to help avoid temporary blocks and restrictions.

To mitigate these issues, using proxies is advised:

    import random
    import socks  # provided by the PySocks package
    from telethon import TelegramClient

    api_id = 12345678
    api_hash = '0123456789abcdef0123456789abcdef'

    # List of available proxies, in the order Telethon expects:
    # (proxy type, host, port, rdns, username, password)
    proxy_list = [
        (socks.SOCKS5, "res.proxy-seller.com", 10000, True, "user1", "pass1"),
        (socks.SOCKS5, "res.proxy-seller.com", 10001, True, "user2", "pass2"),
        (socks.SOCKS5, "res.proxy-seller.com", 10002, True, "user3", "pass3"),
    ]

    # Select a random proxy
    proxy = random.choice(proxy_list)

    client = TelegramClient('anon', api_id, api_hash, proxy=proxy)

    try:
        # Creating the client does not open a connection; start() does
        client.start()
        print(f"Successfully connected through proxy: {proxy[1]}:{proxy[2]}")
    except (OSError, ConnectionError, TimeoutError, ValueError) as e:
        print(f"Proxy error: {e}, trying without it")
        client = TelegramClient('anon', api_id, api_hash)
        client.start()

An alternative approach is to use a pool of static IP addresses, such as IPv6 proxies, instead of relying on a single dynamic IP. This setup improves connection stability and reduces the likelihood of interruptions.

In the example above, a proxy is picked at random from the list for each new connection, with a fallback to a direct connection if the proxy fails. This strategy reduces the chance of Telegram API access being restricted or slowed down, so the scripts run more reliably.
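
If every proxy should be used in turn rather than at random, a round-robin rotation is one alternative. This is a minimal sketch built on `itertools.cycle`. The hosts under `proxy.example.com` are placeholders, `next_proxy` is a hypothetical helper, and the tuples are assumed to follow the (proxy type, host, port) order.

```python
from itertools import cycle

# Placeholder proxies for illustration; replace with real ones
PROXIES = [
    ("socks5", "proxy.example.com", 10000),
    ("socks5", "proxy.example.com", 10001),
    ("socks5", "proxy.example.com", 10002),
]

# cycle() repeats the list endlessly, so the rotation never runs out
_rotation = cycle(PROXIES)


def next_proxy():
    """Return the next proxy in round-robin order."""
    return next(_rotation)


for _ in range(4):
    print(next_proxy())
```

Each new client would then be created with `proxy=next_proxy()`, so consecutive connections go through different servers.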

Similar proxy configurations can also be applied to other messaging platforms that use API-based connections, for instance WhatsApp automation and data extraction. These proxies help maintain stable sessions and prevent access interruptions during large-scale scraping or account management.

For reliable request routing and rate-limit management when using Telethon at scale, consider using a reputable proxy provider for Telegram.

Telegram scraping with Python is useful across many sectors, because the platform hosts a wide variety of unique data that is not available anywhere else.

Key application areas include:

Indeed, with a Telegram channel scraper you can obtain the necessary data automatically and simplify its further analysis.
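
As one small illustration of such analysis, the sketch below counts collected messages per day to show how active a community is. `messages_per_day` is a hypothetical helper that assumes each message has a `date` attribute holding a datetime, as the messages retrieved earlier do. The sample objects are stand-ins.

```python
from collections import Counter
from datetime import datetime, timezone
from types import SimpleNamespace


def messages_per_day(messages):
    """Count messages per calendar day, keyed by ISO date string."""
    return Counter(
        m.date.date().isoformat() for m in messages if m.date
    )


# Stand-in messages with made-up dates, for illustration only
sample_dates = [
    SimpleNamespace(date=datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)),
    SimpleNamespace(date=datetime(2024, 1, 1, 18, 30, tzinfo=timezone.utc)),
    SimpleNamespace(date=datetime(2024, 1, 2, 7, 15, tzinfo=timezone.utc)),
]
for day, count in sorted(messages_per_day(sample_dates).items()):
    print(day, count)
```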

In this guide, we tackled scraping Telegram with Python and extracting content from chosen communities. We installed the Telethon library, obtained API credentials, selected the required channels or groups, and retrieved messages, media, and metadata. Proxies were a major point of focus for keeping execution uninterrupted.

Scraping Telegram with Python automates data collection for marketing research, content analysis, and even user activity monitoring. To work around restrictions imposed by the platform, you can also use account rotation, proxies, VPNs, or dynamic timeouts between requests. You must also stay within the law and ethical boundaries and avoid breaching the platform’s policies.
