JSON stands for JavaScript Object Notation. It is a lightweight format that is easy for humans to read and write and simple for machines to parse and generate. Parsing it is a core task for any Python developer who works with content from APIs, config files, or other sources of stored data. This article walks you through the basics of parsing JSON with Python's built-in json module, including how to use json.dump() to save content.

JSON structures details in key-value pairs. Here's a basic example of an object:

{
    "name": "Alice",
    "age": 30,
    "is_student": false,
    "courses": ["Math", "Science"]
}

This JSON example contains the common elements: a string, a number, a boolean, and an array. Getting familiar with this structure makes it much easier to work with JSON in Python.
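To make the structure concrete, here is a minimal sketch that parses the object above and reports which Python type each value becomes. The variable names are chosen for illustration only.

```python
import json

json_text = '{"name": "Alice", "age": 30, "is_student": false, "courses": ["Math", "Science"]}'
parsed = json.loads(json_text)

# Map each key to the name of the Python type the json module produced for it.
type_names = {key: type(value).__name__ for key, value in parsed.items()}
print(type_names)
# {'name': 'str', 'age': 'int', 'is_student': 'bool', 'courses': 'list'}
```

The JSON object itself becomes a Python dict, and the JSON literal false becomes Python's False.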

You can parse a JSON string in Python easily using the built-in json module. This module includes json.loads() for reading from a string and json.load() for reading from a file. Conversely, json.dumps() and json.dump() write data to a string and a file, respectively.

Let's start with reading JSON data.

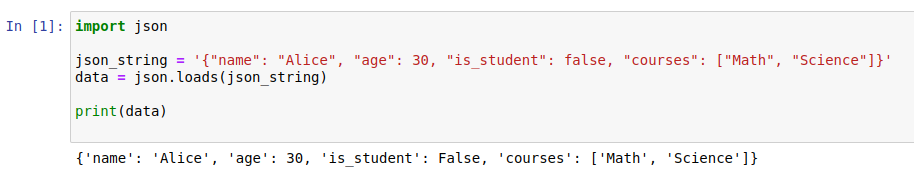

If you want to read JSON in Python, then use json.loads() to parse a string and convert it into a Python object:

import json

json_string = '{"name": "Alice", "age": 30, "is_student": false, "courses": ["Math", "Science"]}'
data = json.loads(json_string)

print(data)

Output:

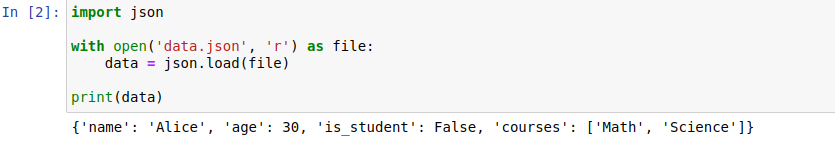

To read JSON from a file, open the file and pass the file object to json.load():

import json

with open('data.json', 'r') as file:
    data = json.load(file)

print(data)

Output:

Before writing, you often need to load existing data, and that is where json.load() is useful. Once the data is loaded, you can manipulate it and write it back in various formats.
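The load, modify, and write-back cycle can be sketched as follows. The file path and the sample record are assumptions made for illustration; a temporary directory is used so the sketch does not touch real files.

```python
import json
import os
import tempfile

# Hypothetical file location; a temporary directory keeps the sketch self-contained.
path = os.path.join(tempfile.mkdtemp(), "data.json")

# Create a small file to work with.
with open(path, "w", encoding="utf-8") as file:
    json.dump({"name": "Alice", "age": 30, "courses": ["Math"]}, file)

# Load the existing content, change it in memory, then write it back.
with open(path, "r", encoding="utf-8") as file:
    record = json.load(file)

record["age"] += 1
record["courses"].append("Science")

with open(path, "w", encoding="utf-8") as file:
    json.dump(record, file, indent=2)  # indent=2 makes the saved file easier to read

with open(path, "r", encoding="utf-8") as file:
    print(json.load(file))
```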

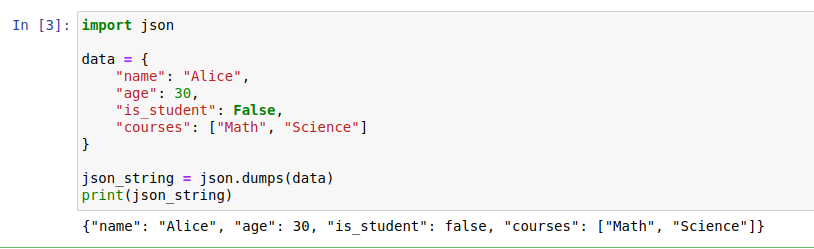

To write data to a string, use the json.dumps() method:

import json

data = {
    "name": "Alice",
    "age": 30,
    "is_student": False,
    "courses": ["Math", "Science"]
}

json_string = json.dumps(data)

print(json_string)

Output:

To write the details of this file, use the json.dump Python method:

import json

data = {

"name": "Alice",

"age": 30,

"is_student": False,

"courses": ["Math", "Science"]

}

with open('data.json', 'w') as file:

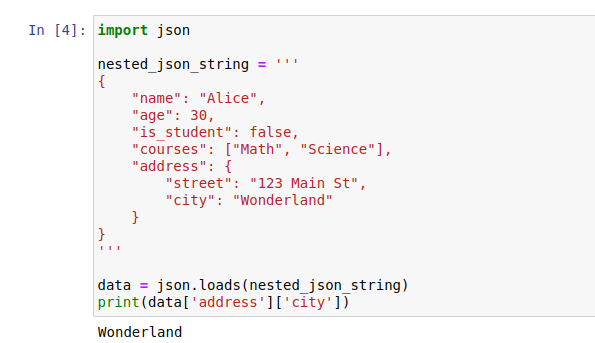

json.dump(data, file)Nested objects are common when working with more complex information structures, which can easily handle these nested structures.

import json

nested_json_string = '''
{
    "name": "Alice",
    "age": 30,
    "is_student": false,
    "courses": ["Math", "Science"],
    "address": {
        "street": "123 Main St",
        "city": "Wonderland"
    }
}
'''

data = json.loads(nested_json_string)

print(data['address']['city'])

Output:

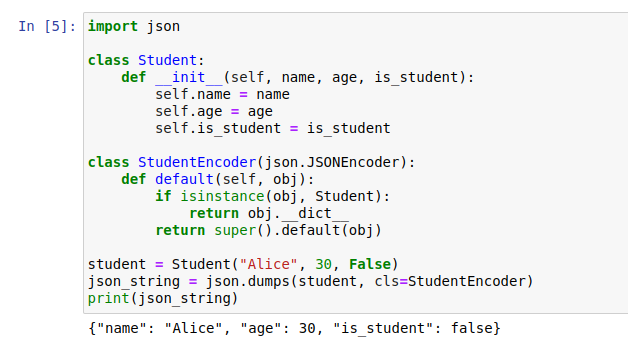
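Direct indexing such as data['address']['city'] raises a KeyError when any level is missing. One possible way to read nested values defensively is a small helper like the one below; get_nested is a hypothetical name, not part of the json module.

```python
import json

data = json.loads('{"name": "Alice", "address": {"city": "Wonderland"}}')


def get_nested(obj, *keys, default=None):
    """Walk through nested dictionaries, returning default if any key is missing."""
    for key in keys:
        if not isinstance(obj, dict) or key not in obj:
            return default
        obj = obj[key]
    return obj


city = get_nested(data, "address", "city")
zip_code = get_nested(data, "address", "zip", default="unknown")
print(city, zip_code)
```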

Python's json module can't automatically serialize custom objects to JSON. In those cases, you'll need to create a custom encoder.

import json

class Student:
    def __init__(self, name, age, is_student):
        self.name = name
        self.age = age
        self.is_student = is_student

class StudentEncoder(json.JSONEncoder):
    def default(self, obj):
        # Serialize Student instances as a dictionary of their attributes
        if isinstance(obj, Student):
            return obj.__dict__
        # Let the base class raise TypeError for unsupported types
        return super().default(obj)

student = Student("Alice", 30, False)
json_string = json.dumps(student, cls=StudentEncoder)

print(json_string)

Output:

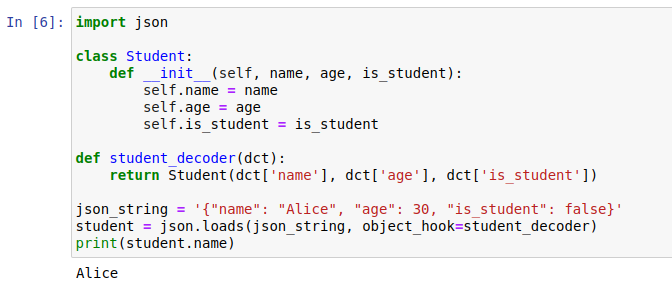
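As an alternative to subclassing json.JSONEncoder, json.dumps() also accepts a default function that is called for any object it cannot serialize on its own. The sketch below shows that variant with the same Student class; to_serializable is an illustrative name.

```python
import json


class Student:
    def __init__(self, name, age, is_student):
        self.name = name
        self.age = age
        self.is_student = is_student


def to_serializable(obj):
    """Fallback called by json.dumps for objects it cannot serialize itself."""
    if isinstance(obj, Student):
        return vars(obj)
    raise TypeError(f"Object of type {type(obj).__name__} is not JSON serializable")


student_json = json.dumps(Student("Alice", 30, False), default=to_serializable)
print(student_json)
# {"name": "Alice", "age": 30, "is_student": false}
```

A function is often enough for a one-off conversion, while an encoder class is easier to reuse across a larger codebase.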

To deserialize into custom objects, you’ll need to implement a custom decoder that knows how to handle them.

import json

class Student:
    def __init__(self, name, age, is_student):
        self.name = name
        self.age = age
        self.is_student = is_student

def student_decoder(dct):
    return Student(dct['name'], dct['age'], dct['is_student'])

json_string = '{"name": "Alice", "age": 30, "is_student": false}'
student = json.loads(json_string, object_hook=student_decoder)

print(student.name)

Output:
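One caveat: json.loads() calls object_hook for every JSON object it decodes, including nested ones, so a hook that always builds a Student raises KeyError as soon as the document contains any other kind of object. A minimal sketch of a more tolerant hook follows; the STUDENT_KEYS set and the sample document are assumptions for illustration.

```python
import json


class Student:
    def __init__(self, name, age, is_student):
        self.name = name
        self.age = age
        self.is_student = is_student


STUDENT_KEYS = {"name", "age", "is_student"}


def student_hook(dct):
    """Convert only dictionaries that look like a student; leave others unchanged."""
    if STUDENT_KEYS.issubset(dct):
        return Student(dct["name"], dct["age"], dct["is_student"])
    return dct


text = '{"class": "Math", "students": [{"name": "Alice", "age": 30, "is_student": false}]}'
result = json.loads(text, object_hook=student_hook)
print(result["class"], result["students"][0].name)
```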

This section covers practical ways to parse JSON from APIs in Python. Almost all modern APIs deliver data in JSON format, so knowing how to retrieve and parse it is essential.

Use the requests library (pip install requests) to make GET or POST calls.

import requests

response = requests.get('https://api.proxy-seller.com/v1/proxies')

Extract JSON directly using:

data = response.json()

This method is preferred over manually parsing with json.loads(response.text) because it automatically handles decoding.

When working with APIs, authentication matters. You typically use API keys or OAuth tokens in request headers:

headers = {'Authorization': 'Bearer YOUR_TOKEN'}
response = requests.get(url, headers=headers)

If the API paginates results, combine pages with a loop:

all_results = []
page = 1

while True:
    response = requests.get(url, params={'page': page}, headers=headers)
    data = response.json()
    all_results.extend(data['items'])
    if not data.get('next_page'):
        break
    page += 1

Handle HTTP errors by checking response.status_code. Retry or log errors for 4xx or 5xx status codes to build resilient scripts.
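The status-code check can be sketched as a small helper. This is only one possible policy, assumed for illustration: 5xx responses are retried a few times, while 4xx responses fail immediately. The function name and parameters are hypothetical, and the request function is passed in (for example requests.get) so the helper does not depend on a particular HTTP client.

```python
import time


def get_json_with_retry(get, url, retries=3, delay=0.5, **kwargs):
    """Call get(url, **kwargs) and return the parsed JSON body.

    5xx responses are retried up to `retries` attempts in total; other error
    statuses raise immediately, because repeating a client error rarely helps.
    """
    for attempt in range(1, retries + 1):
        response = get(url, **kwargs)
        status = response.status_code
        if status < 400:
            return response.json()
        if status < 500 or attempt == retries:
            raise RuntimeError(f"Request to {url} failed with status {status}")
        print(f"Attempt {attempt} got status {status}; retrying")
        time.sleep(delay * attempt)  # wait a little longer after each failure


# Usage with the requests library (assumes `url` and `headers` are defined):
# data = get_json_with_retry(requests.get, url, headers=headers)
```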

For example, parsing Proxy-Seller API’s JSON responses fits smoothly into your Python workflows. It provides reliable, fast proxies ideal for scraping and API interactions that return JSON. The provider offers comprehensive API access supporting Python, making it easy to integrate proxy management into your automation. Their stable infrastructure ensures high uptime and speed, essential when dealing with many API requests or large paginated data. Use Proxy-Seller’s 24/7 support and detailed docs to solve errors quickly and optimize your proxy setup while parsing JSON from APIs.

This section shows how to parse JSON data in Python more effectively using specialized tools. When dealing with complex or nested JSON, basic parsing isn't always enough. Let's explore two powerful libraries: JMESPath and ChompJS.

JMESPath is a JSON query language designed to query deeply nested JSON data.

For example, to access a nested field, you write:

jmespath.search('store.book[0].title', json_data)

Filtering lists is where jmespath shines. Suppose you want books priced less than $10:

jmespath.search('store.book[?price < `10`]', json_data)

You can also project specific fields across lists:

jmespath.search('store.book[].author', json_data)

JMESPath integrates well with API data retrieval and automation scripts, making complex extraction efficient without writing manual loops. When using JMESPath, handle errors gracefully. If the query is invalid, catch exceptions and test with simple queries first to debug.

ChompJS addresses another challenge: parsing JavaScript object literals that are not valid JSON. json.loads() sometimes fails on such data because of single quotes, unquoted keys, trailing commas, or functions inside the data.

For example, after fetching a web page, use:

chompjs.parse_js_object(js_variable_string)

This returns a Python dictionary you can work with as if it were parsed JSON. Using chompjs over json.loads gives you more flexibility and saves time in messy web scraping projects.

Here are key advantages of ChompJS when you parse JSON in Python from web data:

Combine jmespath and chompjs for efficient, robust parsing of complex JSON responses and embedded JavaScript data. This approach speeds up web scraping and automation tasks.

This section shows how to validate and clean JSON data before or after you parse it with Python. Validating the JSON structure prevents runtime errors during parsing or processing.

Use the jsonschema library (pip install jsonschema) to enforce JSON format rules. Define a schema specifying required fields, data types, and constraints.

Here is a simple example of a validation schema:

schema = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
        # Note: "format" is only enforced when a format checker is passed to validate()
        "email": {"type": "string", "format": "email"},
    },
    "required": ["name", "email"]
}

Validate your JSON data with:

from jsonschema import validate, ValidationError

try:
    validate(instance=data, schema=schema)
except ValidationError as e:
    print(f"JSON validation error: {e.message}")

After validation, clean JSON data by removing null values, fixing inconsistent formats, or converting types.

Use pandas for advanced cleaning:

import pandas as pd

df = pd.json_normalize(data)        # flatten nested JSON into columns
df.dropna(inplace=True)             # drop rows that contain missing values
df['age'] = df['age'].astype(int)   # convert the age column to integers

Common issues you'll handle include missing keys, wrong data types, or malformed arrays.

Practical checklist for cleaning JSON data:

Validating and cleaning JSON upfront ensures your Python scripts run smoothly and the data you depend on is reliable. This is a critical step before parsing JSON files or streams in Python projects.
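For small payloads, the cleaning steps described above (removing nulls, converting types, filling in missing keys) can also be sketched in plain Python without pandas. The field names and the rules below are assumptions chosen for illustration.

```python
def drop_nulls(value):
    """Recursively remove None values from dictionaries and lists."""
    if isinstance(value, dict):
        return {k: drop_nulls(v) for k, v in value.items() if v is not None}
    if isinstance(value, list):
        return [drop_nulls(item) for item in value if item is not None]
    return value


def clean_record(record):
    """Apply a few illustrative cleaning rules to one parsed JSON object."""
    cleaned = drop_nulls(record)
    # Convert a numeric string such as " 30 " to an integer; leave other values alone.
    age = cleaned.get("age")
    if isinstance(age, str) and age.strip().isdigit():
        cleaned["age"] = int(age)
    # Supply a default for a key that later code expects to exist.
    cleaned.setdefault("courses", [])
    return cleaned


raw = {"name": "Alice", "age": " 30 ", "email": None, "tags": ["a", None]}
print(clean_record(raw))
# {'name': 'Alice', 'age': 30, 'tags': ['a'], 'courses': []}
```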

Working with JSON data can lead to several common errors, particularly when parsing, generating, or accessing structured content. Using a reliable Python JSON parser can help identify and fix these issues more efficiently. Here are some of the most common ones:

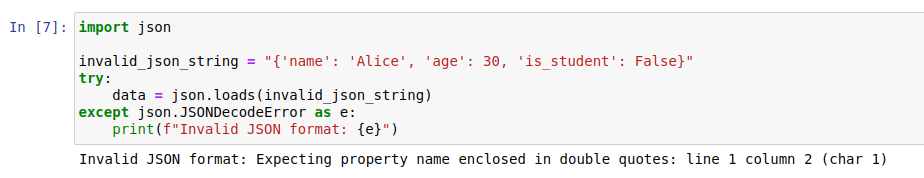

A common error when parsing a JSON file is an invalid format. JSON requires double quotes around keys and string values, and brackets and braces must be properly nested.

import json

# Single quotes and the Python literal False make this string invalid JSON
invalid_json_string = "{'name': 'Alice', 'age': 30, 'is_student': False}"

try:
    data = json.loads(invalid_json_string)
except json.JSONDecodeError as e:
    print(f"Invalid JSON format: {e}")

Output:
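When the input is longer than one line, the exception's attributes help locate the problem. A minimal sketch, using an invented document with a mismatched bracket:

```python
import json

# Hypothetical broken document: the "courses" array is closed with } instead of ].
broken = '{"name": "Alice",\n "age": 30,\n "courses": ["Math", "Science"}'

lineno = colno = pos = None
try:
    json.loads(broken)
except json.JSONDecodeError as error:
    # msg, lineno, colno and pos describe what went wrong and where.
    lineno, colno, pos = error.lineno, error.colno, error.pos
    print(f"{error.msg} at line {lineno}, column {colno}")
    print(f"Offending character: {broken[pos]!r}")
```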

Sometimes, content doesn't include all the keys you expect. Using the get() method lets you safely handle missing keys by returning a default value instead of raising an error.

import json

json_string = '{"name": "Alice", "age": 30}'
data = json.loads(json_string)

is_student = data.get('is_student', False)

print(is_student)

Use the pdb module to set breakpoints and debug your parsing code.

import json
import pdb

json_string = '{"name": "Alice", "age": 30, "is_student": false}'

pdb.set_trace()  # execution pauses here so you can inspect variables

data = json.loads(json_string)

print(data)

Web scraping often involves retrieving content from services that return their responses as JSON. Below is an example that uses the requests library with the endpoint https://httpbin.org/anything.

Before we start, check that you’ve installed the requests package:

pip install requests

The requests library makes working with structured content easier. Issue a GET request with requests.get(url), then parse the response with response.json(). From there, it’s simple to access specific pieces of information such as the headers, the user agent, the origin, or the request URL and print them as needed.

The code also handles errors: it catches json.JSONDecodeError when the response body cannot be decoded and KeyError when an expected key is absent, so the program does not crash on missing or malformed data. This kind of robustness matters in real web scraping tasks.

import requests
import json

url = 'https://httpbin.org/anything'
response = requests.get(url)

try:
    data = response.json()

    # Extracting specific data from the JSON response
    headers = data['headers']
    user_agent = headers.get('User-Agent', 'N/A')
    origin = data.get('origin', 'N/A')
    request_url = data.get('url', 'N/A')

    print(f"User Agent: {user_agent}")
    print(f"Origin: {origin}")
    print(f"URL: {request_url}")

except json.JSONDecodeError:
    print("Error decoding JSON response")
except KeyError as e:
    print(f"Key error: {e} not found in the JSON response")

Every developer should know how to parse a JSON file in Python. With the json module and the practices highlighted in this guide, you’ll be able to read, write, and debug JSON quickly. Test your code regularly and use the right tools for the job. This guide focuses on parsing JSON data in Python to help you master these tasks efficiently.

When doing web scraping, parsing becomes essential since content from web APIs often comes in this format. Being skilled in processing and manipulating it allows you to extract valuable information from a variety of sources efficiently, especially when combined with proxies for web scraping, which help maintain stability, anonymity, and uninterrupted data collection.

Parsing is a key skill for any developer working with web APIs, config files, or external sources. Python's built-in json module simplifies reading from a string or file, writing structured output, and dealing with nested and custom objects. Developers can use json.load(), json.loads(), json.dump(), and json.dumps(), learn how to deal with common problems, implement custom encoders or decoders, and build applications that are robust and dependable.

Given that this format is a web standard for exchanging information, these skills are valuable in scraping, manipulating content, and integrating multiple services via APIs. A few lines of Python are enough to read a JSON file in any project, and json.dump() saves the data back just as easily.
