Monitoring search results (SERP) is the backbone of accurate SEO analytics and budget planning for websites. After Google changed its algorithms, familiar data collection methods became more expensive and slower. Teams now need a technical foundation that keeps data collection stable and accurate at scale. In this context, a SERP proxy has become a core tool for consistent rank tracking and competitive analysis.

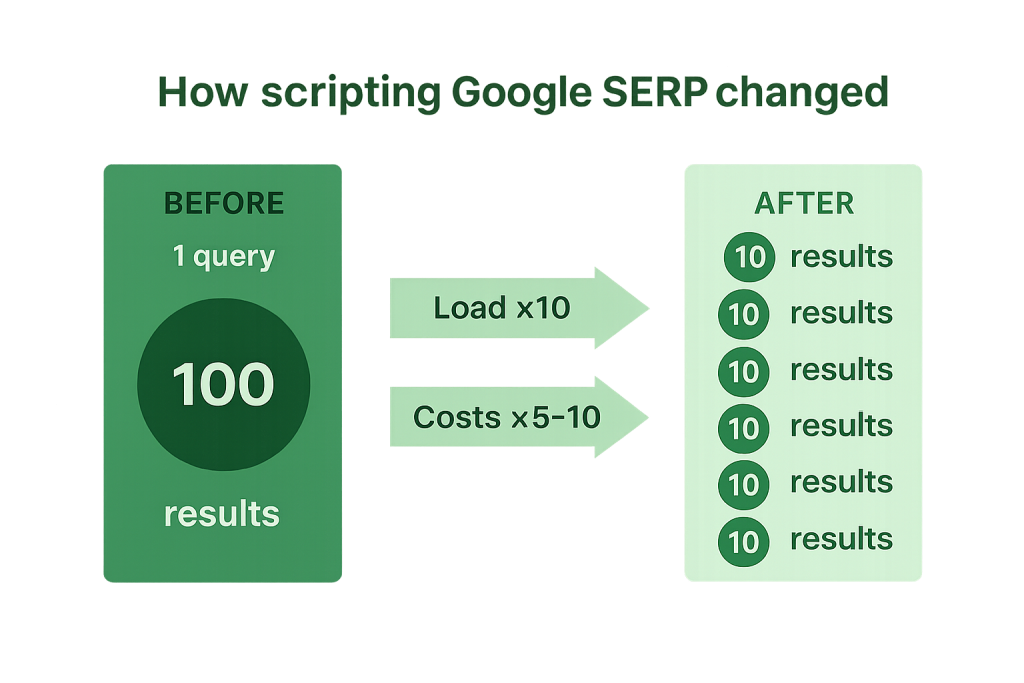

Google removed support for the &num=100 parameter. The results page now returns no more than 10 results per request, ignoring the num parameter. To get the top 100, you must send 10 separate calls. This leads to more requests overall, higher load on parsers, extra spending on IP pools and server capacity, and a more complex setup for SERP monitoring at the enterprise level.
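To make the "10 separate calls" concrete, here is a minimal sketch that builds the page URLs needed to cover the top 100 for one query. It assumes pagination through a `start` offset parameter and a base URL of `https://www.google.com/search`; neither is named in this article, and the helper `paged_serp_urls` is a hypothetical name used only for illustration.

```python
from urllib.parse import urlencode

RESULTS_PER_PAGE = 10  # per the article: at most 10 results per request


def paged_serp_urls(query: str, depth: int = 100,
                    base_url: str = "https://www.google.com/search") -> list[str]:
    """Return one URL per results page needed to cover the top `depth` positions.

    Assumption: pages are addressed with a `start` offset (0, 10, 20, ...).
    """
    urls = []
    for offset in range(0, depth, RESULTS_PER_PAGE):
        params = {"q": query, "start": offset}
        urls.append(f"{base_url}?{urlencode(params)}")
    return urls


urls = paged_serp_urls("serp proxy")
print(len(urls))  # 10
print(urls[0])    # https://www.google.com/search?q=serp+proxy&start=0
```

The sketch only builds URLs; sending them, routing them through a proxy pool, and parsing the responses are separate steps.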

What used to be solved with a single request now requires a whole chain of calls. For large agencies, SaaS platforms, and in-house SEO teams this is not just “more load” – it fundamentally changes the cost of data.

Every additional call increases network load, expands the required IP pool, and consumes extra traffic.

If you previously needed 10,000 requests to analyze 10,000 keywords, now you need 100,000. This 10× difference directly affects:

Many SEO services have already seen infrastructure costs go up by 30–50%, and companies that rely on third-party SERP data APIs report their expenses growing 2–3×.
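The request arithmetic behind these numbers can be sketched in a few lines. The function name `requests_needed` and its parameters are illustrative assumptions; the figures are the ones used in this article.

```python
import math


def requests_needed(keywords: int, depth: int = 100,
                    page_size: int = 10, regions: int = 1) -> int:
    """Estimate calls per monitoring run: keywords x regions x pages per keyword."""
    pages_per_keyword = math.ceil(depth / page_size)
    return keywords * regions * pages_per_keyword


# Before the change: one call returned up to 100 results.
print(requests_needed(10_000, page_size=100))  # 10000
# After the change: 10 results per call.
print(requests_needed(10_000))                 # 100000
# A multi-region workload, e.g. 40,000 keywords across 12 regions.
print(requests_needed(40_000, regions=12))     # 4800000
```

Multiplying the result by the refresh frequency gives a rough weekly or monthly request budget to compare against IP pool and traffic costs.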

Google has become more sensitive to high-volume access. When you send many calls from the same IPs, you risk:

As a result, analytics becomes distorted: some data is missing, outdated, or duplicated.

Previously, SEO parsers often followed a simple pattern: “one request — one result”. That architecture no longer holds up. Teams are moving to asynchronous and batch-oriented pipelines:

On top of that, teams introduce a new prioritization logic for keywords:

This approach can cut the total number of calls by 25–40% while preserving analytical depth.

Errors in SERP data collection lead to distorted reports and, consequently, poor business decisions, highlighting the need for a secure data collection process. As the load grows, stability becomes more important than raw speed.

SEO platforms now evaluate not only position accuracy but also the availability of the collection layer: the share of successful calls, response time, and the percentage of CAPTCHAs and blocks.

Many companies create their own monitoring quality dashboards that track:

These dashboards help teams tune request frequency and volume in time and optimize their IP pools.
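As a rough illustration of what such a dashboard aggregates, the sketch below computes the availability metrics mentioned above from a list of per-call records. The `CallRecord` structure and its field names are assumptions made for this example, not a format used by any specific tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    ok: bool            # response parsed successfully
    captcha: bool       # a CAPTCHA page was returned
    blocked: bool       # the request was refused or rate-limited
    response_ms: float  # total response time in milliseconds


def summarize(records: list[CallRecord]) -> dict[str, float]:
    """Aggregate call records into the shares and averages a dashboard would plot."""
    total = len(records)
    if total == 0:
        return {"success_rate": 0.0, "captcha_rate": 0.0,
                "block_rate": 0.0, "avg_response_ms": 0.0}
    return {
        "success_rate": sum(r.ok for r in records) / total,
        "captcha_rate": sum(r.captcha for r in records) / total,
        "block_rate": sum(r.blocked for r in records) / total,
        "avg_response_ms": mean(r.response_ms for r in records),
    }


sample = [
    CallRecord(ok=True, captcha=False, blocked=False, response_ms=200),
    CallRecord(ok=True, captcha=False, blocked=False, response_ms=300),
    CallRecord(ok=True, captcha=False, blocked=False, response_ms=250),
    CallRecord(ok=False, captcha=True, blocked=False, response_ms=1250),
]
print(summarize(sample))
```

Computing these values per IP pool or per region, rather than globally, makes it easier to see which part of the infrastructure is degrading.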

This situation clearly showed how critical such infrastructure is: whoever controls it, controls their data.

For businesses, proxies are no longer a “supporting utility” — they are part of the operating model of SEO analytics.

Well-designed infrastructure enables:

Many large agencies now allocate separate budgets for IP infrastructure and proxy services — just like they previously did for content and backlinks.

| Metric | Before Google Update | After Google Update |
|---|---|---|
| Requests needed to get top-100 per keyword | 1 | 10 |
| Average parser load | Low | 5–10× higher |
| Monitoring resilience | Stable | Depends on IP rotation |
| Speed of SEO decision-making | Higher | Lower without optimization |

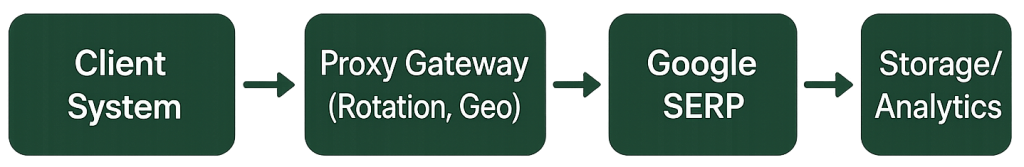

A search engine proxy setup is a managed pool of IP addresses (residential, mobile, ISP, or datacenter) that your SEO system uses to send requests to the search results page and retrieve the results. This architecture solves three key tasks:

Google SERP proxy servers are a set of IP addresses and auxiliary tools (gateway, rotation rules, geo-targeting, rate limits) that are optimized for search engine access. They help you collect data correctly under multiple calls while staying within technical constraints.

Types of intermediaries and where they fit:

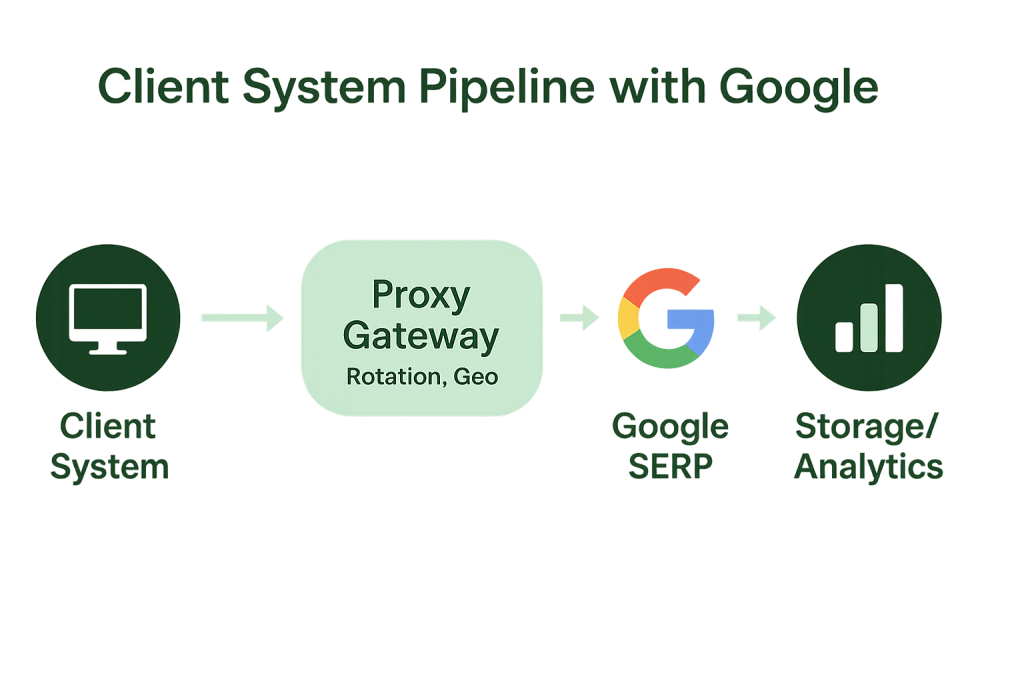

After the Google update, companies started looking for ways to optimize rank tracking and reduce their new infrastructure costs. The main goal is to preserve data accuracy and processing speed without inflating the infrastructure budget. The solution usually revolves around well-designed data pipelines plus a managed SERP proxy setup.

In SEO monitoring, a pipeline is the technical sequence that every request passes through: from scheduling and sending to the search engine, to receiving and processing the response. A robust pipeline includes:

This setup helps distribute load evenly, keep results consistent, and scale operations without downtime.

Modern work with SERP is best described as managed data collection: instead of just firing requests, the SEO team builds a controlled system with analytics, automation, and metrics. This reduces load, improves accuracy, and keeps monitoring costs predictable.

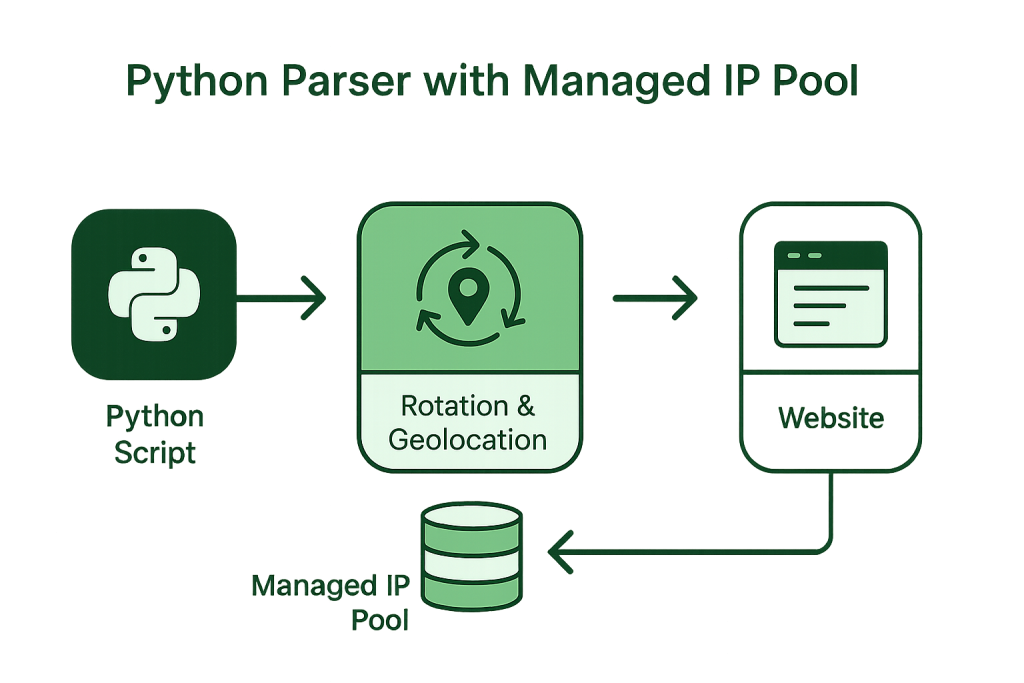

One of the most flexible options is to build a custom Python parser that works with SERPs via a managed IP pool. This type of tool can:

In practice, this approach lets you collect top-100 results for thousands of queries without interruptions or IP blocks, while keeping load predictable. Similar setups are described on the SerpApi blog, which notes that integrating Python parsers with IP rotation cuts server load by 2–3× and raises data accuracy by up to 40%.

Proxies are now a standard component of modern SEO tools. For example, when you use a proxy in GSA, you can clearly see how a properly configured IP pool helps automate rank tracking and avoid disruptions in data collection. The same logic applies to other SERP monitoring systems: the key is to keep connections stable and distribute requests evenly across IPs.

| Scenario | Problem | Solution via proxies & pipelines |
|---|---|---|
| Large-scale SERP monitoring for thousands of keywords | Overload and temporary limits | Pool of 100+ IPs, rotation every 5–10 minutes, batched requests |
| Regional position checks | Inaccurate results when using a single geo | Residential or ISP solutions with the right geo and consistent throughput |
| SERP & ads on different devices | Differences vs desktop SERPs | Mobile IPs, tuned user-agents and timing |
| Cost control | Rising costs due to many requests | Caching, TTL 24–48h, pay-as-you-go model |
| Integrations with external SEO tools | API rate limits | Proxy gateway + adaptive request windows and backoff mechanisms |
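The "rotation every 5–10 minutes" entry in the table can be sketched as a small time-based selector. This is a simplified illustration under stated assumptions: the class name `TimedRotator` is hypothetical, the proxy addresses come from a documentation-only IP range, and a managed gateway would normally handle rotation for you.

```python
import time


class TimedRotator:
    """Pick the active proxy from a pool, switching every `interval_s` seconds."""

    def __init__(self, proxies, interval_s=300, clock=time.monotonic):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._proxies = list(proxies)
        self._interval = interval_s
        self._clock = clock          # injectable so the logic can be tested
        self._started = clock()

    def current(self) -> str:
        """Return the proxy assigned to the current time window."""
        elapsed = self._clock() - self._started
        index = int(elapsed // self._interval) % len(self._proxies)
        return self._proxies[index]


# Placeholder addresses from the 203.0.113.0/24 documentation range.
pool = ["http://203.0.113.10:8080",
        "http://203.0.113.11:8080",
        "http://203.0.113.12:8080"]
rotator = TimedRotator(pool, interval_s=300)  # 5-minute windows
print(rotator.current())
```

Tying the choice to a time window rather than to each request keeps all pages of one keyword on the same IP for the duration of the window, which tends to give more consistent results than per-request switching.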

Many teams choose a hybrid model:

Integrating these approaches with providers like Proxy-Seller helps companies save up to 30% of their budget while maintaining high accuracy and resilience. The business gains control over every stage: from planning request frequency to distributing traffic across IP pools and regions.

Real-world examples show how companies and SEO platforms adapted to the new Google SERP behavior and implemented proxy-based solutions to increase data accuracy and reduce costs. Below are cases from corporate B2B projects, SEO services, and agencies that optimized their data collection, rebuilt pipelines, and achieved stable results.

Initial setup: 40,000 keywords × 12 regions × weekly top-100 updates. After the Google changes, the number of requests grew by an order of magnitude. The infrastructure ran into bottlenecks: CPU spikes, growing queues, and more timeouts.

Solution: move to a hybrid model — critical keyword clusters are handled via an external API, the rest through an in-house Python script. The team introduced: regional residential solutions, rotation every 3–5 minutes, soft rate windows, and exponential backoff on retries.

Result: pipeline stability increased, timeouts dropped by 37%, and monitoring costs fell by 23% due to caching and smarter scheduling.

Initial setup: the product targets mobile traffic, so mobile rankings have priority over desktop.

Solution: dynamic mobile SERP proxy pool, custom user-agent lists, device-based session separation, and controlled request frequency.

Result: data now aligns better with real mobile SERPs, and report refresh time dropped by 28%.

Initial setup: several business lines, multiple regions, and the need for fast comparative analytics on top-10/top-20 results.

Solution: combination of datacenter proxies (for fast, cost-efficient snapshots) and residential ones (for deep checks on sensitive keywords and precise regional accuracy).

Result: time to complete initial competitive analysis shrank by 2.1× while keeping report depth intact.

The right provider is critical for stability and controlled costs.

Key evaluation criteria:

Providers at the level of Proxy-Seller meet these requirements: multiple proxy types, flexible rotation, clear pricing, an intuitive dashboard, and support for popular integrations. For “collect top-100 without degradation” tasks, this balance of latency, cost, and stability matters more than raw speed alone.

Pricing for a single IPv4 address starts from $1.60, with custom terms available for larger IP pools.

Split keywords into batches, align processing windows with IP rotation, and apply adaptive throttling when you see error spikes.
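A minimal sketch of batching with adaptive throttling might look like the following. The names `batches` and `AdaptiveThrottle`, the 20% error threshold, and the doubling/halving rule are all assumptions chosen for illustration; aligning batch windows with IP rotation is not shown.

```python
from itertools import islice


def batches(items, size):
    """Yield consecutive lists of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


class AdaptiveThrottle:
    """Lengthen the pause between batches when errors spike, shorten it when they ease."""

    def __init__(self, base_delay=1.0, max_delay=60.0, error_threshold=0.2):
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.error_threshold = error_threshold
        self.delay = base_delay

    def update(self, errors: int, total: int) -> float:
        """Adjust and return the delay based on the last batch's error rate."""
        error_rate = errors / total if total else 0.0
        if error_rate > self.error_threshold:
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            self.delay = max(self.delay / 2, self.base_delay)
        return self.delay


throttle = AdaptiveThrottle()
for batch in batches(["kw1", "kw2", "kw3", "kw4", "kw5"], size=2):
    errors = 0  # in a real run: count failed calls for this batch
    pause = throttle.update(errors, len(batch))
    print(batch, "-> next pause:", pause, "s")
```

In a real pipeline the loop would send the batch, count failures, and then sleep for the returned delay before the next batch.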

Cache stable positions; refresh volatile keywords and “borderline” pages more frequently.
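One way to express this rule is a cache with different lifetimes for stable and volatile keywords. The sketch below is an assumption-laden illustration: `RankCache` is a hypothetical in-memory class, the 48-hour TTL follows the 24–48h range mentioned earlier in the article, and the 6-hour TTL for volatile keywords is an arbitrary example value.

```python
import time

STABLE_TTL_S = 48 * 3600   # stable positions: upper end of the 24-48h range
VOLATILE_TTL_S = 6 * 3600  # volatile keywords: example value, tune per project


class RankCache:
    """In-memory cache of keyword positions with a per-entry expiry time."""

    def __init__(self, clock=time.time):
        self._clock = clock   # injectable so expiry can be tested
        self._entries = {}    # keyword -> (position, expires_at)

    def put(self, keyword: str, position: int, volatile: bool = False) -> None:
        ttl = VOLATILE_TTL_S if volatile else STABLE_TTL_S
        self._entries[keyword] = (position, self._clock() + ttl)

    def get(self, keyword: str):
        """Return the cached position, or None if it is missing or expired."""
        entry = self._entries.get(keyword)
        if entry is None:
            return None
        position, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[keyword]
            return None
        return position


cache = RankCache()
cache.put("buy proxies", 3)                      # stable keyword
cache.put("serp proxy price", 11, volatile=True) # borderline, refresh sooner
print(cache.get("buy proxies"))  # 3
```

A `None` from `get` is the signal to schedule a fresh SERP request; anything else can be served from the cache without spending a call.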

Track success rate, CAPTCHA frequency, average response time, and ranking stability.

Combine residential and mobile solutions to make results closer to real user experience and to support ad auditing.

Stay within search engines’ technical limits, configure rates and intervals carefully, and use retries with exponential backoff.
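Retries with exponential backoff can be sketched as follows. The helper names, the default of four retries, the 60-second cap, and the use of random jitter are assumptions for illustration; `fetch` stands for whatever function performs a single SERP request in your own parser.

```python
import random
import time


def backoff_delays(retries: int, base: float = 1.0, cap: float = 60.0) -> list[float]:
    """Upper bounds for each wait: base, 2*base, 4*base, ... limited by `cap`."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


def call_with_backoff(fetch, retries: int = 4, base: float = 1.0,
                      cap: float = 60.0, sleep=time.sleep):
    """Call `fetch()`; on failure wait a random time up to the current bound and retry.

    Catches every Exception for brevity; a real parser would retry only on
    transient errors such as timeouts or rate-limit responses.
    """
    delays = backoff_delays(retries, base, cap)
    for attempt in range(retries + 1):
        try:
            return fetch()
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the last error
            sleep(random.uniform(0, delays[attempt]))


print(backoff_delays(5))  # [1.0, 2.0, 4.0, 8.0, 16.0]
```

The random jitter spreads retries from many workers over time so they do not all hit the search engine again at the same moment.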

Logs, metrics, dashboards, and alerts are mandatory if you work under corporate SLAs and need to investigate incidents quickly.

Google’s decision to remove the &num=100 parameter made SERP monitoring significantly more complex. Collecting a top-100 list now requires more resources, more requests, and a more thoughtful infrastructure. Companies that rely on robust Google SERP proxies keep their SEO processes stable, minimize infrastructure costs, and maintain high-quality analytics.

High-quality SERP proxy servers are not just an auxiliary component — they are part of strategic data management. They support parser resilience, flexible processes, and a competitive edge in a landscape where search algorithms change constantly.

SERP proxies are servers that help you browse and collect data from Google’s search results without overload or temporary limits. They allow you to analyze rankings, retrieve top-100 results, and automate SERP monitoring.

Focus on speed, stability, rotation support, and geographic coverage. In many cases, residential or mobile solutions with pay-as-you-go pricing are a good starting point.

Yes, but for large-scale workloads it’s better to use an IP pool with rotation to avoid temporary restrictions and improve data accuracy.

Yes. Residential solutions tend to deliver the most natural results because they use IP addresses of real users, which makes them especially suitable for SERP monitoring.

For intensive workloads, it’s recommended to rotate IPs every 5–10 minutes or use automatic rotation to keep connections stable.
