Scrapy is a robust, high-level framework for web scraping and data extraction, well suited to tasks like data parsing, price monitoring, user behavior analysis, social media insights, and SEO analysis. It is built to handle large volumes of data efficiently and includes built-in mechanisms for managing HTTP requests, handling errors, and complying with robots.txt, all of which matter in complex, large-scale data collection projects. This review covers what Scrapy is, how it works, and the features it offers.

The Scrapy framework is a powerful open-source web scraping tool written in Python, designed for high-efficiency crawling and extracting structured data from websites. Scrapy can:

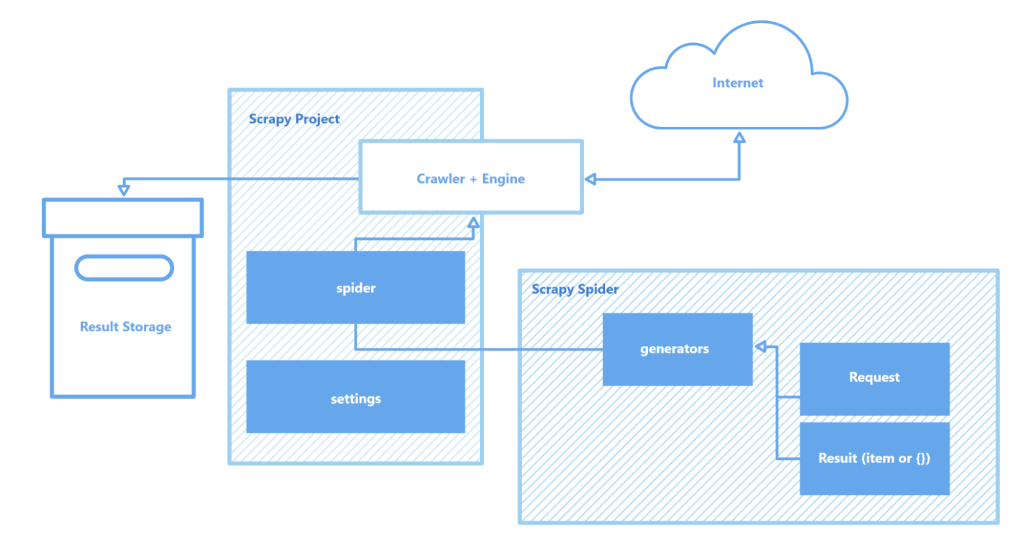

Scrapy operates through “spiders”: specialized crawlers that follow defined instructions to navigate web pages and harvest data. A spider is essentially a script that identifies and captures specific objects such as text, images, or links. Scrapy also provides an interactive shell for testing selectors and debugging spiders in real time, which speeds up setting up and tuning a crawler.

Key components of the Scrapy architecture include:

Overall, Scrapy stands out as one of the most robust and flexible web scraping tools available, suitable for everything from simple data extraction tasks to complex large-scale web mining projects.

This section highlights the key features of the Scrapy framework:

These attributes differentiate Scrapy from its competitors and establish it as a popular choice in the web scraping arena.

Scrapy is powered by Twisted, an open-source asynchronous networking engine. In a synchronous model, one task must finish before the next begins; Twisted instead lets Scrapy keep many requests in flight at once and handle each response as it arrives. As a result, spiders do not sit idle waiting on the network, which improves speed and efficiency in data collection, particularly for large-scale projects or when crawling several sites at the same time.
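How many requests run concurrently is controlled in the project's settings.py. The fragment below is a sketch with illustrative values, not recommendations: the setting names are standard Scrapy settings, but suitable numbers depend on the target site and the project.

```python
# settings.py (fragment) - illustrative values, tune per project

# Respect the robots.txt rules of the target site
ROBOTSTXT_OBEY = True

# Upper bound on requests in flight across all domains
CONCURRENT_REQUESTS = 16

# Upper bound on simultaneous requests to a single domain
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Seconds to wait between consecutive requests to the same site
DOWNLOAD_DELAY = 0.25

# Retry requests that fail with transient errors
RETRY_ENABLED = True
RETRY_TIMES = 2

# AutoThrottle adjusts the delay based on observed latency
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 10.0
```

Raising the concurrency limits speeds up a crawl but also increases load on the target server, so a delay or AutoThrottle is usually kept in place.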

The speed of Scrapy is further boosted by several factors:

Together, these features make Scrapy one of the fastest tools available for scraping and collecting data from many websites, which is valuable for tasks such as product price monitoring, job listing aggregation, news gathering, social media analysis, and academic research.

Scrapy’s modular architecture enhances its adaptability and extensibility, making it well-suited for a variety of complex data collection tasks. Its support for integration with various data stores such as MongoDB, PostgreSQL, and Elasticsearch, as well as queue management systems like Redis and RabbitMQ, allows for the effective handling of large data volumes. Additionally, Scrapy can integrate with monitoring or logging platforms like Prometheus or Logstash, enabling scalable and customizable scraper configurations for projects ranging from machine learning data collection to search engine development.
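Integration with a data store typically happens in an item pipeline. The sketch below uses SQLite from the Python standard library as a stand-in for the databases named above, so that it runs without any external service. The class name, table name, and `db_path` parameter are hypothetical.

```python
import sqlite3


class SQLitePipeline:
    """Item pipeline sketch: stores scraped quotes in an SQLite table."""

    def __init__(self, db_path=":memory:"):
        # db_path is a hypothetical parameter; ":memory:" keeps the demo self-contained
        self.db_path = db_path
        self.connection = None

    def open_spider(self, spider):
        # Called by Scrapy when the spider starts
        self.connection = sqlite3.connect(self.db_path)
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS quotes (text TEXT, author TEXT)"
        )

    def process_item(self, item, spider):
        # Called for every item the spider yields; must return the item
        self.connection.execute(
            "INSERT INTO quotes (text, author) VALUES (?, ?)",
            (item.get("text"), item.get("author")),
        )
        self.connection.commit()
        return item

    def close_spider(self, spider):
        # Called by Scrapy when the spider finishes
        self.connection.close()


# Standalone check without running a crawl (the spider argument is unused here)
pipeline = SQLitePipeline()
pipeline.open_spider(spider=None)
pipeline.process_item({"text": "Example quote", "author": "Example Author"}, spider=None)
rows = pipeline.connection.execute("SELECT text, author FROM quotes").fetchall()
print(rows)
pipeline.close_spider(spider=None)
```

In a real project the pipeline would be enabled through the `ITEM_PIPELINES` setting, for example `ITEM_PIPELINES = {"myproject.pipelines.SQLitePipeline": 300}`, assuming the class lives in the project's pipelines.py.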

Scrapy’s architecture consists of:

Furthermore, Scrapy’s ability to support custom modules for API interactions provides a robust framework for scaling and tailoring solutions to meet the demands of large-scale data processing and complex project requirements.

Another significant advantage of Scrapy is its portability. The framework supports multiple operating systems, including Windows, macOS, and Linux, making it versatile for use across various development environments. Installation is straightforward using the Python package manager (pip), and thanks to Scrapy's modular structure and flexible configuration, projects can easily be transferred between machines without significant changes.

Furthermore, Scrapy supports virtual environments, which isolate project dependencies and avoid conflicts with other installed packages. This feature is particularly valuable when working on multiple projects simultaneously or when deploying applications to a server, ensuring a clean and stable development environment.

Because you interact with Scrapy through the command line (CLI), it helps to work in a code editor with a built-in terminal, such as Visual Studio Code (VS Code) or a similar tool. This makes it easier to manage projects, run crawls, and configure spiders from one place.

Creating and running a project in Scrapy involves a series of straightforward steps:

Install Scrapy and create a new project:

    pip install scrapy
    scrapy startproject myproject

The command generates the following project structure:

    myproject/
        scrapy.cfg            # Project configuration file
        myproject/
            __init__.py
            items.py          # Data model definitions
            middlewares.py    # Middlewares
            pipelines.py      # Data processing
            settings.py       # Scrapy settings
            spiders/          # Spiders folder
                __init__.py

Create a spider in the spiders/ folder:

    import scrapy


    class QuotesSpider(scrapy.Spider):
        # Unique spider name, used by "scrapy crawl"
        name = "quotes"
        start_urls = ['http://quotes.toscrape.com/']

        def parse(self, response):
            # Each quote on the page sits in its own div.quote block
            for quote in response.css('div.quote'):
                yield {
                    'text': quote.css('span.text::text').get(),
                    'author': quote.css('span small::text').get(),
                }

Run the spider from the project directory:

    scrapy crawl quotes

Here, “quotes” is the name of the spider defined in the QuotesSpider class. Scrapy will execute the spider to crawl the specified URL and extract data according to your defined settings.

To save the extracted data to a file, add the -o option:

    scrapy crawl quotes -o quotes.json

Scrapy is a robust, free web scraping framework that gives developers comprehensive tools for automated data extraction and processing from web pages. Its asynchronous architecture and modular structure provide high speed and good scalability, and make it straightforward to extend functionality as needed. Integration with various libraries and data storage solutions, along with support for custom protocols, makes it easier to tailor the crawler to specific project requirements.

Scrapy is 100% open source and free to use. It operates under the BSD license, allowing you to use, modify, and distribute it without any cost. There are no hidden fees or subscriptions attached to Scrapy itself.

Scrapy itself has no paid tier for advanced features or enterprise support. If you need professional support, additional tools, or cloud services, consider contacting Zyte directly.

Zyte (formerly Scrapinghub), the company behind Scrapy, offers paid plans and subscription services through Scrapy Cloud. The platform provides benefits like:

Here’s what to keep in mind:

Knowing this helps you decide if you want to build your Scrapy projects solely in your local environment or leverage Zyte’s cloud tools for easier scaling and management.

When choosing a web scraping tool, it's essential to understand how Scrapy compares to alternatives. The Best Web Scrapers directory highlights various scraping frameworks, but you need to assess their strengths based on your task.

Here’s a quick breakdown of popular competitors and when to pick them:

Scrapy shines in several areas:

But Scrapy also has limitations:

Understanding these differences helps you pick the scraper that fits your project scope and skill level. You can also explore additional information about web scraping vs web crawling to get a clearer picture of how each approach works.

Here are the typical advantages and drawbacks that matter when working with Scrapy in Python projects.

Pros:

Cons:

One excellent proxy service to complement the Scrapy proxy setup is Proxy-Seller. It offers a broad range of proxy options, including residential, ISP, data center, and mobile proxies. These proxies support SOCKS5 and HTTP(S) with authentication via username/password or IP whitelisting.

Key practical features of Proxy-Seller for Scrapy users include:

Integrating Proxy-Seller with your Scrapy projects helps you avoid IP bans, bypass geo-restrictions, and overcome rate limits, improving your scraping’s reliability and scale.
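Scrapy's built-in HttpProxyMiddleware routes a request through whatever proxy is set in the request's `proxy` meta key, so rotation can be sketched as a small downloader middleware. The proxy endpoints and credentials below are placeholders rather than real Proxy-Seller values, and `FakeRequest` is a hypothetical stand-in that exists only so the example runs offline.

```python
from itertools import cycle


class RotatingProxyMiddleware:
    """Downloader middleware sketch: assigns proxies in round-robin order."""

    # Placeholder endpoints and credentials, not real Proxy-Seller values
    PROXIES = [
        "http://user:password@proxy1.example.com:8000",
        "http://user:password@proxy2.example.com:8000",
    ]

    def __init__(self):
        self._proxy_pool = cycle(self.PROXIES)

    def process_request(self, request, spider):
        # Scrapy's built-in HttpProxyMiddleware reads the "proxy" meta key
        request.meta["proxy"] = next(self._proxy_pool)
        # Returning None tells Scrapy to continue processing the request
        return None


class FakeRequest:
    """Minimal stand-in for scrapy.Request, used only for this offline demo."""

    def __init__(self):
        self.meta = {}


middleware = RotatingProxyMiddleware()
assigned = []
for _ in range(3):
    request = FakeRequest()
    middleware.process_request(request, spider=None)
    assigned.append(request.meta["proxy"])
print(assigned)
```

To activate such a middleware in a project, it would be registered in `DOWNLOADER_MIDDLEWARES` with an order value below 750 (the default position of HttpProxyMiddleware), for example `{"myproject.middlewares.RotatingProxyMiddleware": 610}`, assuming the class lives in the project's middlewares.py.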

Proxy-Seller sources IPs ethically, following official agreements to provide clean, non-blacklisted proxies compliant with GDPR and CCPA regulations. Their competitive pricing includes bulk and long-term rental discounts, plus flexible packages designed specifically for web scraping needs such as Scrapy proxy integration.

Using Proxy-Seller alongside Scrapy streamlines proxy management, reduces operational overhead, and enhances overall scraping performance. This makes it a practical solution for beginners and experienced developers aiming to build robust, large-scale Scrapy projects.
