A web scraping bot is a program that automatically extracts information from web pages for systematic data collection. Such software is essential when the volume of data is too large for manual processing or when regular updates are required, for example for price monitoring, review analysis, or tracking positions in search engine results.

A web scraping bot allows automation of tasks such as: accessing a website, retrieving the content of a page, extracting the required fragments, and saving them in the needed format. It is a standard tool in e-commerce, SEO, marketing, and analytics — wherever speed and accuracy of data processing are critical.

A scraper bot is a software agent that automatically extracts content from web pages for further processing. It can be part of a corporate system, run as a standalone script, or be deployed through a cloud platform. Its main purpose is to collect publicly available data at scale and in a structured form.

To better understand the concept, let’s look at the classification of tools used as scraper bots.

By access method to content:

By adaptability to website structure:

By purpose and architecture:

Read also: Best Web Scraping Tools in 2026.

Scraping bots are applied across various industries and tasks where speed, scalability, and structured information are critical.

Each of these use cases requires its own depth of data extraction and level of protection bypass. Web scraping bots are therefore adapted to the task and range from simple HTTP scripts to full-scale browser-based solutions with proxy support and anti-detection features.

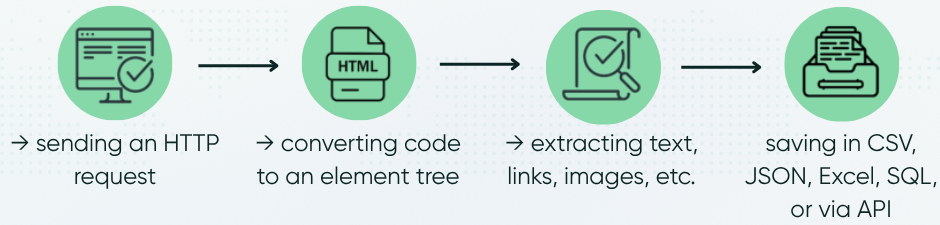

Web scraper bots operate according to a step-by-step scenario, where each stage corresponds to a specific technical action. Despite differences in libraries and programming languages, the basic logic is almost always the same.

Below is a more detailed step-by-step description with Python examples.

At the first stage, a web scraping bot initiates an HTTP request to the target URL and retrieves the HTML document. It’s important to set the correct User-Agent header to imitate the behavior of a regular browser.

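    import requests

    # Identify as a regular browser; some sites reject the default client string.
    headers = {'User-Agent': 'Mozilla/5.0'}
    url = 'https://books.toscrape.com/'

    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    html = response.text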

Here, the bot connects to the site and receives the raw HTML code of the page, as if it were opened in a browser.

To analyze the content, the HTML must be parsed — converted into a structure that is easier to work with. For this, libraries such as BeautifulSoup or lxml are typically used.

    from bs4 import BeautifulSoup

    # Parse the raw HTML into a navigable tree using the lxml parser.
    soup = BeautifulSoup(html, 'lxml')
    print(soup.prettify()[:1000])  # display the first 1000 characters of formatted HTML

Now, the HTML can be viewed as a tag tree, making it easy to extract the necessary elements.

Next, the web scraping bot identifies the fragments that need to be extracted: product names, prices, images, links, and more. Usually, CSS selectors or XPath are used.

    # Each book title is stored in the link inside .product_pod > h3.
    books = soup.select('.product_pod h3 a')
    for book in books:
        print(book['title'])

This code finds all book titles and outputs their names.

At this stage, the web scraping bot cleans and structures the data: removes unnecessary symbols, formats text, extracts attributes (for example, href or src), and compiles everything into a unified table.

    from urllib.parse import urljoin

    data = []
    for book in books:
        title = book['title']
        # Resolve the relative href against the page URL instead of concatenating strings.
        link = urljoin(url, book['href'])
        data.append({'Title': title, 'Link': link})

The data is transformed into a list of dictionaries, which is convenient for further analysis.
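The cleaning step mentioned above can be sketched with the standard library alone. This is a minimal illustration, not a complete normalizer: the helper names `clean_text` and `parse_price` and the sample record are hypothetical, and the price parser assumes a dot as the decimal separator and a comma as the thousands separator.

```python
import re

def clean_text(value):
    """Collapse runs of whitespace into single spaces and trim both ends."""
    return re.sub(r"\s+", " ", value).strip()

def parse_price(value):
    """Pull a decimal number out of a string such as '£51.77'; return None if absent."""
    # Assumption: commas are thousands separators and can be dropped.
    match = re.search(r"\d+(?:\.\d+)?", value.replace(",", ""))
    return float(match.group()) if match else None

# Hypothetical raw values as they might come out of the HTML.
raw = {"Title": "  A Light in\n the Attic ", "Price": "£51.77"}
record = {"Title": clean_text(raw["Title"]), "Price": parse_price(raw["Price"])}
print(record)
```

Keeping each rule in its own small function makes it easier to adjust one field's handling when a site changes its formatting.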

After extraction, the data is saved in the required format — CSV, JSON, Excel, a database, or transferred via API.

    import pandas as pd

    df = pd.DataFrame(data)
    df.to_csv('books.csv', index=False)  # index=False omits the row-number column

The collected data can then be analyzed in Excel or uploaded into a CRM.
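When pandas is not available, the same list of dictionaries can be serialized with the standard `csv` and `json` modules. The sketch below writes to an in-memory buffer so it runs without touching the disk; the sample rows and the helper name `to_csv_text` are placeholders for illustration.

```python
import csv
import io
import json

# Placeholder rows in the same shape as the list of dictionaries built earlier.
rows = [
    {"Title": "Book A", "Link": "https://example.com/a"},
    {"Title": "Book B", "Link": "https://example.com/b"},
]

def to_csv_text(rows):
    """Serialize a non-empty list of dictionaries to CSV text, header first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

csv_text = to_csv_text(rows)
json_text = json.dumps(rows, ensure_ascii=False, indent=2)

# To write a file instead, open it with newline="" and encoding="utf-8"
# and pass the file object to csv.DictWriter in place of the buffer.
print(csv_text)
```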

If the required data is spread across multiple pages, the scraper bot implements crawling: it follows links and repeats the process.

    from urllib.parse import urljoin

    next_page = soup.select_one('li.next a')
    if next_page:
        # The href is relative to the current page, so resolve it against that page's URL.
        next_url = urljoin(url, next_page['href'])
        print('Next page:', next_url)
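Repeating this step in a loop gives a simple crawler. The sketch below is one possible shape, under stated assumptions: `FAKE_SITE` is a hypothetical in-memory stand-in for real HTTP responses, `fetch` is any callable that returns HTML for a URL, and the "next" link is found with a regular expression only to keep the example dependency-free. A real bot would more likely keep using `soup.select_one('li.next a')`.

```python
import re
from urllib.parse import urljoin

# Hypothetical in-memory "site" standing in for real HTTP responses.
FAKE_SITE = {
    "https://example.com/": '<li class="next"><a href="catalogue/page-2.html">next</a></li>',
    "https://example.com/catalogue/page-2.html": '<li class="next"><a href="page-3.html">next</a></li>',
    "https://example.com/catalogue/page-3.html": "<p>last page</p>",
}

NEXT_LINK = re.compile(r'<li class="next">\s*<a href="([^"]+)"')

def crawl(start_url, fetch, max_pages=50):
    """Follow 'next' links from start_url and return the URLs visited, in order."""
    visited = []
    url = start_url
    # Stop on a missing link, a repeated URL, or the page limit.
    while url and url not in visited and len(visited) < max_pages:
        html = fetch(url)
        visited.append(url)
        match = NEXT_LINK.search(html)
        # Relative hrefs are resolved against the page they were found on.
        url = urljoin(url, match.group(1)) if match else None
    return visited

pages = crawl("https://example.com/", FAKE_SITE.__getitem__)
print(pages)
```

The `max_pages` limit and the check against already visited URLs guard against pagination loops.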

When working with websites where the content loads dynamically (via JavaScript), browser engines such as Selenium or Playwright are used. They allow the bot to interact with the DOM, wait for the required elements to appear, and perform actions — for example, clicking buttons or entering data into forms.

DOM (Document Object Model) is the structure of a web page formed by the browser from HTML code. It represents a tree where each element — a header, block, or image — is a separate node that can be manipulated programmatically.

Despite its efficiency, scraping real websites often runs into technical and legal obstacles.

To prevent automated access, websites implement different systems:

It is recommended to check out material that describes in detail how ReCaptcha bypassing works and which tools are best suited for specific tasks.

When scraping is accompanied by a high frequency of requests from a single source, the server may:

To handle such technical restrictions, platforms use rotating proxies, traffic distribution across multiple IPs, and request throttling with configured delays.
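Request throttling with configured delays can be sketched as a small helper that enforces a randomized gap between consecutive requests. The class name `Throttle` and its parameters are illustrative assumptions; the `sleep` and `clock` arguments are injectable so that the demonstration below records the pauses instead of actually waiting.

```python
import random
import time

class Throttle:
    """Enforce a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay=1.0, max_delay=3.0, sleep=time.sleep, clock=time.monotonic):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._sleep = sleep
        self._clock = clock
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Pause until a randomized gap since the previous call has elapsed."""
        if self._last is not None:
            gap = random.uniform(self.min_delay, self.max_delay)
            remaining = gap - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()

# Demonstration with a fake clock and a recording "sleep", so nothing really waits.
pauses = []
throttle = Throttle(min_delay=0.5, max_delay=1.5, sleep=pauses.append, clock=lambda: 0.0)
for _ in range(3):
    throttle.wait()  # a real bot would send its HTTP request right after this call
print(len(pauses))
```

With the default arguments, `Throttle().wait()` uses `time.sleep` and `time.monotonic`, so calling it before each request spaces the requests out in real time.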

Some resources load data using JavaScript after the initial HTML has already been delivered, or based on user actions such as scrolling.

In such cases, browser engines such as Selenium or Playwright are required.

These allow interaction with the DOM in real time: waiting for elements to appear, scrolling pages, executing scripts, and extracting data from an already rendered structure.
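As an illustration, waiting for a dynamically loaded element might look like the following with Playwright's synchronous API. This is a sketch, assuming Playwright and a Chromium build are installed (`pip install playwright`, then `playwright install chromium`); the function name `fetch_rendered_html`, the URL, and the selector in the usage comment are hypothetical.

```python
def fetch_rendered_html(url, selector, timeout_ms=10_000):
    """Load a page in headless Chromium and return its HTML once `selector` appears."""
    # Imported lazily so this module can be loaded without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Block until the JavaScript-rendered element exists in the DOM.
            page.wait_for_selector(selector, timeout=timeout_ms)
            return page.content()  # HTML of the rendered DOM, not the raw response
        finally:
            browser.close()

# Example call (hypothetical URL and selector):
# html = fetch_rendered_html("https://example.com/products", "div.product-card")
```

The returned HTML can then be passed to the same parsing code used for static pages.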

Website developers may change:

Such updates can render previous parsing logic inoperative or cause extraction errors.

To maintain stability, developers implement flexible extraction schemes and fallback algorithms, choose selectors (CSS or XPath) that depend on stable attributes rather than page layout, and regularly test and update their parsers.
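One way to implement a fallback scheme is to try several selectors in order of preference and take the first that matches. The sketch below uses BeautifulSoup's `select_one`; the selector list, the helper name `select_first`, and both HTML fragments are made up for illustration.

```python
from bs4 import BeautifulSoup

# Candidate selectors, ordered from most to least preferred (hypothetical).
TITLE_SELECTORS = ["h1.product-title", "[data-testid='title']", "h1"]

def select_first(soup, selectors):
    """Return the stripped text of the first selector that matches, else None."""
    for selector in selectors:
        node = soup.select_one(selector)
        if node is not None:
            return node.get_text(strip=True)
    return None

# The same title in an "old" and a "new" page layout.
old_layout = BeautifulSoup('<h1 class="product-title">Blue Mug</h1>', "html.parser")
new_layout = BeautifulSoup('<div><h1 data-testid="title"> Blue Mug </h1></div>', "html.parser")

print(select_first(old_layout, TITLE_SELECTORS))
print(select_first(new_layout, TITLE_SELECTORS))
```

Returning `None` when nothing matches lets the caller log the failure, which is often the first sign that the site's markup has changed.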

Automated data collection may conflict with a website’s terms of service. Violating these rules poses particular risks in cases of commercial use or redistribution of collected data.

Before starting any scraping activity, it is important to review the service’s terms. If an official API is available, its use is the preferred and safer option.

The legality of using scraping bots depends on jurisdiction, website policies, and the method of data extraction. Three key aspects must be considered:

A detailed breakdown of the legal side can be found in the article: Is Web Scraping Legal?

Creating a scraping bot starts with analyzing the task. It is important to clearly understand what data needs to be extracted, from where, and how frequently.

Python is the most popular language for web scraping due to its ready-to-use libraries, concise syntax, and convenience for working with data. Therefore, let’s consider the general process using Python as an example.

Commonly used libraries include requests, BeautifulSoup, lxml, Scrapy, Selenium, and Playwright.

A finished solution can be implemented as a CLI tool or as a cloud-based service.
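For the CLI variant, the entry point could be sketched with the standard `argparse` module. The option names and defaults below (`--output`, `--delay`, `--max-pages`) are assumptions chosen for illustration, not a fixed interface.

```python
import argparse

def build_parser():
    """Describe the command-line options of a hypothetical scraper."""
    parser = argparse.ArgumentParser(description="Scrape a catalogue and save the results.")
    parser.add_argument("url", help="start URL to scrape")
    parser.add_argument("-o", "--output", default="books.csv", help="output CSV path")
    parser.add_argument("--delay", type=float, default=1.0, help="seconds between requests")
    parser.add_argument("--max-pages", type=int, default=10, help="stop after this many pages")
    return parser

# Parse an explicit argument list here; a real script would call parse_args() with no arguments.
args = build_parser().parse_args(["https://books.toscrape.com/", "--delay", "2.5"])
print(args.url, args.output, args.delay, args.max_pages)
```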

Essential components include:

The process of how to build a web scraping bot is explained in detail in this article.

A scraping bot automates data collection, giving quick access to information from external sources and supporting scalable monitoring and real-time analytics. It is important to comply with platform restrictions, distribute the workload properly, and consider the legal aspects of working with data.

We offer a wide range of proxies for web scraping. Our selection includes IPv4, IPv6, ISP, residential, and mobile solutions.

For large-scale scraping of simple websites, IPv4 is sufficient. If stability and high speed are required, use ISP proxies. For stable performance under geolocation restrictions and platform technical limits, residential or mobile proxies are recommended. Mobile proxies provide the highest anonymity and resilience against reCAPTCHA because they use real mobile operator IPs.

A parser processes already loaded HTML, while a scraping bot independently loads pages, manages sessions, repeats user actions, and automates the entire cycle.

Yes. They help distribute requests across different IP addresses, which improves scalability, enables data collection from multiple sites in parallel, and ensures stable operation within platform-imposed technical restrictions.

It is recommended to use IP rotation, delays between requests, proper User-Agent settings, and session management to reduce detection risks.
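A minimal sketch of rotating proxies and User-Agent strings is shown below. The proxy addresses and User-Agent values are placeholders, and `next_request_settings` is a hypothetical helper; it only builds keyword arguments in the shape accepted by `requests.get` (`proxies`, `headers`, `timeout`) and sends nothing itself.

```python
from itertools import cycle

# Placeholder values; substitute real proxy endpoints and full User-Agent strings.
PROXIES = ["http://proxy-1.example:8080", "http://proxy-2.example:8080"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]

_proxy_pool = cycle(PROXIES)
_agent_pool = cycle(USER_AGENTS)

def next_request_settings():
    """Return request keyword arguments using the next proxy and User-Agent in rotation."""
    proxy = next(_proxy_pool)
    return {
        "proxies": {"http": proxy, "https": proxy},
        "headers": {"User-Agent": next(_agent_pool)},
        "timeout": 10,
    }

settings = [next_request_settings() for _ in range(3)]
# With requests installed: requests.get(url, **next_request_settings())
print([s["proxies"]["http"] for s in settings])
```

Round-robin rotation is the simplest policy; it can be combined with delays between requests so that no single IP sends requests too frequently.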

The most popular choice is Python, with libraries such as requests, BeautifulSoup, Scrapy, and Selenium. Node.js (Puppeteer) and Java (HtmlUnit) are also commonly used.
