ParseHub is a web scraping tool designed to extract data from websites efficiently, even for users without prior programming skills. It employs machine learning algorithms to navigate and interpret dynamic websites that use JavaScript and AJAX. ParseHub handles various data types and can manage sites that require user authentication or specific inputs to access information. Now let's take a closer look at the tool's features, interface, pricing, and use cases.

The versatility of ParseHub makes it a popular choice across multiple industries:

Moreover, ParseHub's applications extend to other sectors like SEO, e-commerce, and reputation management, showcasing its broad utility.

ParseHub is equipped with a robust array of features, making it highly versatile for executing virtually any web scraping task. Notably, it integrates machine learning algorithms that recognize patterns in data and web page structures, simplifying the configuration of scraping tasks and enhancing the precision of data extraction. Additionally, ParseHub offers a visual interface that allows users to easily create and configure projects, further adding to its user-friendly appeal. Next, we will explore the key features of ParseHub in more detail.

Automation in ParseHub consists of two main components: the API and the task scheduler.

Together, these features create a robust automation system within ParseHub, empowering users to efficiently scale and optimize their data collection efforts.
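As a rough illustration of the API side, the sketch below builds (but does not send) a request that starts a project run. The base URL, the `/projects/{token}/run` path, and the `api_key` and `start_url` parameters reflect my reading of ParseHub's public API v2 documentation and should be checked against the current docs. The function name and the token values are placeholders.

```python
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request

# Assumed base URL for ParseHub's API v2; verify against the official docs.
API_BASE = "https://www.parsehub.com/api/v2"


def build_run_request(project_token: str, api_key: str,
                      start_url: Optional[str] = None) -> Request:
    """Build (without sending) a POST request that starts a run of one project."""
    params = {"api_key": api_key}
    if start_url is not None:
        # Optional override of the page the project starts from.
        params["start_url"] = start_url
    body = urlencode(params).encode("utf-8")
    url = f"{API_BASE}/projects/{project_token}/run"
    return Request(url, data=body, method="POST")


if __name__ == "__main__":
    # Placeholder credentials; real values are shown in the account settings.
    req = build_run_request("PROJECT_TOKEN", "API_KEY", "https://example.com/catalog")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Calling a script like this from an external scheduler such as cron is one way to trigger runs on a timetable when the built-in task scheduler is not available on a plan.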

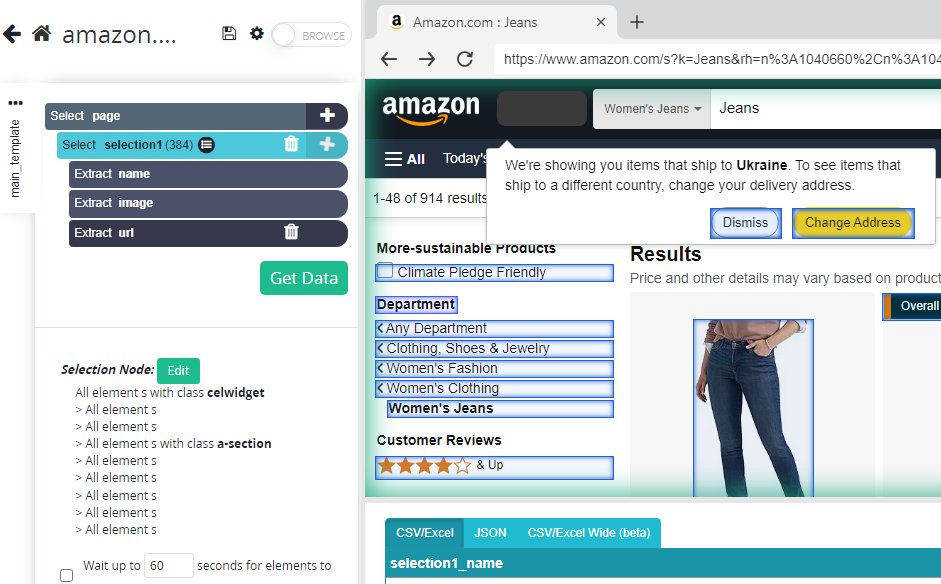

ParseHub provides tools for scalable, efficient data collection from interlinked web pages. Users can set up scraping projects that automatically follow a website’s internal links, extract data from each page encountered, and consolidate it into a single dataset. The platform also handles dynamically generated pages that use JavaScript and AJAX, which makes it possible to scrape complex websites effectively.

Additionally, ParseHub allows users to configure various interactions on the site, including:

These advanced automation features enable a thorough and accurate analysis of data structures. This capability ensures not only the effective extraction of content but also its detailed structuring and classification, which is vital for comprehensive data analysis.

ParseHub supports exporting data in several popular formats, including Excel and JSON files, and also makes results available through an API.

Together, these export mechanisms significantly streamline the integration and analysis of scraped data, enhancing the overall utility of the ParseHub platform for a wide range of professional applications.
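To show how a JSON export might feed into other tools, here is a minimal sketch that flattens one list of records into CSV using only the standard library. The shape of the export (one top-level key per selection, each holding a list of records) and the `product` key are assumptions made for illustration. A real export depends on how the project's selections are named.

```python
import csv
import io
import json

# Hypothetical export: one top-level key per selection, each a list of records.
SAMPLE_EXPORT = """
{"product": [
  {"name": "Kettle", "price": "$24.99"},
  {"name": "Toaster", "price": "$39.50", "url": "https://example.com/toaster"}
]}
"""


def selection_to_csv(export_json: str, selection: str) -> str:
    """Flatten one list of records from a JSON export into CSV text."""
    records = json.loads(export_json).get(selection, [])
    # Union of keys in first-seen order, because records may omit fields.
    columns = list(dict.fromkeys(key for record in records for key in record))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="",
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    print(selection_to_csv(SAMPLE_EXPORT, "product"))
```

Missing fields are written as empty cells, so rows stay aligned even when a page lacked one of the values.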

ParseHub’s pricing covers a range of budgets, and a free version of the tool makes it accessible to a broader audience. We will now examine the available subscription options in more detail.

The free plan offers access to the basic features of the parser but comes with certain limitations: it allows parsing of up to 200 pages per project, which takes about 40 minutes, and the extracted data is stored for just 14 days. This plan is ideal for those looking to evaluate the tool’s capabilities.

The “Standard” plan enables parsing up to 10,000 pages within a single project. Starting from this tier, users can integrate third-party services such as Dropbox and Amazon S3. It also includes IP address configuration and rotation, as well as the execution of deferred tasks. The “Standard” plan costs $189 per month.

Geared toward more advanced requirements, the “Professional” plan includes all the features of the Standard plan and allows an unlimited number of pages per project. Additional benefits include faster scraping (200 pages in 2 minutes) and priority online support. The “Professional” plan is priced at $599 per month.

Designed for corporate clients and handling complex, large-scale tasks, the “ParseHub Plus” plan offers full customization of the parser to meet specific needs, along with premium online support available at any time. Pricing and terms for this plan are negotiated directly with a ParseHub manager.

| Plan | Everyone | Standard | Professional | ParseHub Plus |
|---|---|---|---|---|
| Price per month | $0 | $189 | $599 | Negotiable |
| Pages per project | 200 | 10,000 | Unlimited | Unlimited |
| Data storage period | 14 days | 14 days | 30 days | Unlimited |
| Dropbox and Amazon S3 integration | No | Yes | Yes | Yes |
| Proxy integration | No | Yes | Yes | Yes |
| Task scheduler | No | Yes | Yes | Yes |

A 15% discount applies to orders covering a period of 3 months or more.
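The effect of the discount is easy to work out. The sketch below assumes the 15% is taken off the whole order total once the period reaches three months, which the pricing description implies but does not spell out. The function and constant names are illustrative.

```python
from decimal import Decimal

# Monthly prices for the two fixed-price paid plans.
MONTHLY_PRICE = {"Standard": Decimal("189"), "Professional": Decimal("599")}
DISCOUNT = Decimal("0.15")       # assumed to apply to the whole order total
MIN_MONTHS_FOR_DISCOUNT = 3


def total_cost(plan: str, months: int) -> Decimal:
    """Total price of an order, with the discount applied when it qualifies."""
    subtotal = MONTHLY_PRICE[plan] * months
    if months >= MIN_MONTHS_FOR_DISCOUNT:
        subtotal *= (1 - DISCOUNT)
    return subtotal.quantize(Decimal("0.01"))


if __name__ == "__main__":
    print(total_cost("Standard", 3))      # 481.95 instead of 567.00
    print(total_cost("Professional", 3))  # 1527.45 instead of 1797.00
```

Under that assumption, three months of the Standard plan cost $481.95 rather than $567, and three months of the Professional plan cost $1,527.45 rather than $1,797.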

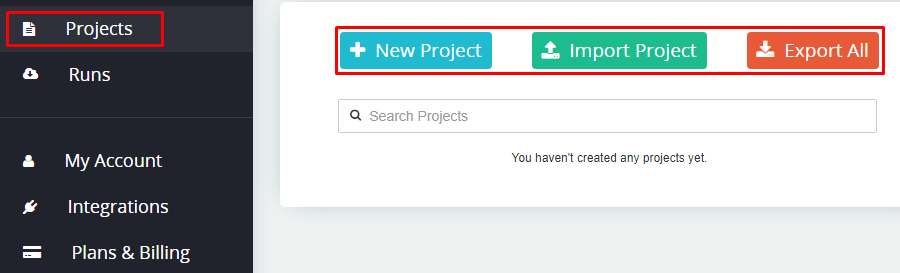

The ParseHub interface is designed to be minimalistic, focusing on simplified management and project execution. All controls are conveniently positioned on the left panel. We will explore the available tabs in more detail below.

In this tab, users are presented with several interactive options:

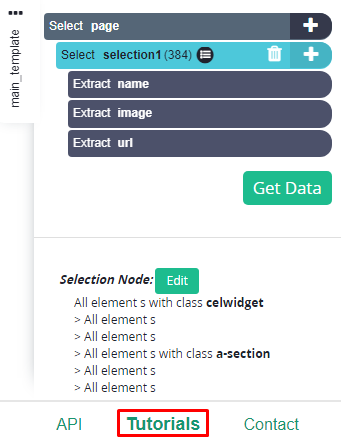

Upon selecting “New Project”, a new workspace will open where the target site's link can be inserted to begin the project setup.

Additionally, at the bottom of the page, users can find the “Tutorials” button which provides access to detailed instructions on how to use the tool effectively. There is also an option to contact online support for any immediate assistance or queries.

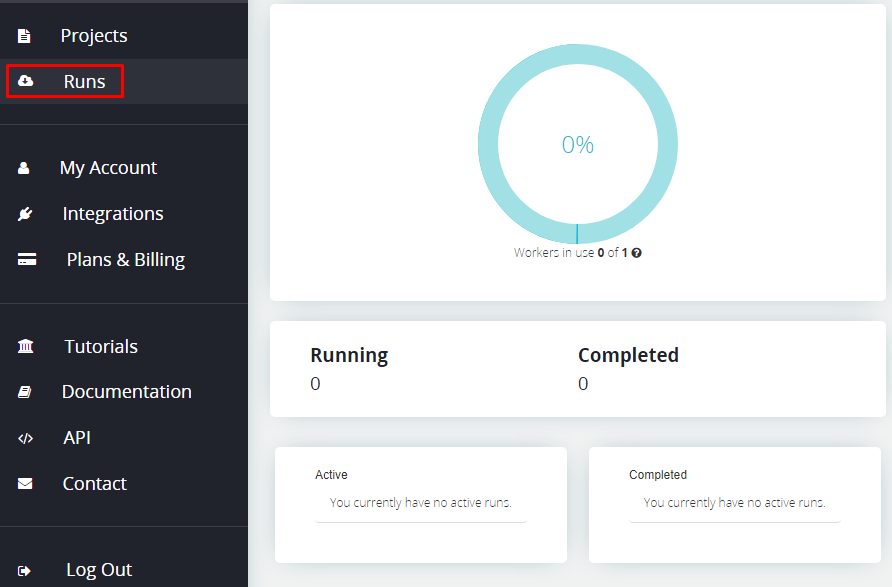

This tab allows users to monitor the status of their projects, showing both the number of projects launched and those that have been successfully completed.

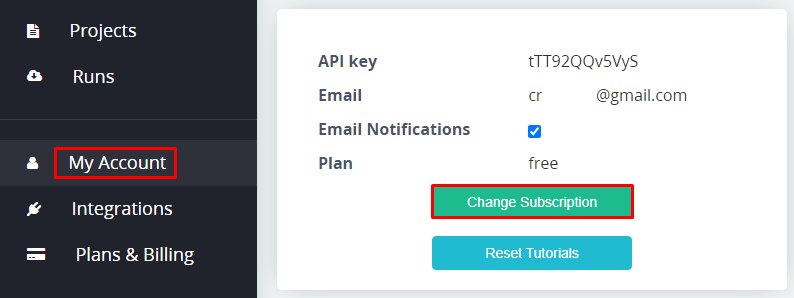

This section displays details about the user's account, including the active subscription and API key. Users can also change their subscription plan, activate email notifications, and reset built-in tips from here.

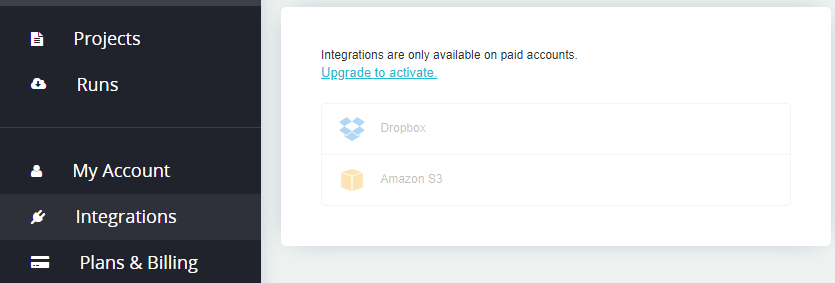

This tab provides options to manage integrations with third-party services like Dropbox and Amazon S3, which are available only with paid subscription plans.

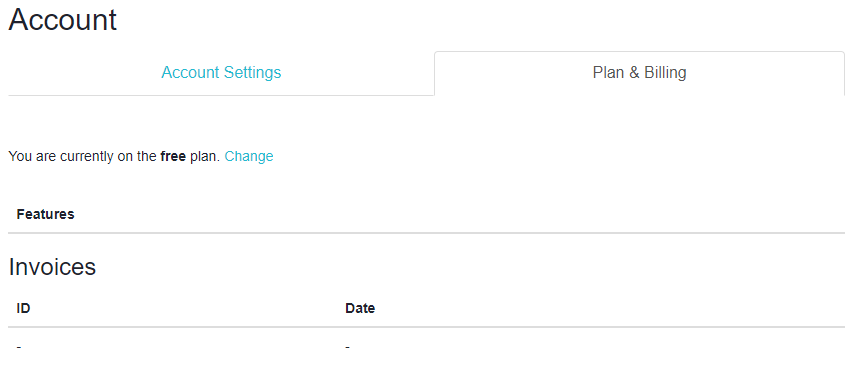

Clicking on this item redirects users to the ParseHub website, where they can modify their subscription plan and view payment history.

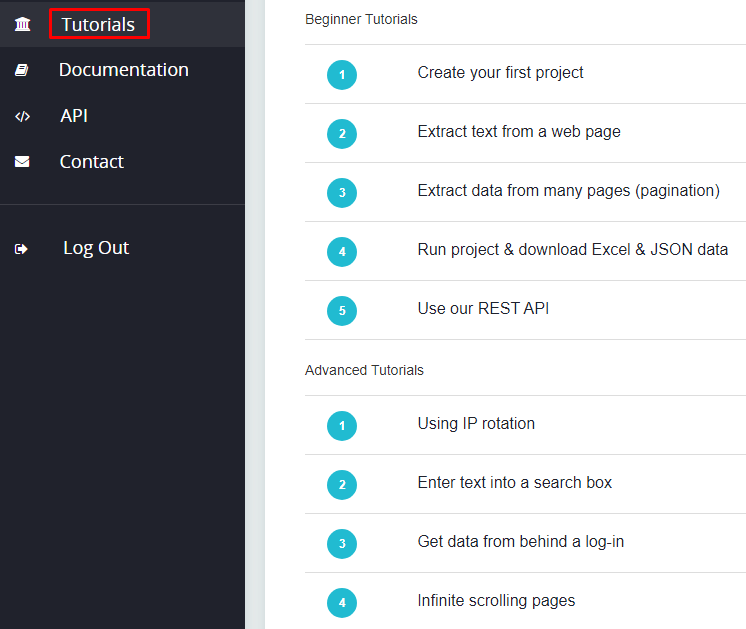

The “Tutorials” section is a valuable resource that houses a comprehensive collection of guides. These tutorials cover a range of topics from project creation to advanced settings like proxy server rotation.

Selecting the “Documentation” tab redirects users to a page with documents on using the parser’s tools, including detailed API documentation.

Similar to the “Documentation” tab, clicking on “API” takes the user to reference material that describes the API’s functionality in detail.

This tab allows users to reach out to support with any queries by filling out a contact form on the site. Responses are typically sent via email, facilitating direct communication with the support team.

Using proxy servers during the data parsing process is crucial for several reasons:

It is advisable to use only private proxy servers when working with parsers. Private proxies tend to be more reliable and are generally more trusted by target websites. Here’s a detailed guide on how to integrate proxies into ParseHub.

You’ll find ParseHub effective across many industries:

Common projects include:

ParseHub suits both small projects and enterprise workloads. It handles simple tasks on your desktop and scales up through cloud-based scraping and API integration, which lets you automate repetitive scraping workflows. Marketers might run projects to track competitors or leads, analysts can automate data collection for reports, and developers can integrate ParseHub outputs into larger systems. Automating otherwise manual data gathering can save hours of work each week.

To balance data freshness with server load, schedule scraping runs based on how often your target data changes. Avoid running scrapes more frequently than needed.
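One simple way to pick a run frequency is to look at how often the target data has changed in the past. The sketch below suggests an interval equal to the median gap between observed changes, with a lower bound so that runs are never scheduled too aggressively. This heuristic, the function name, and the one-hour floor are illustrative assumptions and not something ParseHub prescribes.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Sequence


def suggested_interval(change_times: Sequence[datetime],
                       floor: timedelta = timedelta(hours=1)) -> timedelta:
    """Suggest a run interval from the median gap between observed changes."""
    if len(change_times) < 2:
        raise ValueError("need at least two observed changes")
    ordered = sorted(change_times)
    gaps = [(later - earlier).total_seconds()
            for earlier, later in zip(ordered, ordered[1:])]
    # Never suggest running more often than the floor allows.
    return max(timedelta(seconds=median(gaps)), floor)


if __name__ == "__main__":
    observed = [
        datetime(2024, 1, 1, 0, 0),
        datetime(2024, 1, 2, 0, 0),
        datetime(2024, 1, 3, 6, 0),
        datetime(2024, 1, 4, 6, 0),
    ]
    print(suggested_interval(observed))
```

The resulting interval can then be entered in the task scheduler or used by an external job that starts runs.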

Manage proxies and IP rotation to reduce blocks and CAPTCHAs. Proxy-Seller’s proxy integration with ParseHub covers datacenter, residential, ISP, and mobile proxies. Flexible rotation and geo-targeting help mimic natural user behavior and keep runs stable.
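ParseHub configures rotation through its own settings, so the following is only a sketch of what round-robin rotation means, for instance when checking a proxy pool outside the tool. The proxy addresses are hypothetical and come from a documentation-only IP range. The function name is illustrative.

```python
from itertools import cycle, islice
from typing import Iterator, Sequence

# Hypothetical endpoints in a documentation-only IP range; substitute real ones.
PROXY_POOL = [
    "http://203.0.113.10:8000",
    "http://203.0.113.11:8000",
    "http://203.0.113.12:8000",
]


def rotating_proxies(pool: Sequence[str],
                     requests_per_proxy: int = 1) -> Iterator[str]:
    """Yield proxies round-robin, reusing each for a fixed number of requests."""
    if not pool:
        raise ValueError("proxy pool is empty")
    if requests_per_proxy < 1:
        raise ValueError("requests_per_proxy must be at least 1")
    for proxy in cycle(pool):
        for _ in range(requests_per_proxy):
            yield proxy


if __name__ == "__main__":
    # Proxy to use for each of the first five requests, two requests per proxy.
    for proxy in islice(rotating_proxies(PROXY_POOL, requests_per_proxy=2), 5):
        print(proxy)
```

Raising `requests_per_proxy` keeps a session on one address for longer, while a value of 1 switches address on every request.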

Minimize resource consumption by:

Before full runs, use ParseHub’s live preview and debugging tools to test and refine extraction logic. This reduces errors and wasted runs.

After scraping, combine outputs with data cleaning tools like OpenRefine or Python’s Pandas. These tools help you remove duplicates, fix formatting, and organize data for better usability.
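The kind of cleanup described above can be sketched in plain Python, as shown below. In pandas the same steps correspond roughly to `Series.str.strip` and `DataFrame.drop_duplicates`. The sample rows and field names are made up for illustration.

```python
# Hypothetical scraped rows with stray whitespace, a duplicate, and mixed formats.
RAW_ROWS = [
    {"name": "  Kettle ", "price": "$24.99"},
    {"name": "Kettle", "price": "$24.99"},
    {"name": "Toaster", "price": "39.50 "},
]


def clean_rows(rows):
    """Normalize whitespace, parse prices as numbers, and drop duplicate rows."""
    seen = set()
    cleaned = []
    for row in rows:
        name = " ".join(row["name"].split())           # collapse extra spaces
        price = float(row["price"].strip().lstrip("$"))  # "$24.99" -> 24.99
        key = (name.lower(), price)
        if key in seen:
            continue  # same item and price already kept
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


if __name__ == "__main__":
    for row in clean_rows(RAW_ROWS):
        print(row)
```

Deduplicating after normalization matters: the first two sample rows only become identical once the whitespace is stripped.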

Follow this checklist for efficient scraping with ParseHub:

Applying these steps ensures smoother operations and higher quality data at scale.

If ParseHub doesn’t fit your needs, consider these alternatives:

Here’s a practical pros and cons table based on your skills, project complexity, and budget:

| Category | Tools (Examples) | Pros | Cons |
|---|---|---|---|
| No-code Tools | ParseHub, Octoparse, WebHarvy | Easy for beginners, faster setup | Limited deep customization |
| Enterprise Tools | Content Grabber | Powerful, suited for complex workflows and large scale | Expensive |
| RPA Platforms | UiPath, Automation Anywhere | Great for integrating scraping with business processes | Need automation knowledge |
| Coding Frameworks | Scrapy, Selenium | Free/open-source, high customization | Steep learning curve |

Choose ParseHub if you want a no-code, beginner-friendly scraper with cloud support. Pick code-based tools when your projects require custom logic or full control. ParseHub’s pricing tends to suit small and mid-sized users; consider alternatives when you need specific features or larger-scale automation.

This comparison helps you pick the best tool for your scraping goals without wasting time or budget.

It's worth noting the simplicity and ease of configuring the parser. Setting up a new project in ParseHub is a quick process, often taking just a few minutes. Moreover, the ability to integrate with third-party resources can greatly enhance the quality of data collection, while the proper configuration of proxies can help avoid potential blocks.
