As useful as this approach to data gathering is, many websites frown upon it, and scraping can have consequences, such as a ban on our IP address.

On a positive note, proxy services help us avoid this. They let us use a different IP address while gathering data online, and using multiple proxies is even better. Rotating between several proxies while scraping makes our interaction with the website appear more random and adds a layer of protection.

The target website for this guide is an online bookstore that imitates an e-commerce site for books. Each book on it has a name, a price, and an availability status. This guide focuses on rotating proxies rather than on organizing the scraped data, so the results are simply printed to the console.

Before we start coding the functions that rotate the proxies and scrape the website, we need to install and import a few Python modules.

```bash
pip install requests beautifulsoup4 lxml
```

The command above installs three of the modules this scraping script needs. requests sends HTTP requests to the website, beautifulsoup4 extracts information from the page's HTML returned by requests, and lxml is the HTML parser BeautifulSoup uses here.

In addition, we need two built-in modules: threading, to test several proxies at the same time, and json, to read the proxy list from a JSON file.

```python
import requests
import threading
from requests.auth import HTTPProxyAuth
import json
from bs4 import BeautifulSoup
import lxml
import time

url_to_scrape = "https://books.toscrape.com"

valid_proxies = []
book_names = []
book_price = []
book_availability = []
next_button_link = ""
```

Building a scraping script that rotates proxies means we need a list of proxies to choose from during rotation. Some proxies require authentication, and others do not. We must create a list of dictionaries with proxy details, including the proxy username and password if authentication is needed.

The best approach to this is to put our proxy information in a separate JSON file organized like the one below:

```json
[
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  }
]
```

In the “proxy_address” field, enter the IP address and port, separated by a colon. In the “proxy_username” and “proxy_password” fields, provide the username and password for authorization.

Above is the content of a JSON file with 4 proxies for the script to choose from. The username and password can be empty, indicating a proxy that requires no authentication.
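Each entry in the file eventually has to become the `proxies` mapping that requests expects. The sketch below shows one way to do that. It assumes the proxies speak plain HTTP (hence the `http://` scheme), and `to_requests_proxies` is a hypothetical helper name. When credentials are present, they are percent-encoded and embedded in the proxy URL. That is how requests authenticates to a proxy for both HTTP and HTTPS targets.

```python
from urllib.parse import quote


def to_requests_proxies(entry: dict) -> dict:
    """Convert one proxy-list.json entry into a requests-style proxies mapping.

    Hypothetical helper: assumes the proxies speak plain HTTP (http:// scheme).
    """
    address = entry["proxy_address"]  # "host:port"
    username = entry.get("proxy_username", "")
    password = entry.get("proxy_password", "")
    if username and password:
        # Percent-encode so characters like "@" or ":" in credentials don't break the URL
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    else:
        auth = ""  # empty fields mean the proxy needs no authentication
    proxy_url = f"http://{auth}{address}"
    # Same proxy for both schemes; requests looks up the "https" key for https:// URLs
    return {"http": proxy_url, "https": proxy_url}


entry = {"proxy_address": "203.0.113.7:8080", "proxy_username": "user", "proxy_password": "p@ss"}
print(to_requests_proxies(entry))
```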

```python
from urllib.parse import quote


def verify_proxies(proxy: dict):
    address = proxy['proxy_address']
    if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":
        # Embed the (URL-encoded) credentials in the proxy URL; requests uses them
        # to authenticate with the proxy for both HTTP and HTTPS targets
        user = quote(proxy['proxy_username'], safe="")
        password = quote(proxy['proxy_password'], safe="")
        proxy_url = f"http://{user}:{password}@{address}"
    else:
        proxy_url = f"http://{address}"

    try:
        # The target is HTTPS, so an "https" entry is required; with only "http",
        # requests would connect directly and the proxy would never be tested
        res = requests.get(
            url_to_scrape,
            proxies={"http": proxy_url, "https": proxy_url},
            timeout=10,  # don't let a dead proxy hang the check
        )
        if res.status_code == 200:
            valid_proxies.append(proxy)
            print(f"Proxy Validated: {address}")
    except requests.RequestException:
        print("Proxy Invalidated, Moving on")
```

As a precaution, this function ensures that the proxies provided are active and working. We call it for each dictionary in the JSON file. It sends a GET request to the website through that proxy, and if the response has a status code of 200, it adds the proxy to valid_proxies. This is the variable we created earlier to hold the working proxies from the file. If the request fails, the error is caught and execution continues.
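Starting one thread per proxy and then sleeping for a fixed time can miss slow checks. The standard library's `concurrent.futures.ThreadPoolExecutor` is an alternative: it runs the checks concurrently and returns only once all of them have finished. The sketch below assumes the check is a function that returns True or False and catches its own network errors. Here it is a hypothetical stand-in called `fake_check`; in the script it would wrap the GET request made in verify_proxies().

```python
from concurrent.futures import ThreadPoolExecutor


def validate_all(proxies, check, max_workers=8):
    """Run check(proxy) for every proxy concurrently and return the ones that pass.

    pool.map() yields results in input order and the with-block waits for every
    check, so no fixed sleep is needed. check() should catch its own exceptions,
    because pool.map() re-raises them when results are collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]


# Hypothetical stand-in for a real network check
def fake_check(proxy):
    return proxy["proxy_address"].endswith(":8080")


candidates = [
    {"proxy_address": "203.0.113.1:8080"},
    {"proxy_address": "203.0.113.2:3128"},
    {"proxy_address": "203.0.113.3:8080"},
]
print(validate_all(candidates, fake_check))
```

Unlike appending to a shared list from several threads, this keeps the valid proxies in the same order as the JSON file.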

BeautifulSoup needs the page's HTML to extract the data, so we created request_function(). It takes a URL and a proxy of choice and returns the HTML as text, or None if the request fails. Passing a different proxy on each call routes each request through a different IP address, which is what rotates the proxies.

```python
from urllib.parse import quote


def request_function(url, proxy):
    address = proxy['proxy_address']
    if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":
        # Credentials go into the proxy URL so they also work for HTTPS targets
        user = quote(proxy['proxy_username'], safe="")
        password = quote(proxy['proxy_password'], safe="")
        proxy_url = f"http://{user}:{password}@{address}"
    else:
        proxy_url = f"http://{address}"

    try:
        response = requests.get(
            url,
            proxies={"http": proxy_url, "https": proxy_url},  # route both schemes via the proxy
            timeout=10,
        )
        if response.status_code == 200:
            return response.text
        print(f"Switching Proxies, unexpected status code: {response.status_code}")
    except requests.RequestException as err:
        print(f"Switching Proxies, URL access was unsuccessful: {err}")

    return None
```

data_extract() pulls the data we need out of that HTML. It finds the HTML elements that hold the book information, namely each book's name, price, and availability. It also extracts the link to the next page.

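The next-page link is tricky because its format changes. On the home page, the href is catalogue/page-2.html, while on catalogue pages it is just page-3.html. The function therefore checks for "catalogue" before building the absolute URL. Finally, it loops through the books, collects each name, price, and availability, and returns the next-page link. We use that link to retrieve the HTML of the following page.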

```python
def data_extract(response):
    soup = BeautifulSoup(response, "lxml")
    books = soup.find_all("li", class_="col-xs-6 col-sm-4 col-md-3 col-lg-3")

    for each in books:
        book_names.append(each.find("img").get("alt"))  # the image's alt text holds the title
        book_price.append(each.find("p", class_="price_color").text)
        book_availability.append(each.find("p", class_="instock availability").text.strip())

    next_item = soup.find("li", class_="next")
    if next_item is None:  # the last page has no "next" button
        return None
    next_button_link = next_item.find('a').get('href')
    # The home page links to "catalogue/page-2.html", catalogue pages to "page-3.html"
    if "catalogue" in next_button_link:
        return f"{url_to_scrape}/{next_button_link}"
    return f"{url_to_scrape}/catalogue/{next_button_link}"
```
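An alternative to checking for "catalogue" in the href is to resolve the relative link against the URL of the page it came from, the way a browser does. The sketch below uses `urllib.parse.urljoin` from the standard library. The helper name `resolve_next_link` is hypothetical, and using it would require passing the current page URL into data_extract().

```python
from urllib.parse import urljoin


def resolve_next_link(current_url: str, href: str) -> str:
    """Resolve a relative "next" href against the page it was found on."""
    return urljoin(current_url, href)


# Home page: the next link is "catalogue/page-2.html"
print(resolve_next_link("https://books.toscrape.com/", "catalogue/page-2.html"))
# → https://books.toscrape.com/catalogue/page-2.html

# Catalogue page: the next link is just "page-3.html"
print(resolve_next_link("https://books.toscrape.com/catalogue/page-2.html", "page-3.html"))
# → https://books.toscrape.com/catalogue/page-3.html
```

Because the relative path is resolved against the directory of the current page, one rule covers both link formats.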

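To link everything together, the main part of the script performs these steps in order:

1. Load the proxies from proxy-list.json.
2. Validate the proxies in separate threads.
3. Scrape the first page with the first working proxy.
4. Request each following page through the next valid proxy.
5. Print the results.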

with open("proxy-list.json") as json_file:

proxies = json.load(json_file)

for each in proxies:

threading.Thread(target=verify_proxies, args=(each, )).start()

time.sleep(4)

for i in range(len(valid_proxies)):

response = request_function(url_to_scrape, valid_proxies[i])

if response != None:

next_button_link = data_extract(response)

break

else:

continue

for proxy in valid_proxies:

print(f"Using Proxy: {proxy['proxy_address']}")

response = request_function(next_button_link, proxy)

if response is not None:

next_button_link = data_extract(response)

else:

continue

for each in range(len(book_names)):

print(f"No {each+1}: Book Name: {book_names[each]} Book Price: {book_price[each]} and Availability {book_availability[each]}")import requests

import threading

from requests.auth import HTTPProxyAuth

import json

from bs4 import BeautifulSoup

import time

url_to_scrape = "https://books.toscrape.com"

valid_proxies = []

book_names = []

book_price = []

book_availability = []

next_button_link = ""

def verify_proxies(proxy: dict):

try:

if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":

proxy_auth = HTTPProxyAuth(proxy['proxy_username'], proxy['proxy_password'])

res = requests.get(

url_to_scrape,

auth=proxy_auth,

proxies={

"http": proxy['proxy_address'],

}

)

else:

res = requests.get(url_to_scrape, proxies={

"http": proxy['proxy_address'],

})

if res.status_code == 200:

valid_proxies.append(proxy)

print(f"Proxy Validated: {proxy['proxy_address']}")

except:

print("Proxy Invalidated, Moving on")

# Retrieves the HTML element of a page

def request_function(url, proxy):

try:

if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":

proxy_auth = HTTPProxyAuth(proxy['proxy_username'], proxy['proxy_password'])

response = requests.get(

url,

auth=proxy_auth,

proxies={

"http": proxy['proxy_address'],

}

)

else:

response = requests.get(url, proxies={

"http": proxy['proxy_address'],

})

if response.status_code == 200:

return response.text

except Exception as err:

print(f"Switching Proxies, URL access was unsuccessful: {err}")

return None

# Scraping

def data_extract(response):

soup = BeautifulSoup(response, "lxml")

books = soup.find_all("li", class_="col-xs-6 col-sm-4 col-md-3 col-lg-3")

next_button_link = soup.find("li", class_="next").find('a').get('href')

next_button_link = f"{url_to_scrape}/{next_button_link}" if "catalogue" in next_button_link else f"{url_to_scrape}/catalogue/{next_button_link}"

for each in books:

book_names.append(each.find("img").get("alt"))

book_price.append(each.find("p", class_="price_color").text)

book_availability.append(each.find("p", class_="instock availability").text.strip())

return next_button_link

# Get proxy from JSON

with open("proxy-list.json") as json_file:

proxies = json.load(json_file)

for each in proxies:

threading.Thread(target=verify_proxies, args=(each,)).start()

time.sleep(4)

for i in range(len(valid_proxies)):

response = request_function(url_to_scrape, valid_proxies[i])

if response is not None:

next_button_link = data_extract(response)

break

else:

continue

for proxy in valid_proxies:

print(f"Using Proxy: {proxy['proxy_address']}")

response = request_function(next_button_link, proxy)

if response is not None:

next_button_link = data_extract(response)

else:

continue

for each in range(len(book_names)):

print(

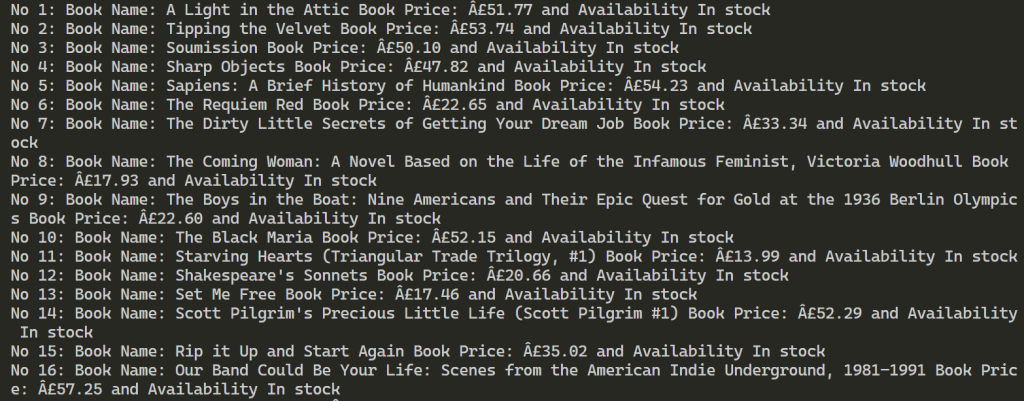

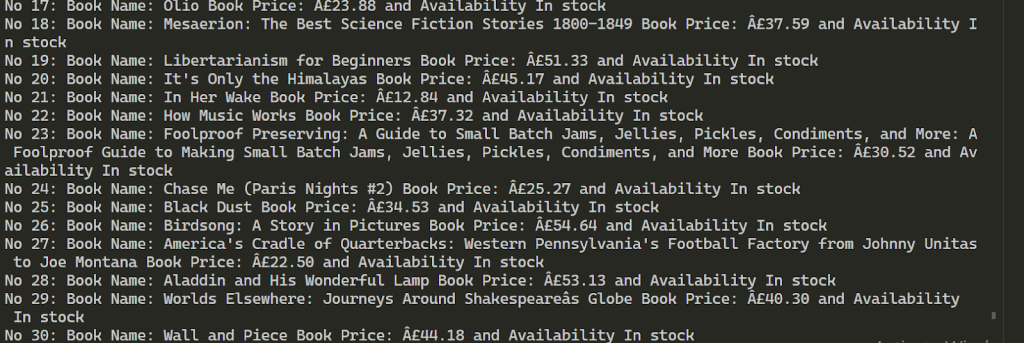

f"No {each + 1}: Book Name: {book_names[each]} Book Price: {book_price[each]} and Availability {book_availability[each]}")After a successful execution, the results look like the below. This goes on to extract information on over 100 books using the 2 proxies provided.
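In the script above, each valid proxy fetches at most one page after the first, so the number of pages is capped by the number of working proxies. To keep rotating until the catalogue ends, `itertools.cycle` can hand out proxies in a loop. The sketch below makes three assumptions. `fetch(url, proxy)` returns HTML or None, like request_function(). `parse(html)` returns the next URL or None, like data_extract() with a last-page check. The `max_pages` cap is a hypothetical safety limit.

```python
from itertools import cycle


def scrape_with_rotation(start_url, proxies, fetch, parse, max_pages=50):
    """Request pages in sequence, switching to the next proxy for every request.

    fetch(url, proxy) returns page HTML or None; parse(html) returns the next
    page URL or None on the last page. Returns the number of pages parsed.
    """
    if not proxies:
        return 0
    proxy_pool = cycle(proxies)
    url = start_url
    pages = 0
    failures_in_a_row = 0
    while url and pages < max_pages:
        proxy = next(proxy_pool)
        html = fetch(url, proxy)
        if html is None:
            failures_in_a_row += 1
            if failures_in_a_row >= len(proxies):  # every proxy failed on this URL
                break
            continue  # retry the same URL with the next proxy
        failures_in_a_row = 0
        url = parse(html)
        pages += 1
    return pages


# Demo with stand-ins: the "HTML" is just the URL, and parse() looks up the next one
pages = {"page-1": "page-2", "page-2": "page-3", "page-3": None}
calls = []


def fake_fetch(url, proxy):
    calls.append((url, proxy))
    return url


print(scrape_with_rotation("page-1", ["proxy-A", "proxy-B"], fake_fetch, pages.get))  # 3
print(calls)
```

With two proxies and three pages, the demo uses proxy-A, proxy-B, then proxy-A again. A failed request is retried with the next proxy in the cycle.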

Using multiple proxies for web scraping lets you send more requests to the target resource and helps you avoid blocking. To keep the scraping process stable, use IP addresses with high speed and a strong trust factor, such as static ISP and dynamic residential proxies. The script above can also be extended to cover other data scraping requirements.

When you rotate proxies, a common naive approach is to select them randomly without considering their network subnets. This seems simple but can lead to problems. To understand why, you need to understand how IPv4 addresses are grouped into subnets. An IPv4 address such as 192.168.1.1 is divided into four parts called octets. A subnet is defined by a prefix length. A common rule of thumb for proxies, and the one used in this section, treats the first three octets as the subnet (a /24 block). For example, 192.168.1.x belongs to one /24 subnet, while 192.168.2.x belongs to another.
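Python's standard `ipaddress` module can compute the /24 network for you. This avoids string-splitting mistakes and rejects malformed addresses. The sketch below is minimal and covers IPv4 only. Treating /24 as "the subnet" is the rule of thumb described above, not a rule websites are guaranteed to apply.

```python
import ipaddress


def subnet_24(ip: str) -> ipaddress.IPv4Network:
    """Return the /24 network containing an IPv4 address."""
    # strict=False accepts a host address and returns its enclosing network
    return ipaddress.IPv4Network(f"{ip}/24", strict=False)


def same_subnet(ip_a: str, ip_b: str) -> bool:
    return subnet_24(ip_a) == subnet_24(ip_b)


print(subnet_24("192.168.1.1"))                     # 192.168.1.0/24
print(same_subnet("192.168.1.1", "192.168.1.200"))  # True
print(same_subnet("192.168.1.1", "192.168.2.1"))    # False
```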

When you use proxies from the same subnet one after another, websites can detect the pattern and apply rate limits or block your requests. This happens because many anti-bot systems group traffic by subnet and may treat requests from one subnet as coming from a single user. To rotate proxies effectively, avoid using multiple proxies from the same subnet in a row.

A better strategy is to rotate proxies by subnet to maximize diversity. This means you pick proxies from different subnets in sequence, reducing the chance of hitting rate limits. You can improve this further by considering factors like the Autonomous System Number (ASN), which identifies the network provider, as well as the location and origin of each proxy.

Below is a simple Python snippet that skips any proxy sharing a subnet with the one used just before it:

```python
def get_subnet(ip):
    # First three octets, e.g. "192.168.1" for 192.168.1.2 (the /24 rule of thumb)
    return '.'.join(ip.split('.')[:3])


def rotate_proxies(proxies):
    last_subnet = None
    for proxy in proxies:
        current_subnet = get_subnet(proxy['ip'])
        if current_subnet == last_subnet:
            continue  # skip (drop) this proxy to avoid subnet repetition
        last_subnet = current_subnet
        yield proxy


# Example usage
proxies = [{'ip': '192.168.1.2'}, {'ip': '192.168.1.5'}, {'ip': '192.168.2.3'}, {'ip': '10.0.0.4'}]

for proxy in rotate_proxies(proxies):
    print(proxy['ip'])  # 192.168.1.2, then 192.168.2.3, then 10.0.0.4
```

This approach reduces the risk of being blocked by subnet-based rate limits when you rotate proxies in Python.
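rotate_proxies() avoids repeats by dropping proxies: in the example, 192.168.1.5 is never yielded. You may prefer to keep every proxy while still spreading consecutive requests across subnets, which is the "rotate by subnet" strategy described earlier. One way to do that is to group proxies by /24 and take one from each group in turn. The sketch below uses a hypothetical function name, `interleave_by_subnet`, and assumes the same `{'ip': ...}` dictionaries.

```python
from collections import defaultdict, deque


def get_subnet(ip):
    # First three octets, i.e. the same /24 rule of thumb
    return ".".join(ip.split(".")[:3])


def interleave_by_subnet(proxies):
    """Order proxies round-robin across subnets so neighbours rarely share one."""
    groups = defaultdict(deque)
    for proxy in proxies:
        groups[get_subnet(proxy["ip"])].append(proxy)

    ordered = []
    queues = list(groups.values())
    while queues:
        # Take one proxy from each subnet per round, then drop emptied groups
        for queue in queues:
            ordered.append(queue.popleft())
        queues = [q for q in queues if q]
    return ordered


proxies = [{'ip': '192.168.1.2'}, {'ip': '192.168.1.5'}, {'ip': '192.168.2.3'}, {'ip': '10.0.0.4'}]
for proxy in interleave_by_subnet(proxies):
    print(proxy['ip'])  # 192.168.1.2, 192.168.2.3, 10.0.0.4, 192.168.1.5
```

When one subnet holds most of the proxies, some back-to-back repeats at the end of the order are unavoidable.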

You must track proxy performance to keep your scraping stable. Monitoring proxy health lets you know which proxies work well and which fail. If you ignore this, you’ll waste time and risk bans.

Mark proxies as dead or failing based on response status codes or connection errors. For example, if a proxy repeatedly times out or returns HTTP 403 errors, mark it as dead. Then apply a recovery period before retrying the proxy. Don't reuse dead proxies immediately, or you will get repeated failures.

A practical way to track proxies is using a dictionary that holds proxy addresses alongside timestamps of failures. When a proxy fails, record the current time. Before reusing this proxy, check if enough time has passed since the last failure.

Here’s a concise Python example that manages dead proxies and retry logic when you rotate proxies in web scraping:

```python
import time

dead_proxies = {}  # proxy -> timestamp of its last failure


def is_proxy_dead(proxy):
    if proxy in dead_proxies:
        retry_after = 300  # seconds to wait before retry
        if time.time() - dead_proxies[proxy] < retry_after:
            return True
        # Recovery period is over: forget the failure
        del dead_proxies[proxy]
    return False


def mark_proxy_dead(proxy):
    dead_proxies[proxy] = time.time()


def get_working_proxy(proxies):
    for proxy in proxies:
        if not is_proxy_dead(proxy):
            return proxy
    return None  # no proxy available


# Example usage
proxies = ['192.168.1.2', '192.168.2.3', '10.0.0.4']
proxy = get_working_proxy(proxies)

if proxy is None:
    print("No proxies available")
else:
    # Use the proxy and check the response here
    # Simulate failure
    mark_proxy_dead(proxy)
```

This approach cuts down on failed attempts and keeps your scraping more efficient.
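The snippet above marks a proxy dead after a single failure, while the earlier advice is to do so only when a proxy fails repeatedly. The sketch below shows a small class that counts consecutive failures and starts the cooldown once a threshold is reached. `ProxyHealth` is a hypothetical name, and the limits (3 failures, a 300-second cooldown) are illustrative. The caller decides what counts as a failure, for example a timeout or an HTTP 403.

```python
import time


class ProxyHealth:
    """Count consecutive failures per proxy and bench proxies that keep failing."""

    def __init__(self, max_failures=3, cooldown=300.0, clock=time.monotonic):
        self.max_failures = max_failures  # failures in a row before benching
        self.cooldown = cooldown  # seconds a benched proxy stays out of rotation
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.failures = {}  # proxy -> current streak of failures
        self.dead_until = {}  # proxy -> clock value when it may be retried

    def record(self, proxy, ok):
        """Record one request outcome; the caller decides what counts as a failure."""
        if ok:
            self.failures[proxy] = 0  # any success resets the streak
            return
        count = self.failures.get(proxy, 0) + 1
        if count >= self.max_failures:
            self.dead_until[proxy] = self.clock() + self.cooldown
            count = 0  # start a fresh streak after the recovery period
        self.failures[proxy] = count

    def is_available(self, proxy):
        until = self.dead_until.get(proxy)
        if until is None:
            return True
        if self.clock() >= until:
            del self.dead_until[proxy]  # recovery period is over
            return True
        return False

    def pick(self, proxies):
        """Return the first proxy that is not benched, or None."""
        for proxy in proxies:
            if self.is_available(proxy):
                return proxy
        return None


# Demo with a manual clock instead of real time
fake_now = [0.0]
health = ProxyHealth(clock=lambda: fake_now[0])
for _ in range(3):  # e.g. three timeouts or HTTP 403s in a row
    health.record("203.0.113.5", ok=False)
print(health.pick(["203.0.113.5", "203.0.113.6"]))  # 203.0.113.6
fake_now[0] = 301.0
print(health.pick(["203.0.113.5", "203.0.113.6"]))  # 203.0.113.5
```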

Weighted random proxy rotation improves your scraping by choosing proxies based on their quality and history, not just randomly. You assign weights to proxies to favor the best ones.

Python's standard library provides random.choices(), which picks items according to assigned weights. You can use it to rotate proxies in Python by giving higher weights to proxies that are healthy, residential, or less recently used.

The example below assigns each proxy a weight based on its type, its failure count, and how recently it was used:

```python
import random
import time

now = time.time()

# last_used holds a Unix timestamp; 0 means "never used"
proxies = [
    {'ip': '192.168.1.2', 'type': 'residential', 'failures': 0, 'last_used': 0},
    {'ip': '192.168.2.3', 'type': 'datacenter', 'failures': 2, 'last_used': now - 10},
    {'ip': '10.0.0.4', 'type': 'residential', 'failures': 1, 'last_used': now - 50},
]


def calculate_weight(proxy):
    # Residential proxies start with a higher base weight than datacenter ones
    base_weight = 10 if proxy['type'] == 'residential' else 5
    # Each recorded failure shrinks the weight
    weight = base_weight / (1 + proxy['failures'])
    # Penalize recently used proxies (e.g., last used under 60 seconds ago)
    if time.time() - proxy['last_used'] < 60:
        weight /= 2
    return weight


weights = [calculate_weight(p) for p in proxies]
chosen_proxy = random.choices(proxies, weights=weights, k=1)[0]
print("Selected proxy:", chosen_proxy['ip'])
```

Weighted rotation lets you keep the best proxies in circulation longer, reducing failures and improving scraping speed.
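For the weights to reflect each proxy's history, the failures and last_used fields have to be updated after every request. The sketch below shows that feedback loop. It reuses the weighting rule from calculate_weight(): a residential base weight of 10 versus 5 for datacenter, divided by failures plus one, and halved when the proxy was used in the last 60 seconds. `record_result`, the explicit `now` parameter, and the decision to forgive one failure per success are hypothetical design choices. Passing a `random.Random` instance makes runs reproducible.

```python
import random
import time


def calculate_weight(proxy, now):
    base_weight = 10 if proxy['type'] == 'residential' else 5
    weight = base_weight / (1 + proxy['failures'])
    if now - proxy['last_used'] < 60:  # used within the last minute
        weight /= 2
    return weight


def pick_proxy(proxies, rng, now):
    weights = [calculate_weight(p, now) for p in proxies]
    return rng.choices(proxies, weights=weights, k=1)[0]


def record_result(proxy, success, now):
    """Feed the outcome back so the next weights reflect it."""
    proxy['last_used'] = now
    if success:
        # Assumption: each success forgives one past failure
        proxy['failures'] = max(0, proxy['failures'] - 1)
    else:
        proxy['failures'] += 1


proxies = [
    {'ip': '192.0.2.10', 'type': 'residential', 'failures': 0, 'last_used': 0},
    {'ip': '192.0.2.20', 'type': 'datacenter', 'failures': 0, 'last_used': 0},
]
rng = random.Random(42)
now = time.time()
chosen = pick_proxy(proxies, rng, now)
record_result(chosen, success=False, now=now)  # e.g. the request timed out
print(chosen['ip'], chosen['failures'], calculate_weight(chosen, now))
```

After a failure, the chosen proxy's weight drops twice: once for the extra failure and once because it was just used. It therefore becomes less likely to be picked on the next request.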

Why choose Proxy-Seller as your proxy provider when implementing weighted random rotation?

Using Proxy-Seller, you can tailor your proxy sets, mixing types and locations to best fit your rotation rules. This flexibility helps you optimize how you rotate proxies in Python, minimize failures, and keep your scraping workflow healthy and productive.

In summary, by applying a weighted random proxy rotation strategy, you improve proxy selection intelligently. Proxy-Seller’s reliable and diverse proxy offerings make it easier to implement these strategies effectively for your scraping projects.
