Python stands out as a top choice for web scraping due to its robust libraries and straightforward syntax. In this Python web scraping tutorial, we'll explore the fundamentals of extracting data and guide you through setting up your Python environment to create your first web scraper. We'll introduce you to key libraries suited for scraping tasks, including Beautiful Soup, Playwright, and lxml.

Many Python libraries simplify web scraping. This tutorial uses four of the most popular ones: requests for sending HTTP requests, Beautiful Soup and lxml for parsing, and Playwright for dynamic pages.

If you're wondering how to scrape a website using Python, it starts with understanding how HTTP requests work.

HTTP (HyperText Transfer Protocol) is an application-layer protocol for transferring data across the web. When you type a URL into the browser, it generates an HTTP request and sends it to the web server. The server sends back a response, which the browser renders as a web page. For web scraping, you mimic this process by generating HTTP requests from your script and retrieving the content of web pages programmatically.
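To make the request and response cycle concrete, here is a minimal sketch that starts a tiny web server on the local machine and fetches a page from it. It uses only the standard library so that it runs without internet access. The handler class, the page it serves, and the helper name fetch_from_local_server are assumptions made for this illustration.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a tiny HTML page."""

    def do_GET(self):
        body = b"<html><body><h1>Hello</h1></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example output quiet


def fetch_from_local_server():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    # An empty ProxyHandler makes sure the local request bypasses any proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        url = f"http://127.0.0.1:{server.server_port}/"
        with opener.open(url, timeout=5) as response:
            return response.status, response.headers["Content-Type"], response.read()
    finally:
        server.shutdown()
        server.server_close()


status, content_type, body = fetch_from_local_server()
print(status, content_type)
print(body.decode("utf-8"))
```

The script plays both roles: the handler is the server building a response (status line, headers, body), and the opener is the client sending the GET request, which is the part a scraper takes over from the browser.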

First, ensure you have Python installed on your system. You can download it from Python's official website.

A virtual environment helps manage dependencies. Use these commands to create and activate one (on Windows, the activation command is scraping_env\Scripts\activate instead):

    python -m venv scraping_env
    source scraping_env/bin/activate
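If you are unsure whether the environment is active, a quick check from Python itself can help. This is a small sketch; the function name in_virtual_environment is made up for the example.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix


print("Virtual environment active:", in_virtual_environment())
print("Interpreter:", sys.executable)
```

When the environment is active, the interpreter path printed on the second line should sit inside the scraping_env directory.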

Next, install the required packages using the following commands:

    pip install requests
    pip install beautifulsoup4
    pip install lxml

Let’s start with a simple example that uses the requests library to extract static content.

The most common type of HTTP request is the GET request, which retrieves data from a specified URL. Here is a basic example of a GET request to http://example.com.

    import requests

    url = 'http://example.com'
    response = requests.get(url)

The requests library provides several ways to handle and process the response:

Check status code: ensure the request was successful.

    if response.status_code == 200:
        print('Request was successful!')
    else:
        print('Request failed with status code:', response.status_code)

Extracting content: extract the text or JSON content from the response.

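    # Get response content as text
    page_content = response.text
    print(page_content)

    # Get response content as JSON. response.json() raises an error when the
    # body is not valid JSON, so check the Content-Type header first.
    if 'application/json' in response.headers.get('Content-Type', ''):
        json_content = response.json()
        print(json_content)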

HTTP and network errors may occur when a resource is not reachable, a request timed out, or the server returns an error HTTP status (e.g. 404 Not Found, 500 Internal Server Error). We can use the exception objects raised by requests to handle these situations.

    import requests

    url = 'http://example.com'

    try:
        response = requests.get(url, timeout=10)  # Set a timeout for the request
        response.raise_for_status()  # Raises an HTTPError for bad responses
    except requests.exceptions.HTTPError as http_err:
        print(f'HTTP error occurred: {http_err}')
    except requests.exceptions.ConnectionError:
        print('Failed to connect to the server.')
    except requests.exceptions.Timeout:
        print('The request timed out.')
    except requests.exceptions.RequestException as req_err:
        print(f'Request error: {req_err}')
    else:
        print('Request was successful!')
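Transient failures such as timeouts often succeed on a second attempt, so one common extension is to retry with an increasing delay. The sketch below is one possible way to do that and is not part of the requests library. The helper fetch_with_retries and its parameters are hypothetical names, and a fake fetch function stands in for a real request so the example runs offline.

```python
import time


def fetch_with_retries(fetch, retries=3, base_delay=1.0, sleep=time.sleep,
                       retry_on=(Exception,)):
    """Call fetch() until it succeeds or the attempts run out.

    All parameter names are hypothetical and chosen for this sketch.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch()
        except retry_on as err:
            if attempt == retries:
                raise  # out of attempts: let the caller see the error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            print(f"Attempt {attempt} failed ({err}); retrying in {delay}s")
            sleep(delay)


# Demonstration with a fake fetch that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network failure")
    return "<html>ok</html>"


delays = []  # record the pauses instead of actually sleeping
result = fetch_with_retries(flaky_fetch, sleep=delays.append)
print(result, delays)
```

With requests, fetch could be something like lambda: requests.get(url, timeout=10), with retry_on set to (requests.exceptions.ConnectionError, requests.exceptions.Timeout) so that only network-level failures are retried.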

To extract structured data, libraries like Beautiful Soup and lxml help identify and parse specific parts of the page.

Web pages consist of nested elements represented by tags, such as <div>, <p>, <a>, etc. Each tag can have attributes and contain text, other tags, or both.
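To see this nesting in practice, the following sketch walks a small HTML fragment with the standard library's html.parser and records each tag, its attributes, and its text at the matching depth. The class name OutlinePrinter and the sample markup are invented for the illustration. The libraries covered later do this work for you.

```python
from html.parser import HTMLParser


class OutlinePrinter(HTMLParser):
    """Record each start tag with its nesting depth, attributes and text."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.lines = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        self.lines.append("  " * self.depth + f"<{tag}> {dict(attrs)}")
        self.depth += 1

    def handle_endtag(self, tag):
        # Note: void tags such as <br> have no end tag, so a fuller
        # version would need to skip the depth change for them.
        self.depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.lines.append("  " * self.depth + repr(text))


sample_html = (
    '<div class="card"><p>Read the <a href="/docs">docs</a> first.</p></div>'
)
printer = OutlinePrinter()
printer.feed(sample_html)
printer.close()
print("\n".join(printer.lines))
```

The indented output mirrors the document tree: the link sits inside the paragraph, which sits inside the div.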

XPath and CSS selectors provide a way to target elements based on their attributes or position in the document.

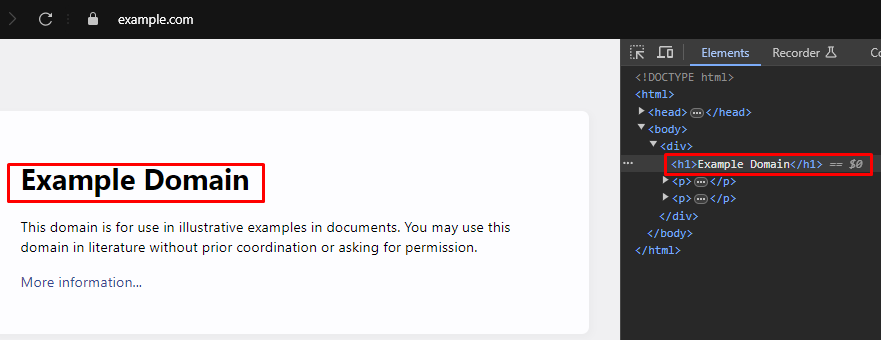

When scraping, extracting specific data often requires identifying the correct XPath or CSS selectors. Here's how to find them efficiently:

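Most modern web browsers come with built-in developer tools that allow you to inspect the HTML structure of web pages. Right-click the element you want and choose Inspect, then right-click the highlighted node in the inspector and use its Copy menu to copy the element's XPath or CSS selector. For a top-level heading, the result might look like this: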

XPath: /html/body/div/h1

CSS Selector: body > div > h1
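A path like the one above can be tried on a small snippet before it goes into a scraper. The sketch below uses the standard library's xml.etree.ElementTree, which supports only a subset of XPath and requires well-formed markup, so treat it as a quick experiment rather than a substitute for lxml. The sample page is invented.

```python
import xml.etree.ElementTree as ET

# A small, well-formed page with the same shape as the selectors above.
page = """
<html>
  <body>
    <div>
      <h1>Example Domain</h1>
      <p>Some introductory text.</p>
    </div>
  </body>
</html>
"""

root = ET.fromstring(page.strip())  # root is the <html> element

# Relative form of /html/body/div/h1, evaluated from the <html> element.
heading = root.find("./body/div/h1")
print(heading.text)

# ".//p" matches <p> elements at any depth below the root.
paragraphs = [p.text for p in root.findall(".//p")]
print(paragraphs)
```

The first query depends on the exact position of the heading, while the second matches by tag name wherever it appears. Position-based paths tend to break when a site changes its layout.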

Beautiful Soup is a Python library for parsing page content. It provides methods and attributes to navigate and search through the page structure.

    from bs4 import BeautifulSoup
    import requests

    # URL of the webpage to scrape
    url = 'https://example.com'

    # Send an HTTP GET request to the URL
    response = requests.get(url)

    # Parse the HTML content of the response using Beautiful Soup
    soup = BeautifulSoup(response.content, 'html.parser')

    # Use the CSS selector to find all <h1> tags that are within <div> tags
    # that are direct children of the <body> tag
    h1_tags = soup.select('body > div > h1')

    # Iterate over the list of found <h1> tags and print their text content
    for tag in h1_tags:
        print(tag.text)

Like Beautiful Soup, lxml allows you to catch and handle issues gracefully using exceptions such as lxml.etree.XMLSyntaxError.

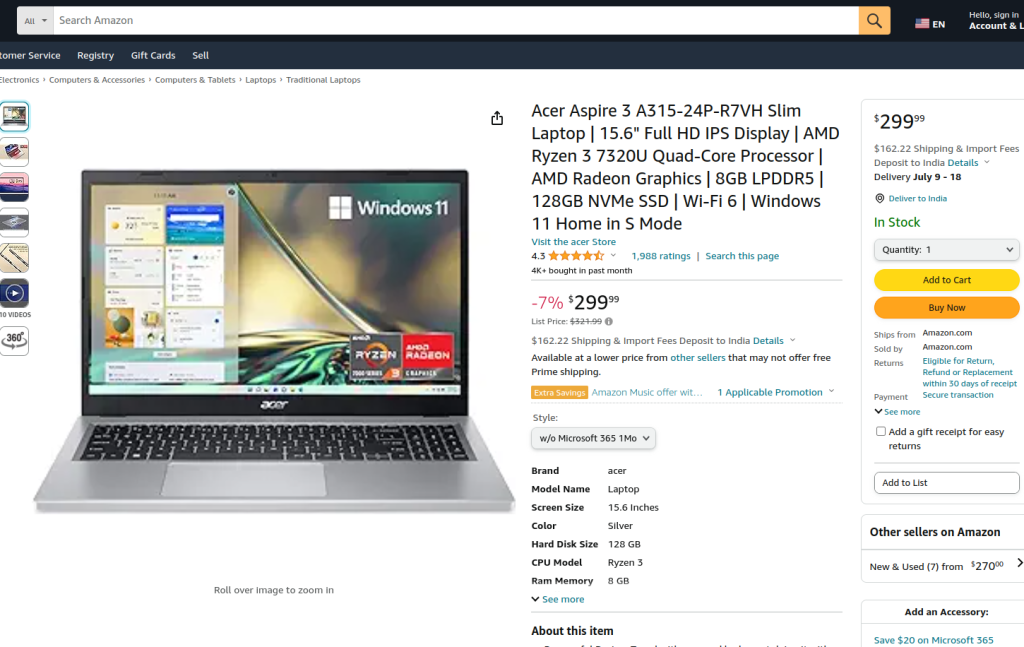

The provided “Python web scraping code” performs data extraction on an Amazon product page using Playwright and lxml. It sets up a proxy and launches a browser, navigates to the product page, interacts with elements, waits for full page load, and captures the page source.

This content is parsed using lxml to create an element tree, and XPath is used to extract the product title. The script handles cases where the element isn’t found or parsing fails. After scraping, it properly closes the browser session.

    from bs4 import BeautifulSoup
    import requests

    # URL of the webpage to scrape
    url = 'https://example.com'

    # Send an HTTP GET request to the URL
    response = requests.get(url)

    try:
        # Parse the HTML content of the response using Beautiful Soup
        soup = BeautifulSoup(response.content, 'html.parser')

        # Use the CSS selector to find all <h1> tags that are within <div> tags
        # that are direct children of the <body> tag
        h1_tags = soup.select('body > div > h1')

        # Iterate over the list of found <h1> tags and print their text content
        for tag in h1_tags:
            print(tag.text)
    except AttributeError as attr_err:
        # Handle cases where an AttributeError might occur (e.g., if the response.content is None)
        print(f'Attribute error occurred: {attr_err}')
    except Exception as parse_err:
        # Handle any other exceptions that might occur during the parsing
        print(f'Error while parsing HTML: {parse_err}')

Another popular library for parsing content is lxml. While BeautifulSoup is user-friendly, lxml is faster and more flexible, making it ideal for performance-critical tasks.

    from lxml.html import fromstring
    import requests

    # URL of the webpage to scrape
    url = 'https://example.com'

    # Send an HTTP GET request to the URL
    response = requests.get(url)

    # Parse the HTML content of the response using lxml's fromstring method
    parser = fromstring(response.text)

    # Use XPath to find the text content of the first <h1> tag
    # that is within a <div> tag, which is a direct child of the <body> tag
    title = parser.xpath('/html/body/div/h1/text()')[0]

    # Print the title
    print(title)

Similar to Beautiful Soup, lxml allows you to handle parsing errors gracefully by catching exceptions like lxml.etree.XMLSyntaxError.

    from lxml.html import fromstring
    from lxml import etree
    import requests

    # URL of the webpage to scrape
    url = 'https://example.com'

    # Send an HTTP GET request to the URL
    response = requests.get(url)

    try:
        # Parse the HTML content of the response using lxml's fromstring method
        parser = fromstring(response.text)

        # Use XPath to find the text content of the first <h1> tag
        # that is within a <div> tag, which is a direct child of the <body> tag
        title = parser.xpath('/html/body/div/h1/text()')[0]

        # Print the title
        print(title)
    except IndexError:
        # Handle the case where the XPath query does not return any results
        print('No <h1> tag found in the specified location.')
    except (etree.ParserError, etree.XMLSyntaxError) as parse_err:
        # Handle parser errors (for example, an empty document) and XML syntax errors
        print(f'Error while parsing HTML: {parse_err}')
    except Exception as e:
        # Handle any other exceptions
        print(f'An unexpected error occurred: {e}')

Once you have successfully extracted data, the next step is to save this data. Python provides several options for saving scraped data, including saving to CSV files, JSON files, and databases. Here’s an overview of how to save extracted data using different formats:

CSV (Comma-Separated Values) is a simple and widely used format for storing tabular data. Python's built-in csv module makes it easy to write data to CSV files.

    import csv

    # Sample data
    data = {
        'title': 'Example Title',
        'paragraphs': ['Paragraph 1', 'Paragraph 2', 'Paragraph 3']
    }

    # Save data to a CSV file
    with open('scraped_data.csv', mode='w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow(['Title', 'Paragraph'])
        for paragraph in data['paragraphs']:
            writer.writerow([data['title'], paragraph])

    print('Data saved to scraped_data.csv')
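When each scraped item is a dictionary, csv.DictWriter can map keys to columns directly. The following is a small sketch under that assumption. The records and the helper name write_records are hypothetical, and an in-memory buffer replaces a real file so the output can be inspected.

```python
import csv
import io

# Hypothetical records, as a scraper might collect them from several pages.
records = [
    {"title": "First page", "url": "https://example.com/1"},
    {"title": "Second page", "url": "https://example.com/2"},
]


def write_records(records, file):
    """Write a list of dicts as CSV rows, using the dict keys as the header."""
    writer = csv.DictWriter(file, fieldnames=["title", "url"])
    writer.writeheader()
    writer.writerows(records)


# io.StringIO stands in for a real file so the result can be inspected.
buffer = io.StringIO(newline="")
write_records(records, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write to disk instead, pass a file opened with newline='' and encoding='utf-8', as in the example above.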

JSON (JavaScript Object Notation) is a lightweight data-interchange format that is easy to read and write. Python's built-in json module provides methods to save data in JSON format.

    import json

    # Sample data
    data = {
        'title': 'Example Title',
        'paragraphs': ['Paragraph 1', 'Paragraph 2', 'Paragraph 3']
    }

    # Save data to a JSON file
    with open('scraped_data.json', mode='w', encoding='utf-8') as file:
        json.dump(data, file, ensure_ascii=False, indent=4)

    print('Data saved to scraped_data.json')
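Databases were mentioned as a third storage option. As a minimal sketch, the standard library's sqlite3 module can store scraped rows without any server setup. The table name pages, its columns, and the sample rows are assumptions made for this example.

```python
import sqlite3

# Hypothetical scraped rows: (title, url).
rows = [
    ("First page", "https://example.com/1"),
    ("Second page", "https://example.com/2"),
]

# ":memory:" keeps the database in RAM; pass a file name to persist it.
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE IF NOT EXISTS pages (title TEXT, url TEXT UNIQUE)"
)

# Parameter placeholders (?) avoid building SQL from scraped strings.
# INSERT OR IGNORE skips rows whose URL is already stored.
connection.executemany(
    "INSERT OR IGNORE INTO pages (title, url) VALUES (?, ?)", rows
)
# Inserting the first row again simulates re-scraping the same page.
connection.executemany(
    "INSERT OR IGNORE INTO pages (title, url) VALUES (?, ?)", rows[:1]
)
connection.commit()

saved = connection.execute("SELECT title, url FROM pages ORDER BY url").fetchall()
print(saved)
connection.close()
```

The UNIQUE constraint on url is a design choice for scrapers that run repeatedly: re-scraping the same page does not create duplicate rows.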

Playwright is a powerful tool for scraping dynamic content and interacting with web elements. It can handle JavaScript-heavy websites that static HTML parsers cannot.

Install Playwright and set it up:

    pip install playwright
    playwright install

Playwright allows you to interact with web elements like filling out forms and clicking buttons. It can wait for AJAX requests to complete before proceeding, making it ideal for scraping dynamic content.

The following script extracts data from an Amazon product page using Playwright and lxml. After the necessary modules are imported, a run function encapsulates the scraping logic. It sets up a proxy server and launches a new browser instance with that proxy in non-headless mode, so you can watch the browser's actions. Within the browser context, a new page is opened and navigated to the specified Amazon product URL, with a timeout of 60 seconds to give the page time to load.

The script then interacts with the page, selecting a specific product style from a dropdown menu and then a product option, using CSS and XPath locators. Once these interactions are complete and the page has loaded, the page content is captured.

This content is then parsed with lxml's fromstring method to create an element tree. An XPath query extracts the text of the product title from the element with the ID productTitle. The script handles cases where the XPath query returns no results, where parsing fails, or where something else goes wrong unexpectedly. Finally, the extracted product title is printed, and both the browser context and the browser itself are closed to end the session.

The run function is executed within a Playwright session started by sync_playwright, which starts Playwright and shuts it down cleanly when the with block exits.

    from playwright.sync_api import Playwright, sync_playwright
    from lxml import etree
    from lxml.html import fromstring


    def run(playwright: Playwright) -> None:
        # Define the proxy server (replace the placeholders with real values)
        proxy = {"server": "https://IP:PORT", "username": "LOGIN", "password": "PASSWORD"}

        # Launch a new browser instance with the specified proxy and in non-headless mode
        browser = playwright.chromium.launch(
            headless=False,
            proxy=proxy,
            slow_mo=50,
            args=['--ignore-certificate-errors'],
        )

        # Create a new browser context
        context = browser.new_context(ignore_https_errors=True)

        # Open a new page in the browser context
        page = context.new_page()

        # Navigate to the specified Amazon product page
        page.goto(
            "https://www.amazon.com/A315-24P-R7VH-Display-Quad-Core-Processor-Graphics/dp/B0BS4BP8FB/",
            timeout=60000,  # 60 seconds, expressed in milliseconds
        )

        # Wait for the page to fully load
        page.wait_for_load_state("load")

        # Select a specific product style from the dropdown menu
        page.locator("#dropdown_selected_style_name").click()

        # Select a specific product option
        page.click('//*[@id="native_dropdown_selected_style_name_1"]')
        page.wait_for_load_state("load")

        # Get the HTML content of the loaded page
        html_content = page.content()

        try:
            # Parse the HTML content using lxml's fromstring method
            parser = fromstring(html_content)

            # Use XPath to extract the text content of the product title
            product_title = parser.xpath('//span[@id="productTitle"]/text()')[0].strip()

            # Print the extracted product title
            print({"Product Title": product_title})
        except IndexError:
            # Handle the case where the XPath query does not return any results
            print('Product title not found in the specified location.')
        except (etree.ParserError, etree.XMLSyntaxError) as parse_err:
            # Handle parser errors and XML syntax errors during parsing
            print(f'Error while parsing HTML: {parse_err}')
        except Exception as e:
            # Handle any other exceptions
            print(f'An unexpected error occurred: {e}')

        # Close the browser context and the browser
        context.close()
        browser.close()


    # Use sync_playwright to start the Playwright session and run the script
    with sync_playwright() as playwright:
        run(playwright)

Web scraping with Python is a powerful method for harvesting data from websites. The tools discussed facilitate the extraction, processing, and storage of web data for various purposes. In this process, the use of proxy servers to alternate IP addresses and implementing delays between requests are crucial for circumventing blocks. Beautiful Soup is user-friendly for beginners, while lxml is suited for handling large datasets thanks to its efficiency. For more advanced scraping needs, especially with dynamically loaded JavaScript websites, Playwright proves to be highly effective.
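As a rough sketch of the proxy rotation and request delays mentioned above, the generator below pairs each URL with the next proxy in a list and pauses for a random interval between requests. The proxy addresses are placeholders, and plan_requests with its parameters is a hypothetical helper rather than part of any library.

```python
import itertools
import random
import time

# Placeholder proxy addresses; replace them with real ones.
PROXIES = ["http://proxy-a:8080", "http://proxy-b:8080", "http://proxy-c:8080"]


def plan_requests(urls, proxies, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Yield (url, proxy) pairs, pausing a random interval between them."""
    proxy_cycle = itertools.cycle(proxies)
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before the rest.
            sleep(random.uniform(min_delay, max_delay))
        yield url, next(proxy_cycle)


urls = [f"https://example.com/page/{n}" for n in range(1, 5)]
pauses = []  # record the pauses instead of actually sleeping
schedule = list(plan_requests(urls, PROXIES, sleep=pauses.append))
for url, proxy in schedule:
    print(url, "via", proxy)
```

With requests, each pair could be used as requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=10). Leaving sleep at its default makes the generator actually wait between requests.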

This Python web scraping tutorial outlines practical methods for automated data extraction using libraries like Beautiful Soup, lxml, and Playwright. In technical terms, web scraping with Python means using Python-based tools to systematically retrieve and process structured data from websites.

Whether you're working with static content or JavaScript-rendered pages, there’s a Python tool for the job. By learning HTTP requests, mastering parsing, and understanding data extraction, you’re ready to build reliable scrapers.

As you advance, remember to follow ethical scraping practices – respect robots.txt, avoid overwhelming servers, and always handle data responsibly. With a solid foundation in place, you can now confidently start developing your own scraping projects and unlock the full potential of web data.
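Respecting robots.txt can be automated with the standard library's urllib.robotparser. In this sketch the rules are inlined so that it runs without network access, and the user agent name is a made-up example. In a real scraper you would typically point the parser at the site's robots.txt with set_url() and read().

```python
from urllib.robotparser import RobotFileParser

# Example rules, inlined so the sketch runs without network access.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.strip().splitlines())

user_agent = "my-scraper"  # hypothetical user agent name

allowed_public = parser.can_fetch(user_agent, "https://example.com/products")
allowed_private = parser.can_fetch(user_agent, "https://example.com/private/data")
delay = parser.crawl_delay(user_agent)

print("products allowed:", allowed_public)
print("private allowed:", allowed_private)
print("crawl delay (seconds):", delay)
```

Checking can_fetch before each request, and sleeping for the crawl delay when one is given, keeps a scraper within the limits a site has published.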
