According to Reputation X, more than 86% of consumers lose trust in a brand when negative materials appear at the top of search results. For businesses, this translates into direct losses: lower conversion rates and higher marketing costs, which makes SERM activities a critical part of a reputation strategy. Implementation, however, has become far more complex: query limits, CAPTCHAs, and Google’s discontinuation of the &num=100 parameter have sharply reduced the capabilities of standard data collection systems. In this context, using proxies in SERM is not just a technical tweak, but a strategic layer for protecting reputation and a company’s financial resilience.

This article covers:

Search Engine Reputation Management (SERM) is a systematic process for shaping the brand’s information environment in search engines – sometimes referred to as a brand’s search‑engine reputation. The goal is to build a results structure where positive and neutral materials consistently hold the top positions.

Unlike SEO, which focuses exclusively on promoting a specific site, reputation management operates across the broader ecosystem of information sources: search results, review platforms, the press, blogs, and social media – everything that shapes how a brand is perceived online.

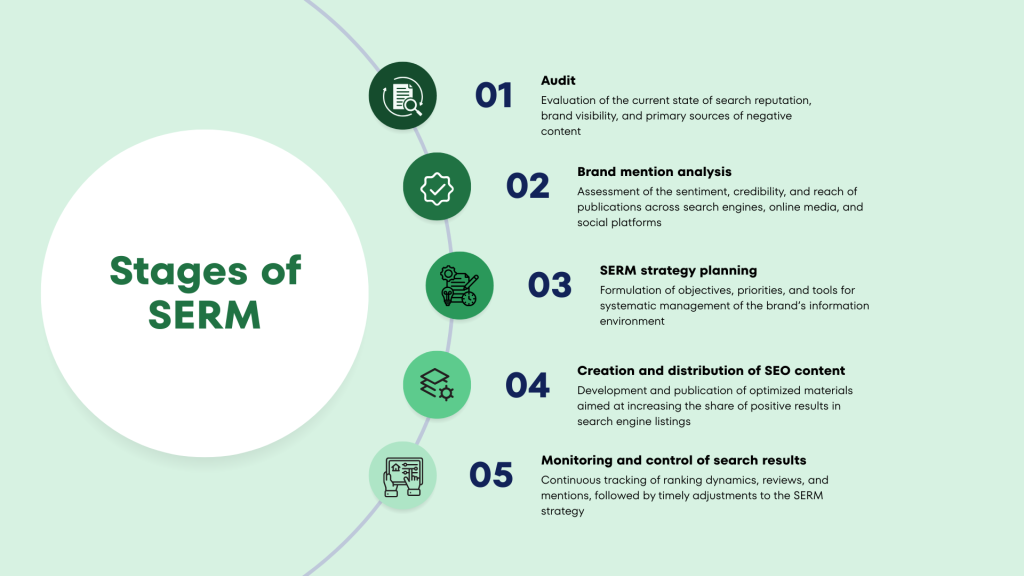

Execution follows staged steps: an audit, brand‑mention analysis, SERM strategy planning, creation and placement of SEO content, and ongoing monitoring and control of search results.

To do this, teams rely on SERM tools such as Google Alerts, Ahrefs, Semrush, Sistrix, Serpstat, Topvisor, media‑monitoring systems, and more. Using these tools is far less costly than dealing with a full‑blown reputation crisis. At the same time, outside factors can make the job substantially harder. In 2024, for example, Google tightened Search Console API usage by introducing per‑second and daily quotas. Even with technical access, companies ran into barriers when they tried to scale data collection. Then, in 2025, Google dropped the &num=100 parameter, a reminder that brand‑reputation control cannot rest solely on whatever search‑engine conditions happen to hold today.

After Google dropped the &num=100 parameter, analytics tools and SERM service platforms could extract a maximum of 10 links per request instead of the previous 100. Collecting the same depth of results therefore takes up to ten times as many calls to the search engine. As a result, infrastructure load, quota consumption, and analytical costs all increased.
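The effect on request volume can be estimated with simple arithmetic. The sketch below is illustrative only: the function name `requests_needed` and the figure of 500 tracked keywords are assumptions, not numbers from the article.

```python
import math


def requests_needed(keywords: int, depth: int, page_size: int) -> int:
    """Estimate how many search requests one monitoring pass needs.

    keywords  -- number of tracked queries (hypothetical input)
    depth     -- how many results per query you want (e.g. Top-100)
    page_size -- results returned per request (100 before, 10 after)
    """
    if keywords < 0 or depth <= 0 or page_size <= 0:
        raise ValueError("keywords must be >= 0; depth and page_size must be > 0")
    # Each keyword needs enough pages to cover the requested depth.
    pages_per_keyword = math.ceil(depth / page_size)
    return keywords * pages_per_keyword


if __name__ == "__main__":
    before = requests_needed(keywords=500, depth=100, page_size=100)
    after = requests_needed(keywords=500, depth=100, page_size=10)
    print(f"before: {before} requests, after: {after} requests")
```

Under these assumptions, a Top‑100 pass over 500 keywords grows from 500 to 5,000 requests, while limiting depth to the Top‑30 brings it down to 1,500.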

The effects were immediate. Tyler Gargula (LOCOMOTIVE Agency) reported that 87.7% of sites saw impressions decline in Google Search Console, and 77.6% lost unique search queries.

For businesses, that means higher operating expenses and new technical risks: frequent requests to search engines trigger CAPTCHAs and can lead to temporary access restrictions. Budgets for SEO and SERM rise, and monitoring itself gets harder. Search‑result reputation management has shifted from a supporting activity into a fully fledged operational challenge.

In these conditions, companies need to retool processes:

Only those who adapt to the new rules will keep control over how they appear in search.

Effective search‑reputation work requires stable access to data and the ability to scale monitoring without sacrificing accuracy. Proxies have become a cornerstone of SERM infrastructure.

They help solve several problems at once:

In short, proxies and SERM services together form a foundational element of the technical stack for reputation management. Without proxies, companies run up against search‑engine limits, access restrictions, and the inability to observe local markets accurately.

Below are SERM services and their counterparts that integrate well with proxy servers and enable businesses to maintain monitoring accuracy, control the SERP, and achieve durable results even under strict limits and evolving search‑engine policies.

Ahrefs, Semrush, Sistrix, Serpstat, and Topvisor continue to provide comprehensive analytics on rankings, mentions, and snippet dynamics. However, after &num=100 was removed, the effectiveness of these tools depends heavily on their ability to perform repeated requests without running into CAPTCHAs or other friction.

A practical adjustment is to revisit the depth of monitoring. For companies that already rank near the top, tracking the Top‑10 or Top‑30 is often sufficient, since over 90% of users don’t go past the third page.

When an analysis must span deeper result sets, it’s more effective to combine residential and mobile proxies (with authentication, IP rotation, and geo‑selection) with custom solutions. This approach scales data collection and yields a representative SERP picture with these advantages:

Custom solutions may be your own parsers or open‑source frameworks, detailed below.

For teams with limited budgets that still need complete search results, open‑source scripts and frameworks are often the best fit.

Choosing the right intermediary server has a direct impact on analytical quality and stability. SERM typically uses four proxy types: residential, mobile, ISP, and datacenter. At Proxy‑Seller, we work with all of them and tailor configurations to specific SERM tasks–from local monitoring to large‑scale reputation programs.

Modern SERM analysis goes far beyond reviews and SEO. With constant algorithm changes, API constraints, and stricter anti‑bot policies, process resilience depends directly on technical infrastructure. Proxy networks aren’t just an auxiliary tool–they’re the backbone of a reliable, successful strategy.

For specialists handling large data volumes across geographically distributed markets, the optimal setup is a mix of residential and mobile dynamic proxies. Together, they keep analytics accurate, operate within platform conditions, and allow you to scale monitoring.

Integrating proxies into SERM workflows is an investment in resilient analytics and the ability to get a complete, trustworthy view of the SERP – even as search‑engine rules keep changing.

Use parsers and frameworks such as Playwright, Puppeteer, Scrapy, and se‑scraper. Configure proxy rotation for 10 sequential requests (pages 1–10). Employ clustering and asynchronous scripts for automation.
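A minimal sketch of that setup, using only the Python standard library, might look like the following. It plans the ten page requests, assigns proxies in rotation, and runs them asynchronously with bounded concurrency. The `start` offset for pagination, the proxy addresses, and the `fetch` callable are assumptions; in practice `fetch` would wrap whichever tool you use (Playwright, Scrapy, and so on), and that wrapper is not shown here.

```python
import asyncio
from itertools import cycle
from urllib.parse import urlencode

RESULTS_PER_PAGE = 10  # one request now returns at most 10 links


def build_page_plan(query, proxies, pages=10,
                    base_url="https://www.google.com/search"):
    """Return one job per results page, rotating through the proxy pool."""
    proxy_cycle = cycle(proxies)
    plan = []
    for page in range(pages):
        # Assumption: results are paginated with a `start` offset.
        params = {"q": query, "start": page * RESULTS_PER_PAGE}
        plan.append({
            "page": page + 1,
            "url": f"{base_url}?{urlencode(params)}",
            "proxy": next(proxy_cycle),
        })
    return plan


async def collect(plan, fetch, max_concurrency=3):
    """Run all jobs through `fetch(url, proxy)` with limited concurrency."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def run(job):
        async with semaphore:
            return await fetch(job["url"], job["proxy"])

    # gather() preserves the order of the plan in its result list.
    return await asyncio.gather(*(run(job) for job in plan))


if __name__ == "__main__":
    # Hypothetical proxy endpoints, for illustration only.
    pool = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]

    async def fake_fetch(url, proxy):
        # Stand-in for a real HTTP or browser call.
        await asyncio.sleep(0)
        return f"{proxy} -> {url}"

    for line in asyncio.run(collect(build_page_plan("brand reviews", pool), fake_fetch)):
        print(line)
```

Passing `fetch` in as a parameter keeps the rotation logic independent of the scraping library, so the same plan can be reused if the underlying tool changes.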

Use proxies from the target region so the SERP matches what a local user sees. This is critical when negative materials are pushed in specific countries or cities.
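As a rough illustration, region selection can be reduced to a lookup from country code to an authenticated proxy endpoint. Everything specific below is an assumption: the hostnames, ports, and credentials are placeholders, and the `gl` and `hl` query parameters are used here as commonly seen country and language hints, not as a guarantee of how the search engine localizes results.

```python
from urllib.parse import quote, urlencode

# Hypothetical inventory: one proxy endpoint per target country.
PROXIES_BY_COUNTRY = {
    "de": {"host": "de.proxy.example", "port": 8080},
    "br": {"host": "br.proxy.example", "port": 8080},
}


def proxy_url(country, username, password):
    """Build an authenticated proxy URL for the requested country."""
    entry = PROXIES_BY_COUNTRY.get(country.lower())
    if entry is None:
        raise KeyError(f"no proxy configured for country {country!r}")
    # Percent-encode credentials so characters like '@' or ':' stay valid.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"http://{user}:{secret}@{entry['host']}:{entry['port']}"


def localized_request(query, country, language, username, password):
    """Pair a search URL with a proxy located in the same country."""
    params = {"q": query, "gl": country.lower(), "hl": language}
    return {
        "url": "https://www.google.com/search?" + urlencode(params),
        "proxy": proxy_url(country, username, password),
    }


if __name__ == "__main__":
    print(localized_request("brand name", "DE", "de", "user", "p@ss"))
```

Failing loudly when no proxy exists for a country is deliberate: silently falling back to another region would produce a SERP that no local user actually sees.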

Work within platform limits by rotating IPs and User‑Agents, adding request delays, and distributing load across an IP pool.
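These measures can be combined in a small scheduler, sketched below with the standard library only. Each request goes to the least‑used proxy, gets a User‑Agent picked at random, and waits for a base delay plus random jitter. The delay values, User‑Agent strings, and proxy names are placeholders chosen for illustration, not recommended settings.

```python
import random
from collections import Counter


def schedule_requests(urls, proxies, user_agents,
                      base_delay=2.0, jitter=1.5, seed=None):
    """Assign a proxy, a User-Agent, and a delay to every URL.

    The least-used proxy is chosen each time, which spreads load
    evenly across the pool. `seed` makes the schedule reproducible.
    """
    if not proxies or not user_agents:
        raise ValueError("proxies and user_agents must not be empty")
    rng = random.Random(seed)
    usage = Counter({proxy: 0 for proxy in proxies})
    schedule = []
    for url in urls:
        # Ties resolve in list order, so an idle pool is used round-robin.
        proxy = min(proxies, key=lambda p: usage[p])
        usage[proxy] += 1
        schedule.append({
            "url": url,
            "proxy": proxy,
            "user_agent": rng.choice(user_agents),
            # Seconds to wait before sending this request.
            "delay": base_delay + rng.uniform(0, jitter),
        })
    return schedule


if __name__ == "__main__":
    # Placeholder values for illustration only.
    jobs = schedule_requests(
        urls=[f"https://example.com/serp?page={n}" for n in range(1, 7)],
        proxies=["proxy-a", "proxy-b", "proxy-c"],
        user_agents=["ExampleBrowser/1.0", "ExampleBrowser/2.0"],
        seed=42,
    )
    for job in jobs:
        print(f"{job['delay']:.2f}s  {job['proxy']}  {job['user_agent']}  {job['url']}")
```

A caller would sleep for `delay` seconds before each request; keeping the schedule separate from the sending code makes the pacing easy to inspect and adjust.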
