Web scraping is an effective method for extracting data from the web. Many developers prefer the Python requests library for web scraping projects because it’s simple and effective. However, the requests library has its limitations. One typical problem in web scraping is failed requests, which often lead to unstable data extraction. In this article, we will go through the process of implementing request retries in Python so you can handle HTTP errors and keep your web scraping scripts stable and reliable.

Retrying failed requests means automatically sending an HTTP request again when it fails due to a temporary problem. This prevents data loss or interruptions in your programs caused by brief network glitches or server issues.

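Not all errors deserve retries. Retrying mainly helps with temporary errors, such as timeouts, dropped connections, and 5xx server responses. Permanent errors, such as 404 Not Found, will fail again no matter how many times you retry.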

To manage retry timing, retry logic for Python requests typically uses exponential backoff. This means waiting longer between each retry to reduce load on the server and avoid rapid repeated requests.

The backoff formula is:

backoff_factor * (2 ** (retry_count - 1))

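Here are practical examples using different backoff factors. With a backoff factor of 0.5, the first three retries wait 0.5, 1, and 2 seconds. With a factor of 1, they wait 1, 2, and 4 seconds. With a factor of 2, they wait 2, 4, and 8 seconds.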

This gradual increase protects the server and improves your success chances.
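As a rough illustration of the formula above, the sketch below computes the wait before each retry for a few backoff factors. The function name `backoff_delays` is hypothetical and only mirrors the formula; it is not part of requests or urllib3.

```python
def backoff_delays(backoff_factor, retries):
    """Return the wait (in seconds) before each retry, per the formula above."""
    return [
        backoff_factor * (2 ** (retry_count - 1))
        for retry_count in range(1, retries + 1)
    ]


# Print the delay schedule for a few illustrative backoff factors.
for factor in (0.5, 1, 2):
    print(factor, backoff_delays(factor, 4))
```

Each extra retry doubles the wait, so a small factor is usually enough when several retries are allowed.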

Practical advice for retrying requests in Python:

Let’s set up our environment first. Make sure you have Python installed and any IDE of your choice. Then install the requests library if you don’t have it already.

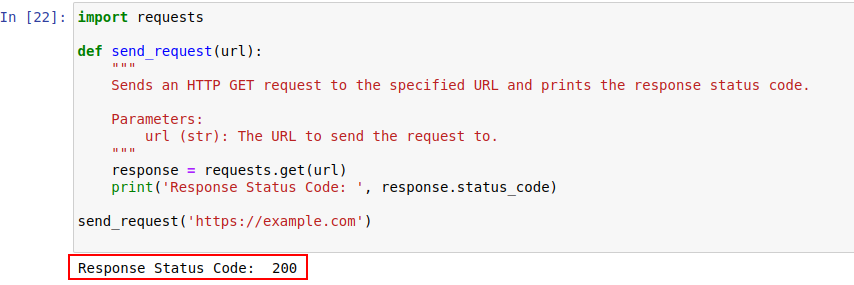

    pip install requests

Once installed, let's send a request to example.com using Python's requests module. Here's a simple function that does just that:

    import requests


    def send_request(url):
        """
        Sends an HTTP GET request to the specified URL and prints the response status code.

        Parameters:
            url (str): The URL to send the request to.
        """
        response = requests.get(url)
        print('Response Status Code: ', response.status_code)


    send_request('https://example.com')

Running the code prints the status code of the response.

Let's take a closer look at HTTP status codes to understand them better.

The server responds to an HTTP request with a status code indicating the request's outcome. Here's a quick rundown:
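As a small illustration, the standard status code classes can be told apart by the first digit of the code. The helper below is a hypothetical sketch (`status_class` is not part of requests) that maps a code to its class.

```python
def status_class(status_code):
    """Map an HTTP status code to its general class by its first digit."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    return classes.get(status_code // 100, "unknown")


# Classify a few common codes.
for code in (200, 301, 404, 503):
    print(code, status_class(code))
```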

In our example, the status code 200 means the request to https://example.com was completed. It's the server's way of saying, "Everything's good here, your request was a success".

These status codes can also play a role in bot detection and indicate when access is restricted due to bot-like behavior.

Below is a quick rundown of HTTP error codes that mainly occur due to bot detection and authentication issues.
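The sketch below looks up the standard reason phrases for a few codes that are often seen when access is restricted. The selection of codes is an assumption made for illustration; which codes a given site returns when it detects a bot depends on that site.

```python
from http import HTTPStatus

# Assumed selection of codes related to authentication and rate limiting;
# this is illustrative, not an exhaustive or site-specific list.
BLOCK_RELATED_CODES = (401, 403, 407, 429)


def describe(status_code):
    """Return 'code phrase' using the standard library's HTTPStatus enum."""
    status = HTTPStatus(status_code)
    return f"{status.value} {status.phrase}"


for code in BLOCK_RELATED_CODES:
    print(describe(code))
```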

Let’s now write a simple retry mechanism in Python to make HTTP GET requests with the requests library. Network requests sometimes fail because of a network problem or server overload, so when a request fails, we should retry it.

The function send_request_with_basic_retry_mechanism makes HTTP GET requests to a given URL with a basic retry mechanism in place, which retries only when a network or request exception, such as a connection error, is raised. It makes at most max_retries attempts and waits delay seconds between them. If every attempt fails with such an exception, it re-raises the last one.

    import requests
    import time


    def send_request_with_basic_retry_mechanism(url, max_retries=2, delay=1):
        """
        Sends an HTTP GET request to a URL with a basic retry mechanism.

        Parameters:
            url (str): The URL to send the request to.
            max_retries (int): The maximum number of attempts. Default is 2.
            delay (int): The delay (in seconds) between attempts. Default is 1.

        Raises:
            requests.RequestException: Raises the last exception if all retries fail.
        """
        for attempt in range(max_retries):
            try:
                response = requests.get(url)
                print('Response status: ', response.status_code)
                break  # Exit loop if request successful
            except requests.RequestException as error:
                print(f"Attempt {attempt+1} failed:", error)
                if attempt < max_retries - 1:
                    print("Retrying...")
                    time.sleep(delay)  # Wait before retrying
                else:
                    print("Max retries exceeded.")
                    # Re-raise the last exception if max retries reached
                    raise


    send_request_with_basic_retry_mechanism('https://example.com')

Let’s now adapt the basic retry mechanism to handle websites that use bot detection and may block our requests. In such cases, we need to retry the request several times, because a failure may come from a bot detection block but also from a network or server problem.

The following function, send_request_with_advance_retry_mechanism, sends an HTTP GET request to the provided URL with optional retry attempts and retry delay.

    import requests
    import time


    def send_request_with_advance_retry_mechanism(url, max_retries=3, delay=1):
        """
        Sends an HTTP GET request to the specified URL with an advanced retry mechanism.

        Parameters:
            url (str): The URL to send the request to.
            max_retries (int): The maximum number of times to retry the request. Default is 3.
            delay (int): The delay (in seconds) between retries. Default is 1.

        Raises:
            requests.RequestException: Raises the last exception if all retries fail.
        """
        for attempt in range(max_retries):
            try:
                response = requests.get(url)
                # Raise an exception for 4xx or 5xx status codes
                response.raise_for_status()
                print('Response Status Code:', response.status_code)
                break  # Exit loop if request successful
            except requests.RequestException as e:
                # Print error message and attempt number if the request fails
                print(f"Attempt {attempt+1} failed:", e)
                if attempt < max_retries - 1:
                    # Print the retry message and wait before retrying
                    print(f"Retrying in {delay} seconds...")
                    time.sleep(delay)
                else:
                    # If max retries exceeded, print message and re-raise exception
                    print("Max retries exceeded.")
                    raise


    # Example usage
    send_request_with_advance_retry_mechanism('https://httpbin.org/status/404')

The function implements the retry logic as follows: it sends the request and calls raise_for_status(), which raises an exception for any 4xx or 5xx response. When an exception is raised, it waits delay seconds and tries again. After max_retries failed attempts, it re-raises the last exception.

The delay parameter is important because it avoids bombarding the server with multiple requests at close intervals. Waiting gives the server time to recover and makes the traffic look less like a bot. Retrying without a delay can overload the server or slow its responses, which may trigger anti-bot mechanisms.

Here's the corrected code, which stops retrying on a 404 and retries when the response body is shorter than expected:

    import requests
    import time


    def send_request_with_advance_retry_mechanism(url, max_retries=3, delay=1, min_content_length=10):
        """
        Sends an HTTP GET request to the specified URL with an advanced retry mechanism.

        Parameters:
            url (str): The URL to send the request to.
            max_retries (int): The maximum number of times to retry the request. The default is 3.
            delay (int): The delay (in seconds) between retries. Default is 1.
            min_content_length (int): The minimum length of response content to consider valid. The default is 10.

        Raises:
            requests.RequestException: Raises the last exception if all retries fail.
        """
        for attempt in range(max_retries):
            try:
                response = requests.get(url)
                # A 404 is permanent, so stop instead of retrying. This check must
                # come before raise_for_status(), which would raise on a 404.
                if response.status_code == 404:
                    print("404 Error: Not Found")
                    break  # Exit loop for 404 errors
                # Raise an exception for any other 4xx or 5xx status code
                response.raise_for_status()
                # Check if length of the response text is less than the specified minimum content length
                if len(response.text) < min_content_length:
                    print("Response text length is less than specified minimum. Retrying...")
                    time.sleep(delay)
                    continue  # Retry the request
                print('Response Status Code:', response.status_code)
                # If conditions are met, break out of the loop
                break
            except requests.RequestException as e:
                print(f"Attempt {attempt+1} failed:", e)
                if attempt < max_retries - 1:
                    print(f"Retrying in {delay} seconds...")
                    time.sleep(delay)
                else:
                    print("Max retries exceeded.")
                    # Re-raise the last exception if max retries reached
                    raise


    # Example usage
    send_request_with_advance_retry_mechanism('https://httpbin.org/status/404')

You can also implement retries by combining the requests library with urllib3’s Retry class and the HTTPAdapter from requests. This method gives you a clean, efficient retry strategy.

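Implementation steps: create a Retry object with the total number of retries, the status codes to retry on, and a backoff factor; pass it to an HTTPAdapter; mount that adapter on a requests.Session for both http:// and https://; then send your requests through the session.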

Example code snippet:

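    import requests
    from requests.adapters import HTTPAdapter
    from urllib3.util.retry import Retry

    # Retry up to 5 times on the listed server errors, with exponential backoff
    retry_strategy = Retry(
        total=5,
        status_forcelist=[500, 502, 503, 504],
        backoff_factor=1
    )

    adapter = HTTPAdapter(max_retries=retry_strategy)

    # Every request sent through this session uses the retry strategy
    session = requests.Session()
    session.mount("http://", adapter)
    session.mount("https://", adapter)

    response = session.get("https://example.com")
    print(response.status_code)

Benefits of this approach: retries and backoff are handled inside the session, so every request sent through it follows the same policy without any extra loop code.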

This makes it a reliable solution for managing unstable network conditions or flaky servers.
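If the same policy is needed in several places, the setup above can be wrapped in a small factory. The sketch below is one possible arrangement; `build_retry_session` is a hypothetical helper name, and the defaults simply copy the values used in the snippet above. It only inspects the configured policy and does not send a request.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def build_retry_session(total=5, backoff_factor=1,
                        status_forcelist=(500, 502, 503, 504)):
    """Return a requests.Session whose adapters retry per the given policy."""
    retry_strategy = Retry(
        total=total,
        status_forcelist=list(status_forcelist),
        backoff_factor=backoff_factor,
    )
    adapter = HTTPAdapter(max_retries=retry_strategy)
    session = requests.Session()
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    return session


session = build_retry_session(total=3, backoff_factor=0.5)
# Inspect the policy attached to the HTTPS adapter (no request is sent here).
policy = session.get_adapter("https://example.com").max_retries
print(policy.total, policy.backoff_factor)
```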

You can also build your own retry function that wraps requests.get to control retry behavior manually. This gives you full flexibility over retries and error handling.

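Here’s what your function will do: retry on the status codes in status_forcelist and on connection errors, wait backoff_factor * (2 ** (attempt - 1)) seconds between attempts, return the last response once the retries are used up, and re-raise the connection error if the final attempt fails.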

Example code:

    import time
    import requests


    def retry_requests_python(url, total_retries=3, status_forcelist=None, backoff_factor=1, **kwargs):
        if status_forcelist is None:
            status_forcelist = [500, 502, 503, 504]
        for attempt in range(1, total_retries + 1):
            try:
                response = requests.get(url, **kwargs)
                if response.status_code not in status_forcelist:
                    return response
                else:
                    if attempt == total_retries:
                        return response
                    sleep_time = backoff_factor * (2 ** (attempt - 1))
                    time.sleep(sleep_time)
            except requests.ConnectionError:
                if attempt == total_retries:
                    raise
                sleep_time = backoff_factor * (2 ** (attempt - 1))
                time.sleep(sleep_time)

Using this custom function, you control every aspect of Python requests retry logic. You can tune delays, retry codes, and handle exceptions inline.
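One way to check a retry loop like this without touching the network is to inject the request function and the sleep function. The sketch below is a hypothetical variant that mirrors only the status-code branch of the function above; `retry_call`, `FakeResponse`, and `flaky_get` are illustrative names and not part of requests.

```python
import time


class FakeResponse:
    """Stand-in for requests.Response; only status_code is modeled."""

    def __init__(self, status_code):
        self.status_code = status_code


def retry_call(get, url, total_retries=3,
               status_forcelist=(500, 502, 503, 504),
               backoff_factor=1, sleep=time.sleep):
    """Call get(url) until the status is acceptable or retries run out."""
    for attempt in range(1, total_retries + 1):
        response = get(url)
        if response.status_code not in status_forcelist or attempt == total_retries:
            return response
        sleep(backoff_factor * (2 ** (attempt - 1)))


# Fake getter: answers 503 twice, then 200.
statuses = iter([503, 503, 200])


def flaky_get(url):
    return FakeResponse(next(statuses))


waits = []  # record the requested delays instead of actually sleeping
result = retry_call(flaky_get, "https://example.com", sleep=waits.append)
print(result.status_code, waits)
```

Passing `waits.append` as the sleep function keeps the check instant while still recording which delays the loop asked for.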

To further improve retry success, integrate Proxy-Seller proxies.

This combination helps avoid IP bans, increases scraping efficiency, and makes retry handling smoother. By combining your manual retry wrapper with Proxy-Seller’s proxy services, you build a robust, flexible retry solution for challenging network environments.

For certain errors like 429 Too Many Requests, using rotating proxies can help distribute your requests and avoid rate limiting.
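A minimal sketch of rotation, assuming you already have a list of proxy URLs: each retry takes the next proxy from a repeating cycle. The proxy hosts below are placeholders and `proxy_rotation` is a hypothetical helper; managed rotating proxies often handle this on the provider side instead.

```python
from itertools import cycle

# Placeholder proxy endpoints; replace with real credentials and hosts.
PROXY_URLS = [
    "http://USER:PASS@proxy1.example:8000",
    "http://USER:PASS@proxy2.example:8000",
    "http://USER:PASS@proxy3.example:8000",
]


def proxy_rotation(proxy_urls):
    """Yield requests-style proxies dicts, cycling through proxy_urls forever."""
    for proxy_url in cycle(proxy_urls):
        yield {"http": proxy_url, "https": proxy_url}


rotation = proxy_rotation(PROXY_URLS)
for attempt in range(4):
    proxies = next(rotation)
    # In a real retry loop this dict would be passed to the request,
    # for example requests.get(url, proxies=proxies).
    print(attempt + 1, proxies["https"])
```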

The code below combines the advanced retry strategy with proxies. Using high-quality web scraping proxies is also important: they should have a good algorithm for proxy rotation and a reliable pool.

    import requests
    import time


    def send_request_with_advance_retry_mechanism(url, max_retries=3, delay=1, min_content_length=10):
        """
        Sends an HTTP GET request to the specified URL with an advanced retry mechanism.

        Parameters:
            url (str): The URL to send the request to.
            max_retries (int): The maximum number of times to retry the request. Default is 3.
            delay (int): The delay (in seconds) between retries. The default is 1.
            min_content_length (int): The minimum length of response content to consider valid. The default is 10.

        Raises:
            requests.RequestException: Raises the last exception if all retries fail.
        """
        proxies = {
            "http": "http://USER:PASS@HOST:PORT",
            "https": "https://USER:PASS@HOST:PORT"
        }
        for attempt in range(max_retries):
            try:
                # Note: verify=False disables TLS certificate verification
                response = requests.get(url, proxies=proxies, verify=False)
                # A 404 is permanent, so stop instead of retrying. This check must
                # come before raise_for_status(), which would raise on a 404.
                if response.status_code == 404:
                    print("404 Error: Not Found")
                    break  # Exit loop for 404 errors
                # Raise an exception for any other 4xx or 5xx status code
                response.raise_for_status()
                # Check if the length of the response text is less than the minimum content length
                if len(response.text) < min_content_length:
                    print(f"Response text length is less than {min_content_length} characters. Retrying...")
                    time.sleep(delay)
                    continue  # Retry the request
                print('Response Status Code:', response.status_code)
                # If conditions are met, break out of the loop
                break
            except requests.RequestException as e:
                print(f"Attempt {attempt+1} failed:", e)
                if attempt < max_retries - 1:
                    print(f"Retrying in {delay} seconds...")
                    time.sleep(delay)
                else:
                    print("Max retries exceeded.")
                    # Re-raise the last exception if max retries reached
                    raise


    send_request_with_advance_retry_mechanism('https://httpbin.org/status/404')

Request retries in Python are crucial for effective web scraping. The methods we've discussed for managing retries can help prevent blocking and improve the efficiency and reliability of data collection. Implementing these techniques will make your web scraping scripts more robust and less susceptible to detection by bot protection systems.
