Business intelligence, research, and analysis are just a few of the possibilities that web scraping opens up. A large retailer like Walmart publishes well-structured product pages from which we can collect the necessary information. We can scrape Walmart data, such as product name, price, and review info, from its product pages using various scraping techniques.

In this article, we are going to break down the process of how to scrape Walmart data. We will be using requests to send HTTP requests and lxml to parse the returned HTML documents.

When you scrape Walmart data, you tap into a wealth of information crucial for various business needs. Here's what you can scrape and how each data type serves your goals:

| Data Type | Key Details Scraped | Business Goal |
|---|---|---|
| Product details | Name, brand, SKU, UPC, specifications, and category path. | Catalog enrichment and accurate product listings. |
| Pricing info | Current and previous prices, unit prices, promo labels. | Dynamic pricing and margin analysis. |
| Stock status | Inventory availability and store-level stock. | Supply chain insights and demand forecasting. |
| Customer reviews | Text, star rating, verified badges, images, and review counts. | Sentiment analysis and understanding customer experience. |
| Media assets | Images, 360° views, spec videos. | Content creation and better merchandising. |
| Seller details | Seller name/ID, ratings, shipping rates, return policies, and fulfillment type. | Vendor assessment and partnership decisions. |

You’ll want to scrape product data from Walmart regularly, with the refresh frequency tailored to the data type.

To keep your data clean and reliable, use Python tools like pandas for data manipulation and cleaning. Validate JSON responses with jsonschema to make sure your data structure remains consistent. Custom Python scripts can remove duplicates, normalize formats, and handle missing values effectively.
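The cleaning steps above can be sketched with the standard library alone, before reaching for pandas. This is a minimal sketch, assuming records shaped like the `title`/`price`/`review_details` dictionaries used by the scraper in this article; the helper names `normalize_price` and `clean_records` and the sample rows are hypothetical.

```python
import re
from decimal import Decimal, InvalidOperation


def normalize_price(raw):
    """Turn a scraped price string such as 'Now $1,299.00' into a Decimal, or None."""
    if not raw:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    try:
        return Decimal(match.group(0).replace(",", ""))
    except InvalidOperation:
        return None


def clean_records(records):
    """Remove duplicates, normalize formats, and handle missing values."""
    seen = set()
    cleaned = []
    for record in records:
        title = " ".join((record.get("title") or "").split())  # collapse whitespace
        if not title:
            continue  # a row without a title cannot be identified, so drop it
        key = title.lower()
        if key in seen:
            continue  # duplicate product (case-insensitive title match)
        seen.add(key)
        cleaned.append({
            "title": title,
            "price": normalize_price(record.get("price")),
            "review_details": (record.get("review_details") or "").strip() or None,
        })
    return cleaned


# Made-up rows: a messy record, its duplicate, and a row with no title.
sample = [
    {"title": "  Desk   Lamp ", "price": "Now $1,299.00", "review_details": "4.5 stars"},
    {"title": "desk lamp", "price": "$1,299.00", "review_details": ""},
    {"title": "", "price": "$5.00", "review_details": None},
]
print(clean_records(sample))
```

Storing prices as `Decimal` rather than `float` avoids rounding surprises when the values are later compared or summed.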

When it comes to scraping product data from multiple retail sites, Python is among the most effective options available, and it integrates well into extraction projects.

Using Python for retail projects not only simplifies the technical side but also increases the efficiency and scope of the analysis, which makes it a strong choice for analysts who want a deeper understanding of the market. These qualities are especially useful when you scrape Walmart data.

Now, let’s begin with building a Walmart web scraping tool.

To start off, make sure Python is installed on your computer. The required libraries can be downloaded using pip:

    pip install requests
    pip install lxml
    pip install urllib3

Now let's import the libraries we need:

    import requests
    from lxml import html
    import csv
    import random
    import urllib3
    import ssl

The list of product URLs to scrape can be defined like this:

    product_urls = [
        'link with https',
        'link with https',
        'link with https'
    ]

When web scraping Walmart, it is crucial to send realistic HTTP headers, especially the User-Agent header, so that requests resemble those of an actual browser. Rotating proxy servers also help to avoid the site's anti-bot systems. The example below defines a set of User-Agent strings and a list of proxies that use authorization by IP address.

    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    ]

    proxy = [
        '<ip>:<port>',
        '<ip>:<port>',
        '<ip>:<port>',
    ]

Request headers should be set so that each request appears to come from a user’s browser, which helps a lot when trying to scrape Walmart data. Here’s an example:

    headers = {
        'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
        'accept-language': 'en-IN,en;q=0.9',
        'dnt': '1',
        'priority': 'u=0, i',
        'sec-ch-ua': '"Not/A)Brand";v="8", "Chromium";v="126", "Google Chrome";v="126"',
        'sec-ch-ua-mobile': '?0',
        'sec-ch-ua-platform': '"Linux"',
        'sec-fetch-dest': 'document',
        'sec-fetch-mode': 'navigate',
        'sec-fetch-site': 'none',
        'sec-fetch-user': '?1',
        'upgrade-insecure-requests': '1',
    }

The first step is to create a structure that will hold the product information.

    product_details = []

Iterating over the URLs works as follows: for every URL, a GET request is sent with a randomly chosen User-Agent and proxy. Once the HTML response is returned, it is parsed for the product details, including the name, price, and reviews. These details are saved in a dictionary, which is then appended to the list created earlier.

for url in product_urls:

headers['user-agent'] = random.choice(user_agents)

proxies = {

'http': f'http://{random.choice(proxy)}',

'https': f'http://{random.choice(proxy)}',

}

try:

# Send an HTTP GET request to the URL

response = requests.get(url=url, headers=headers, proxies=proxies, verify=False)

print(response.status_code)

response.raise_for_status()

except requests.exceptions.RequestException as e:

print(f'Error fetching data: {e}')

# Parse the HTML content using lxml

parser = html.fromstring(response.text)

# Extract product title

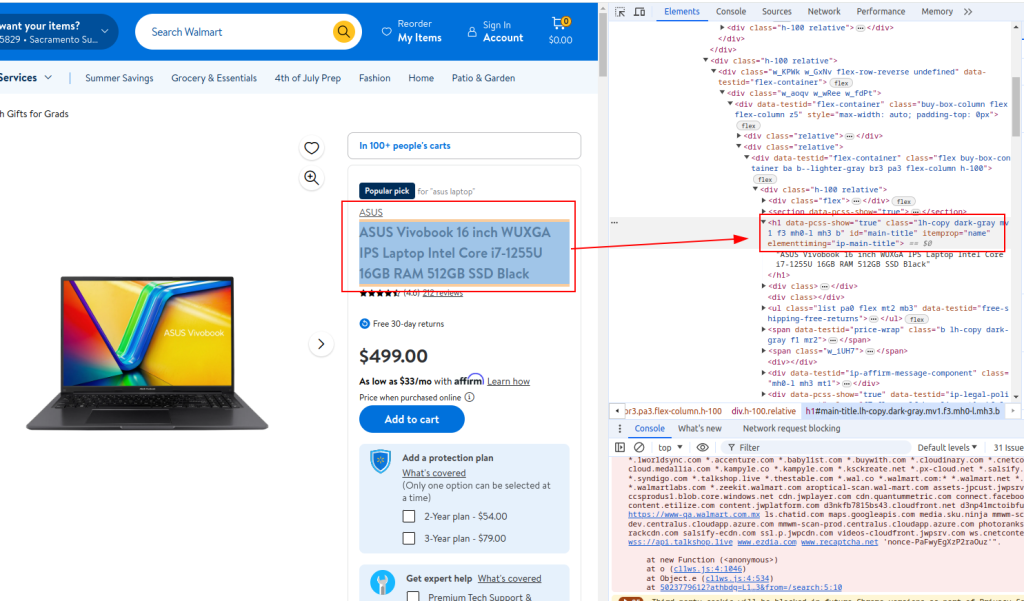

title = ''.join(parser.xpath('//h1[@id="main-title"]/text()'))

# Extract product price

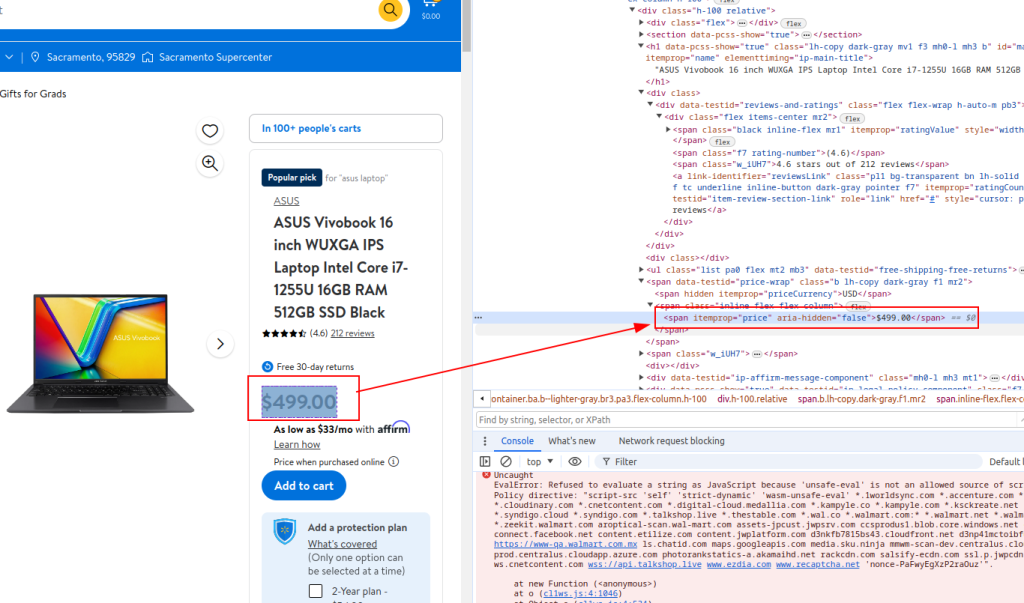

price = ''.join(parser.xpath('//span[@itemprop="price"]/text()'))

# Extract review details

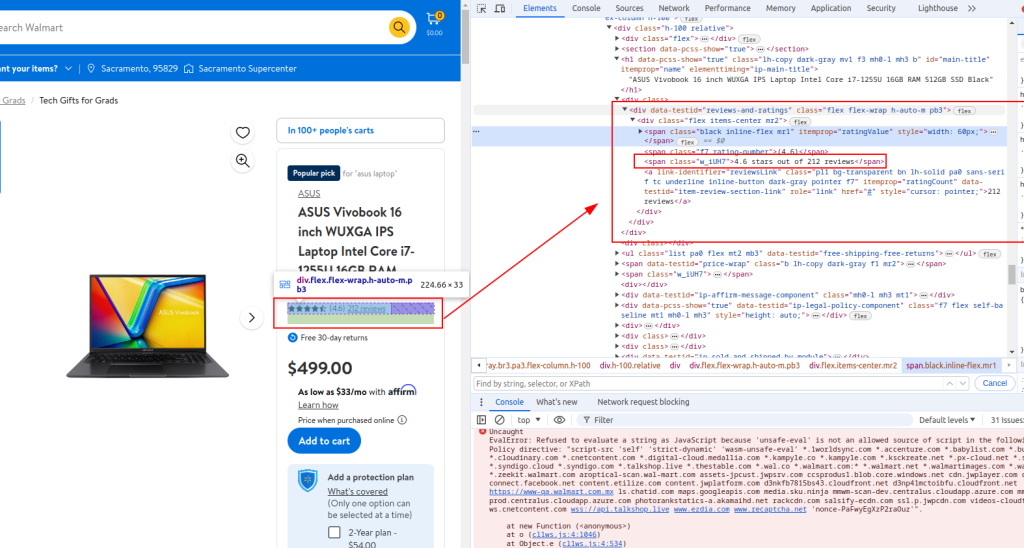

review_details = ''.join(parser.xpath('//div[@data-testid="reviews-and-ratings"]/div/span[@class="w_iUH7"]/text()'))

# Store extracted details in a dictionary

product_detail = {

'title': title,

'price': price,

'review_details': review_details

}

# Append product details to the list

product_details.append(product_detail)Title:

Price:

Review detail:

Finally, the collected details are written to a CSV file:

    with open('walmart_products.csv', 'w', newline='', encoding='utf-8') as csvfile:
        fieldnames = ['title', 'price', 'review_details']
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        writer.writeheader()
        for product_detail in product_details:
            writer.writerow(product_detail)

The complete Python script for scraping Walmart is provided below, with comments that explain each section.

    import requests
    from lxml import html
    import csv
    import random
    import urllib3
    import ssl

    # Swap the default HTTPS context used by stdlib clients (private ssl attributes)
    ssl._create_default_https_context = ssl._create_stdlib_context
    # Silence the warnings triggered by verify=False below
    urllib3.disable_warnings()

    # List of product URLs to scrape Walmart data
    product_urls = [
        'link with https',
        'link with https',
        'link with https'
    ]

    # Randomized User-Agent strings for anonymity
    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    ]

    # Proxy list for IP rotation
    proxy = [
        '<ip>:<port>',
        '<ip>:<port>',
        '<ip>:<port>',
    ]

    # Headers to mimic browser requests
    headers = {
        'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
        'accept-language': 'en-IN,en;q=0.9',
        'dnt': '1',
        'priority': 'u=0, i',
        'sec-ch-ua': '"Not/A)Brand";v="8", "Chromium";v="126", "Google Chrome";v="126"',
        'sec-ch-ua-mobile': '?0',
        'sec-ch-ua-platform': '"Linux"',
        'sec-fetch-dest': 'document',
        'sec-fetch-mode': 'navigate',
        'sec-fetch-site': 'none',
        'sec-fetch-user': '?1',
        'upgrade-insecure-requests': '1',
    }

    # Initialize an empty list to store product details
    product_details = []

    # Loop through each product URL
    for url in product_urls:
        headers['user-agent'] = random.choice(user_agents)
        # Pick one proxy per request and use it for both schemes
        chosen_proxy = random.choice(proxy)
        proxies = {
            'http': f'http://{chosen_proxy}',
            'https': f'http://{chosen_proxy}',
        }
        try:
            # Send an HTTP GET request to the URL
            response = requests.get(url=url, headers=headers, proxies=proxies, verify=False, timeout=30)
            print(response.status_code)
            response.raise_for_status()
        except requests.exceptions.RequestException as e:
            print(f'Error fetching data: {e}')
            # Skip this URL: there is no usable response to parse
            continue

        # Parse the HTML content using lxml
        parser = html.fromstring(response.text)
        # Extract product title
        title = ''.join(parser.xpath('//h1[@id="main-title"]/text()'))
        # Extract product price
        price = ''.join(parser.xpath('//span[@itemprop="price"]/text()'))
        # Extract review details
        review_details = ''.join(parser.xpath('//div[@data-testid="reviews-and-ratings"]/div/span[@class="w_iUH7"]/text()'))

        # Store extracted details in a dictionary
        product_detail = {
            'title': title,
            'price': price,
            'review_details': review_details
        }
        # Append product details to the list
        product_details.append(product_detail)

    # Write the extracted data to a CSV file
    with open('walmart_products.csv', 'w', newline='', encoding='utf-8') as csvfile:
        fieldnames = ['title', 'price', 'review_details']
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        writer.writeheader()
        for product_detail in product_details:
            writer.writerow(product_detail)

For those using Python to work with a Walmart scraping API, it is crucial to develop robust methods for collecting Walmart prices and reviews. Such an API provides a direct pipeline to extensive product data and facilitates real-time analytics on pricing and customer feedback.

These strategies improve the precision and scope of the information collected, allowing businesses to adapt quickly to market changes and consumer trends. By applying the Walmart API strategically in Python, companies can streamline their data gathering and support comprehensive market analysis and informed decision-making.

Scraping Walmart data is far from simple because of its robust anti-bot defenses. Walmart uses advanced technologies like Akamai Bot Manager and PerimeterX, which detect automated traffic through several methods.

Walmart’s modern site uses Next.js with SSR, hydration, lazy loading, and obfuscated CSS class names. This complicates DOM parsing and requires adaptive scraping logic.
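Because generated class names such as `w_iUH7` can change between deployments, one common way to cope with a Next.js site is to read the JSON state that Next.js pages usually embed in a `<script id="__NEXT_DATA__">` tag instead of relying on CSS classes. The sketch below assumes the page contains such a tag; the sample HTML and the key path inside the JSON are invented for illustration and will not match Walmart's real payload.

```python
import json
from html.parser import HTMLParser


class NextDataParser(HTMLParser):
    """Collects the text inside <script id="__NEXT_DATA__">."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("id") == "__NEXT_DATA__":
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self._chunks.append(data)

    @property
    def payload(self):
        return "".join(self._chunks)


def extract_next_data(page_html):
    """Return the embedded JSON state as a dict, or None if it is absent or invalid."""
    parser = NextDataParser()
    parser.feed(page_html)
    parser.close()
    if not parser.payload.strip():
        return None
    try:
        return json.loads(parser.payload)
    except json.JSONDecodeError:
        return None


# Hypothetical page: the structure under "props" is made up for this example.
sample_html = """
<html><body>
<h1 id="main-title">Desk Lamp</h1>
<script id="__NEXT_DATA__" type="application/json">
{"props": {"pageProps": {"product": {"name": "Desk Lamp", "price": 19.99}}}}
</script>
</body></html>
"""

data = extract_next_data(sample_html)
product = data["props"]["pageProps"]["product"]
print(product["name"], product["price"])
```

On a real page, inspect the parsed dictionary first to find where the product fields live, and treat that path as something that may move when the site is redeployed.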

Session management is another hurdle. Use Python libraries like requests.Session or httpx.AsyncClient to maintain cookies and session persistence across requests.
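As a minimal sketch of the session approach with `requests`, the helper below builds one `Session` that keeps cookies between calls, sends the same browser-like headers every time, and reuses pooled connections. The function name `build_session`, the retry settings, and the pool sizes are illustrative assumptions, not values known to suit Walmart.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def build_session(user_agent):
    """Create a Session with shared headers, cookie persistence, and pooled connections."""
    session = requests.Session()
    session.headers.update({
        "User-Agent": user_agent,
        "Accept-Language": "en-US,en;q=0.9",
    })
    # Retry transient failures with exponential backoff (assumed settings).
    retry = Retry(total=3, backoff_factor=1, status_forcelist=[429, 500, 502, 503, 504])
    adapter = HTTPAdapter(pool_connections=10, pool_maxsize=10, max_retries=retry)
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session


session = build_session(
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
)
# Cookies set by any response are stored in session.cookies and sent on later requests.
# response = session.get("https://www.walmart.com", timeout=30)
```

Reusing one session per proxy, rather than creating a new one for every request, keeps the cookie jar consistent with the IP address the site sees.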

When JavaScript-heavy pages block you, consider headless browsers such as Playwright or Puppeteer (via Pyppeteer). These tools can render pages fully and execute JavaScript, making scraping more reliable.

Here is a quick checklist to handle Walmart scraping challenges effectively:

When scaling your Walmart scraping, avoid using just one IP. Multiple requests from a single IP trigger rate limits and blacklists. Residential proxies solve this by offering real ISP-assigned IPs that look like normal users. You can rotate these IPs to stay under Walmart’s radar.

Benefits of Residential IPs:

Data center proxies are faster but easier to block. Mobile proxies are harder to get but offer high anonymity. Residential proxies strike a good balance for Walmart scraping.

Let’s see how to integrate residential proxies like Decodo:

Proxy-Seller proxies offer 115 million+ ethically sourced residential IPs across 195+ locations. This lets you geo-target Walmart content globally. Built-in IP rotation and session stickiness keep your scraping smooth.

Here’s an example of rotating proxies with requests; the same approach carries over to curl-cffi, whose API mirrors that of requests:

    from dotenv import load_dotenv
    import os
    import random
    import requests

    load_dotenv()

    # Comma-separated list of proxies from the PROXY_LIST environment variable
    proxy_list = [p.strip() for p in os.getenv("PROXY_LIST", "").split(",") if p.strip()]
    if not proxy_list:
        raise RuntimeError("PROXY_LIST is empty or not set")

    def get_proxy():
        # Pick a random proxy for the next request
        return random.choice(proxy_list)

    session = requests.Session()
    proxy = get_proxy()
    proxies = {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    response = session.get("https://www.walmart.com", proxies=proxies, headers={"User-Agent": "Mozilla/5.0"}, timeout=30)

Proxy health monitoring and switching after failures are essential. Also, keep an eye on concurrency limits and use connection pooling to optimize performance.
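Health monitoring can be as simple as counting consecutive failures per proxy and benching a proxy once it crosses a threshold. The class below is a sketch under that assumption; `ProxyPool`, `max_failures`, and the example addresses are hypothetical, and a real deployment would also re-test benched proxies after a cool-down.

```python
import random


class ProxyPool:
    """Rotates proxies and stops handing out ones that keep failing."""

    def __init__(self, proxies, max_failures=3):
        self._failures = {proxy: 0 for proxy in proxies}
        self._max_failures = max_failures

    def healthy(self):
        """Proxies whose consecutive failure count is below the threshold."""
        return [p for p, count in self._failures.items() if count < self._max_failures]

    def get(self):
        """Return a random healthy proxy, or raise if none are left."""
        candidates = self.healthy()
        if not candidates:
            raise RuntimeError("no healthy proxies left")
        return random.choice(candidates)

    def report_success(self, proxy):
        self._failures[proxy] = 0  # a success resets the failure streak

    def report_failure(self, proxy):
        self._failures[proxy] += 1


# Example addresses from a range reserved for documentation (192.0.2.0/24).
pool = ProxyPool(["192.0.2.10:8080", "192.0.2.11:8080"], max_failures=2)
pool.report_failure("192.0.2.10:8080")
pool.report_failure("192.0.2.10:8080")
print(pool.healthy())
```

In a request loop, call `pool.get()` before each request, then `report_success` or `report_failure` depending on whether the request raised an exception.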

Proxy-Seller leads in proxy solutions for scraping Walmart data at scale because they provide:

With Proxy-Seller, you can securely configure your proxy credentials, automate proxy rotation, and maintain stable Walmart data extraction at any scale. Their expert support helps you tackle setup or integration issues fast, making them an ideal choice whether you scrape product data from Walmart as an individual developer or run enterprise-level projects.

In this tutorial, we explained how to use Python libraries to scrape Walmart data and save it to a CSV file for later analysis. The script is basic and serves as a starting point that you can modify to make the scraping process more efficient. Improvements may include adding random intervals between requests to simulate human browsing, rotating user agents and proxies to mask the bot, and implementing more advanced error handling to deal with scraping interruptions or failures.
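Two of those improvements, random delays and retry-based error handling, can be sketched together. The helper below is an illustration only: `fetch_with_retries`, its parameters, and the delay bounds are assumptions, and `fetch` stands for any callable that performs the request and raises on failure (for example, a wrapper around `requests.get`).

```python
import random
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fetch(url), retrying failed attempts with exponential backoff plus random jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:  # in real code, catch the HTTP client's own exception type
            if attempt == max_attempts:
                raise
            # Wait 1x, 2x, 4x ... the base delay (capped), plus up to 50% random jitter on top.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay += random.uniform(0, delay / 2)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)


# Demonstration with a fake fetcher that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"<html>{url}</html>"


waits = []  # record the delays instead of actually sleeping
page = fetch_with_retries(flaky_fetch, "https://example.com/item", sleep=waits.append)
print(page, len(waits))
```

Passing `sleep` as a parameter keeps the helper easy to test; in production the default `time.sleep` applies the real pauses.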
