An advertisement platform like Craigslist remains relevant in the digital age. A scraper helps automate the extraction of information from listings by collecting data directly from the website. With flexible and powerful libraries such as BeautifulSoup and Requests, data collection can be done efficiently. This tutorial focuses on Craigslist scraping with Python, covering BeautifulSoup, Requests, and proxy rotation to reduce the chance of bot detection.

Now, we are going to break down how to scrape Craigslist step by step: sending HTTP requests to a specific webpage, parsing the page, extracting the desired data, and saving it in a predetermined format.

The following packages must be installed:

    pip install beautifulsoup4
    pip install requests

To grab data from web pages, you first need to send HTTP requests to the URLs you want to scrape. Using the requests library, you can send GET requests to fetch the HTML content, which you can then process to extract the information you need.

    import requests

    # List of Craigslist URLs to scrape
    urls = [
        "link",
        "link"
    ]

    for url in urls:
        # Send a GET request to the URL
        response = requests.get(url)

        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            # Extract HTML content from the response
            html_content = response.text
        else:
            # If the request failed, print an error message with the status code
            print(f"Failed to retrieve {url}. Status code: {response.status_code}")
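The loop above overwrites `html_content` on every iteration and sends requests without a timeout. One way to address both is a small helper, sketched below under stated assumptions: `fetch_html`, `fetch_all`, and the `get` parameter are hypothetical names introduced for illustration, not part of Requests. The helper relies only on the `status_code` and `text` attributes already used above and on the `timeout` keyword that `requests.get` accepts.

```python
from typing import Callable, Optional


def fetch_html(url: str, get: Callable, timeout: float = 10.0) -> Optional[str]:
    """Return the page HTML, or None when the status code is not 200.

    `get` is any callable shaped like requests.get (hypothetical parameter,
    introduced so the helper can be exercised without network access).
    """
    response = get(url, timeout=timeout)
    if response.status_code == 200:
        return response.text
    print(f"Failed to retrieve {url}. Status code: {response.status_code}")
    return None


def fetch_all(urls, get: Callable) -> dict:
    """Map each URL to its HTML, skipping the URLs that failed."""
    pages = {}
    for url in urls:
        html = fetch_html(url, get)
        if html is not None:
            pages[url] = html
    return pages
```

With Requests installed, calling `fetch_all(urls, requests.get)` should return a dictionary of HTML keyed by URL, so every successfully fetched page is kept for the parsing step.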

With BeautifulSoup, you can go through the HTML and pick out the parts you need from Craigslist. It lets you find tags, get text, and grab things like links or prices. It’s a simple way to pull useful info from messy web pages.

    from bs4 import BeautifulSoup

    # Iterate through each URL in the list
    for url in urls:
        # Send a GET request to the URL
        response = requests.get(url)

        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            # Extract HTML content from the response
            html_content = response.text

            # Parse the HTML content using BeautifulSoup
            soup = BeautifulSoup(html_content, 'html.parser')
        else:
            # If the request failed, print an error message with the status code
            print(f"Failed to retrieve {url}. Status code: {response.status_code}")
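To see what finding tags, getting text, and grabbing links look like in practice, here is a minimal sketch that parses an inline HTML string instead of a live page. The markup in `SAMPLE_HTML` is made up for illustration and only borrows the element names used later in this tutorial; real Craigslist pages may be structured differently.

```python
from bs4 import BeautifulSoup

# Made-up HTML fragment for illustration; real Craigslist markup may differ.
SAMPLE_HTML = """
<html><body>
  <span id="titletextonly">Oak desk</span>
  <span class="price">$120</span>
  <a href="/about">about</a>
  <a href="/help">help</a>
</body></html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find() returns the first matching tag, or None when nothing matches
title = soup.find("span", id="titletextonly").get_text(strip=True)
price = soup.find("span", class_="price").get_text(strip=True)

# find_all() returns every match; tag attributes are read like dict keys
links = [a["href"] for a in soup.find_all("a")]

# This element is not in the sample, so find() gives back None
missing = soup.find("section", id="postingbody")

print(title, price, links, missing)
```

The `missing` lookup shows the case to watch for: when an element is absent, `find` returns `None` rather than raising an error.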

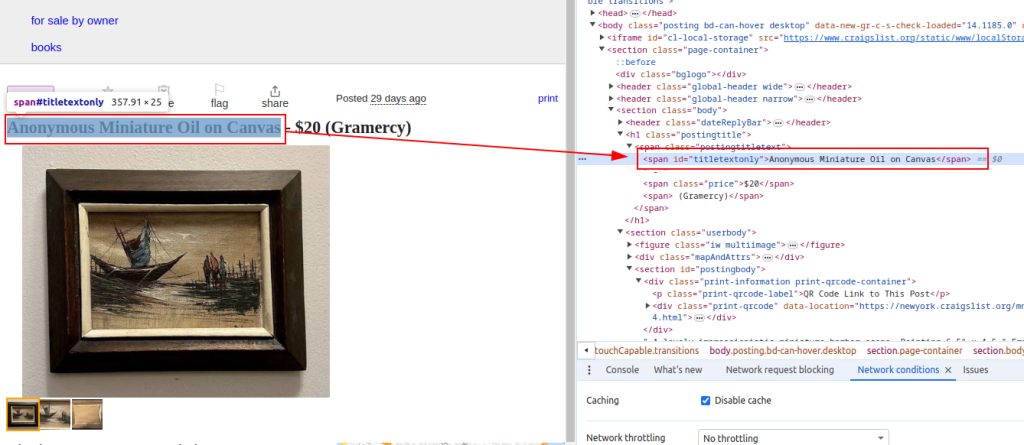

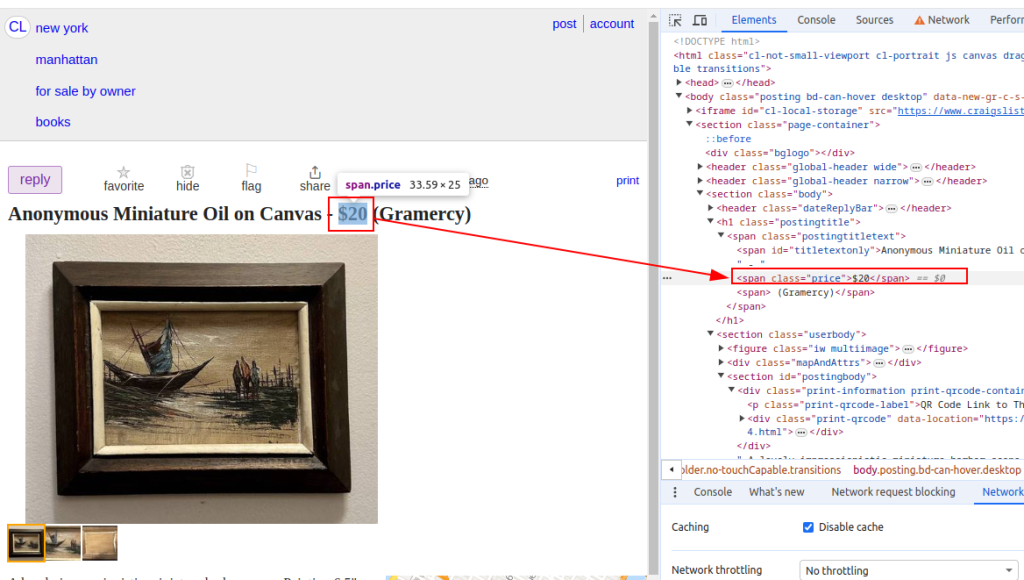

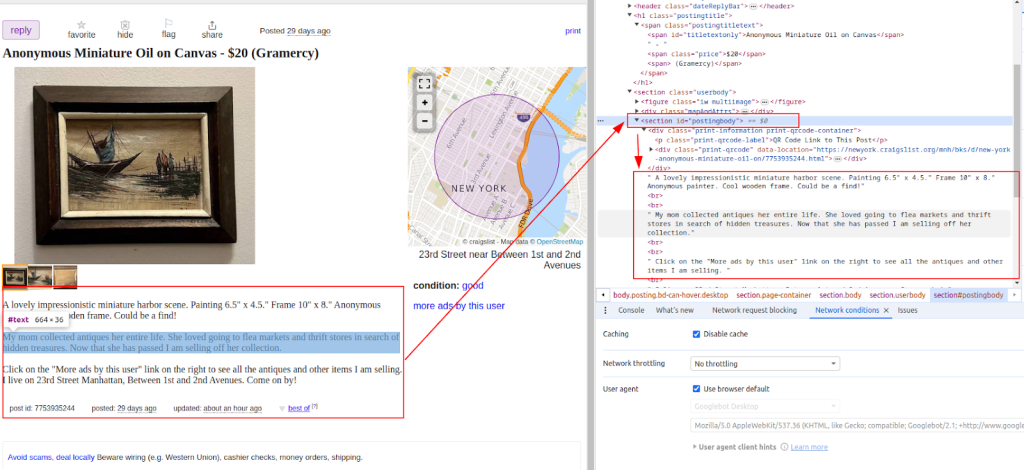

After fetching the HTML content, the next step is to parse it with the BeautifulSoup library. Its search functions, such as find, let you extract listing data like item titles, prices, and descriptions. It’s like having a tool that helps you sift through the messy code to find the useful bits quickly and efficiently.

    from bs4 import BeautifulSoup

    # Iterate through each URL in the list
    for url in urls:
        # Send a GET request to the URL
        response = requests.get(url)

        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            # Extract HTML content from the response
            html_content = response.text

            # Parse the HTML content using BeautifulSoup
            soup = BeautifulSoup(html_content, 'html.parser')

            # Extracting specific data points
            # Find the title of the listing
            title = soup.find('span', id='titletextonly').text.strip()

            # Find the price of the listing
            price = soup.find('span', class_='price').text.strip()

            # Find the description of the listing (may contain multiple paragraphs);
            # get_text joins the pieces into one string, as in the complete script
            description = soup.find('section', id='postingbody').get_text(strip=True, separator='\n')

            # Print extracted data (for demonstration purposes)
            print(f"Title: {title}")
            print(f"Price: {price}")
            print(f"Description: {description}")
        else:
            # If the request fails, print an error message with the status code
            print(f"Failed to retrieve {url}. Status code: {response.status_code}")

Title:

Price:

Description:
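The extraction code calls `.text` directly on the result of `soup.find(...)`. When a listing has no price or body, `find` returns `None` and that call raises `AttributeError`. The sketch below shows one way to guard against this and to turn a price string into a number. `text_or_default` and `parse_price` are hypothetical helper names, and the price format (a dollar sign with optional thousands separators) is an assumption.

```python
import re
from typing import Optional


def text_or_default(node, default: str = "") -> str:
    """Return the stripped text of a BeautifulSoup tag, or `default` if it is None."""
    if node is None:
        return default
    return node.get_text(strip=True)


def parse_price(raw: str) -> Optional[int]:
    """Turn a price string such as '$1,200' into the integer 1200.

    Only the whole-number part is kept; returns None when no digits are found.
    """
    match = re.search(r"\d[\d,]*", raw)
    if match is None:
        return None
    return int(match.group().replace(",", ""))
```

In the loop, the title lookup could then be written as `text_or_default(soup.find('span', id='titletextonly'))`, and the price as `parse_price(text_or_default(soup.find('span', class_='price')))`.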

After extracting the Craigslist data, store it in CSV format to simplify later analysis and to make it easy to use in other applications.

    import csv

    # Define the CSV file path and field names
    csv_file = 'craigslist_data.csv'
    fieldnames = ['Title', 'Price', 'Description']

    # Writing data to CSV file
    # (scraped_data is the list of dictionaries built while scraping;
    # it is defined in the complete script at the end of this tutorial)
    try:
        # Open the CSV file in write mode with UTF-8 encoding
        with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
            # Create a CSV DictWriter object with the specified fieldnames
            writer = csv.DictWriter(file, fieldnames=fieldnames)

            # Write the header row in the CSV file
            writer.writeheader()

            # Iterate through each item in the scraped_data list
            for item in scraped_data:
                # Write each item as a row in the CSV file
                writer.writerow(item)

        # Print a success message after writing data to the CSV file
        print(f"Data saved to {csv_file}")
    except IOError:
        # Print an error message if an IOError occurs while writing to the CSV file
        print(f"Error occurred while writing data to {csv_file}")
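The CSV snippet expects `scraped_data` to be a list of dictionaries whose keys match `fieldnames`. The following sketch uses made-up sample rows and an in-memory buffer (so no file is left behind) to show that shape and to check that descriptions containing newlines, commas, and quotes survive a write and read cycle.

```python
import csv
import io

# Hypothetical sample rows in the shape the CSV snippet expects:
# one dict per listing, with keys matching `fieldnames`.
scraped_data = [
    {"Title": "Oak desk", "Price": "$120", "Description": "Solid wood.\nPickup only."},
    {"Title": "Road bike", "Price": "$300", "Description": 'Size "M", barely used'},
]
fieldnames = ["Title", "Price", "Description"]

# Write to an in-memory buffer instead of a file on disk
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=fieldnames)
writer.writeheader()
writer.writerows(scraped_data)

# Read the rows back to confirm that newlines, commas, and quotes survive
buffer.seek(0)
rows_read_back = list(csv.DictReader(buffer))
print(rows_read_back == scraped_data)
```

The csv module quotes fields as needed, which is why multi-line descriptions do not break the row structure.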

If you want to automatically collect listings from the Craigslist website, one of the easiest ways is to use an unofficial API through the python-craigslist library. It lets you query the site and filter results by category, city, price, keywords, and more.

Start by installing the library:

pip install python-craigslist

Here’s a simple example to search for apartment rentals in New York:

    from craigslist import CraigslistHousing

    cl_h = CraigslistHousing(site='newyork', category='apa', filters={'max_price': 2000})

    for result in cl_h.get_results(limit=10):
        print(result['name'], result['price'], result['url'])

This code fetches the first 10 listings from the apartments/housing for rent section in New York City with a price of at most $2,000.

The library also supports categories like jobs, cars, and for-sale items, along with a wide range of filters. It's a great tool for quickly building Python-based tools, such as bots, listing trackers, or market analytics.

Web scraping comes with a number of additional challenges, especially on Craigslist. The site uses IP blocks and CAPTCHA challenges to prevent scraping attempts. Using proxies together with user-agent rotation reduces the chance of being blocked.

Using proxies:

    proxies = {
        'http': 'http://your_proxy_ip:your_proxy_port',
        'https': 'https://your_proxy_ip:your_proxy_port'
    }

    response = requests.get(url, proxies=proxies)
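With more than one proxy available, requests can be spread across them in turn. The sketch below builds Requests-style proxy mappings and cycles through them round-robin. `build_proxies` is a hypothetical helper, and the addresses come from a range reserved for documentation, so they are placeholders rather than working proxies. It also assumes the proxy is addressed with an `http://` URL for both keys, which is common but depends on your provider.

```python
from itertools import cycle


def build_proxies(host: str, port: int, scheme: str = "http") -> dict:
    """Build a Requests-style proxies mapping for one proxy server.

    The same proxy URL is used for both HTTP and HTTPS traffic
    (an assumption; check what your proxy provider expects).
    """
    proxy_url = f"{scheme}://{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


# Placeholder addresses from the documentation range 203.0.113.0/24
proxy_pool = cycle([
    build_proxies("203.0.113.10", 8080),
    build_proxies("203.0.113.11", 8080),
])

first = next(proxy_pool)
second = next(proxy_pool)
third = next(proxy_pool)  # the pool wraps around to the first proxy again
```

Each call to `next(proxy_pool)` yields the mapping to pass as `proxies=` on the next request, so consecutive requests leave from different addresses.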

User-agent rotation means changing the browser identity your scraper sends with each request. If you always use the same user-agent, it looks suspicious. Switching between different user-agents makes your scraper seem more like normal users, helping avoid blocks:

    import random

    # The entries must differ from each other, otherwise nothing is rotated
    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15',
        # Add more user agents as needed
    ]

    headers = {
        'User-Agent': random.choice(user_agents)
    }

    response = requests.get(url, headers=headers)
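Because a new user-agent should be picked for every request, it helps to wrap the choice in a function that is called inside the scraping loop. This is a minimal sketch; `build_headers` is a hypothetical name, and the strings in `USER_AGENTS` are only examples of common browser formats.

```python
import random

# Example user-agent strings for three different browsers (illustrative values)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:89.0) Gecko/20100101 Firefox/89.0",
]


def build_headers(rng=random) -> dict:
    """Return request headers with a randomly chosen user-agent.

    `rng` can be the random module or a random.Random instance,
    which makes the choice repeatable in tests.
    """
    return {"User-Agent": rng.choice(USER_AGENTS)}


# Seeded generator so the demonstration is repeatable
rng = random.Random(0)
sample = [build_headers(rng)["User-Agent"] for _ in range(20)]
```

Inside the loop, `requests.get(url, headers=build_headers())` would then send a freshly chosen user-agent with each request.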

Integrating all the modules discussed throughout this tutorial enables the development of a fully functional Python Craigslist scraper. This program iterates over a list of URLs, fetches and parses each page, extracts the needed data, and saves it to a CSV file.

    import requests
    import urllib3
    from bs4 import BeautifulSoup
    import csv
    import random
    import ssl

    # Turn off certificate verification for the standard library's HTTPS client
    # and silence urllib3's warnings. Together with verify=False below this is
    # insecure; keep it only if your proxy setup requires it.
    ssl._create_default_https_context = ssl._create_stdlib_context
    urllib3.disable_warnings()

    # List of Craigslist URLs to scrape
    urls = [
        "link",
        "link"
    ]

    # User agents and proxies (the user agents must differ for rotation to work)
    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15',
    ]

    proxies = [
        {'http': 'http://your_proxy_ip1:your_proxy_port1', 'https': 'https://your_proxy_ip1:your_proxy_port1'},
        {'http': 'http://your_proxy_ip2:your_proxy_port2', 'https': 'https://your_proxy_ip2:your_proxy_port2'},
    ]

    # List to store scraped data
    scraped_data = []

    # Loop through each URL in the list
    for url in urls:
        # Rotate user agent for each request to avoid detection
        headers = {
            'User-Agent': random.choice(user_agents)
        }

        # Pick a random proxy for each request to avoid IP blocking
        proxy = random.choice(proxies)

        try:
            # Send GET request to the Craigslist URL with headers and proxy
            response = requests.get(url, headers=headers, proxies=proxy, timeout=30, verify=False)

            # Check if the request was successful (status code 200)
            if response.status_code == 200:
                # Parse HTML content of the response
                html_content = response.text
                soup = BeautifulSoup(html_content, 'html.parser')

                # Extract data from the parsed HTML; find() returns None when a tag
                # is missing, and the resulting AttributeError is caught below
                title = soup.find('span', id='titletextonly').text.strip()
                price = soup.find('span', class_='price').text.strip()
                description = soup.find('section', id='postingbody').get_text(strip=True, separator='\n')

                # Append scraped data as a dictionary to the list
                scraped_data.append({'Title': title, 'Price': price, 'Description': description})
                print(f"Data scraped for {url}")
            else:
                # Print error message if request fails
                print(f"Failed to retrieve {url}. Status code: {response.status_code}")
        except Exception as e:
            # Print exception message if an error occurs during scraping
            print(f"Exception occurred while scraping {url}: {e}")

    # CSV file setup for storing scraped data
    csv_file = 'craigslist_data.csv'
    fieldnames = ['Title', 'Price', 'Description']

    # Writing scraped data to CSV file
    try:
        with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
            writer = csv.DictWriter(file, fieldnames=fieldnames)

            # Write header row in the CSV file
            writer.writeheader()

            # Iterate through scraped_data list and write each item to the CSV file
            for item in scraped_data:
                writer.writerow(item)

        # Print success message if data is saved successfully
        print(f"Data saved to {csv_file}")
    except IOError:
        # Print error message if there is an error while writing to the CSV file
        print(f"Error occurred while writing data to {csv_file}")

Now that you understand how web scraping works, it’s easy to see why it’s so handy, whether you’re analyzing markets or hunting for leads. Websites are packed with valuable info, and tools like BeautifulSoup and Requests make pulling that data pretty simple. This guide also covered practical tips, such as rotating user agents and proxies to stay under the radar. When done right, scraping with Python can really help businesses and people make smarter decisions in all kinds of areas.
