Scraping Amazon reviews with Python can be useful whether you're analyzing competitors, checking what real customers are saying, or digging into market trends. This brief tutorial walks you through a hands-on process that uses the Requests package and BeautifulSoup to collect review content programmatically.

Before anything else, you'll need to install a couple of libraries. The two core dependencies, Requests for network calls and BeautifulSoup for HTML parsing, can be installed from the terminal:

pip install requests
pip install beautifulsoup4

We will focus on scraping Amazon reviews with Python and examine each stage of the process step by step.

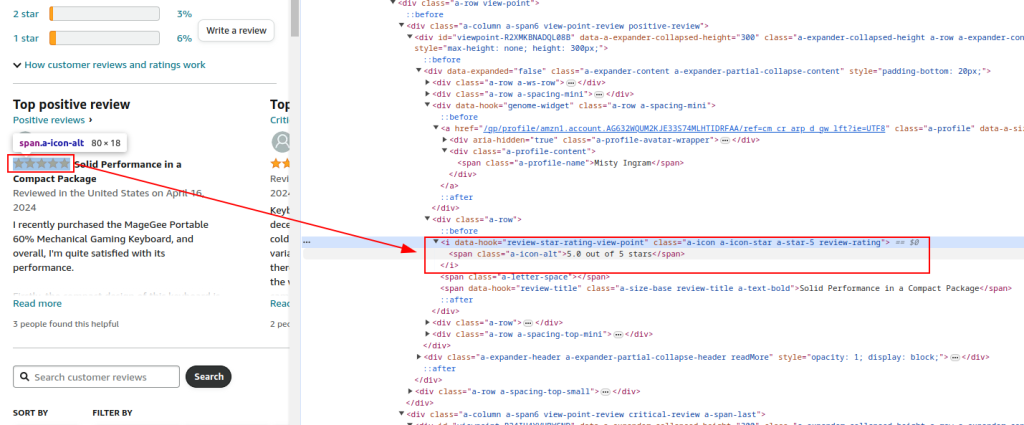

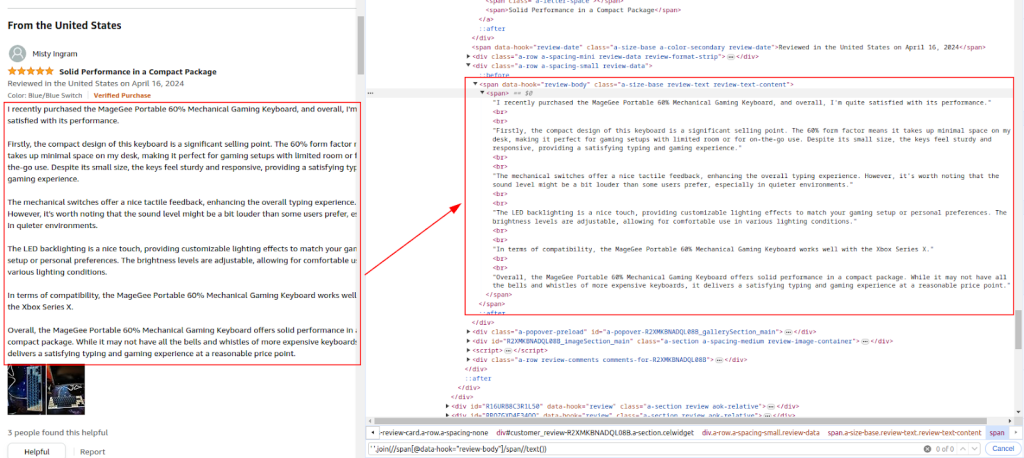

Understanding the site’s HTML structure is essential for identifying review elements. The review section includes fields such as reviewer handle, star rating, and written commentary; these must be located via browser inspection tools.

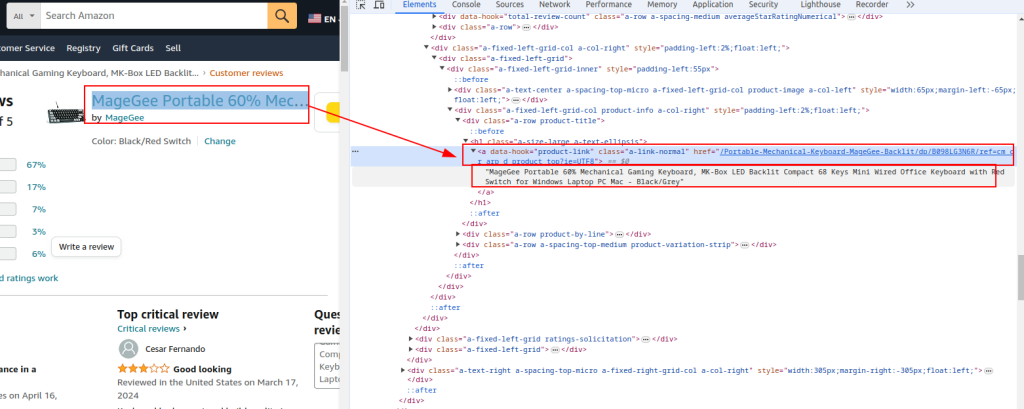

Product title and URL:

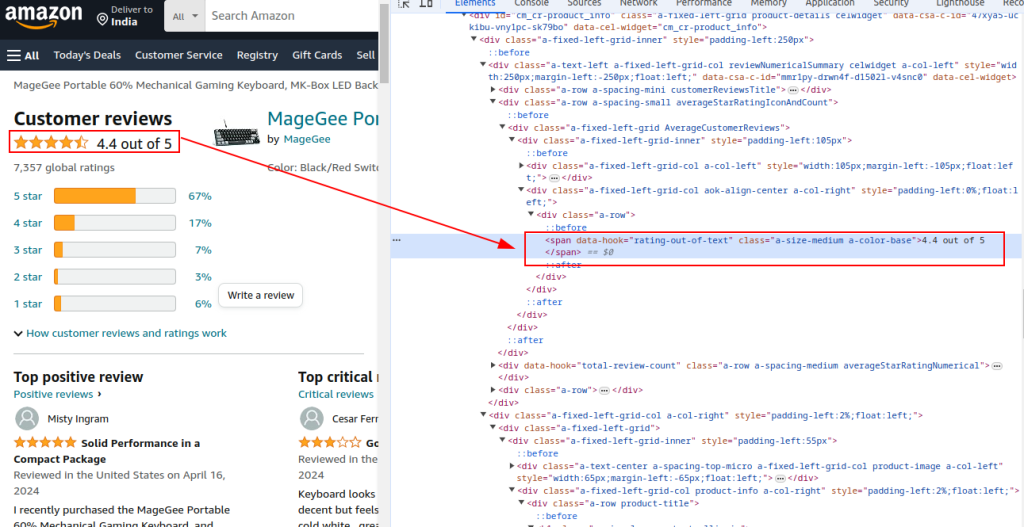

Total rating:

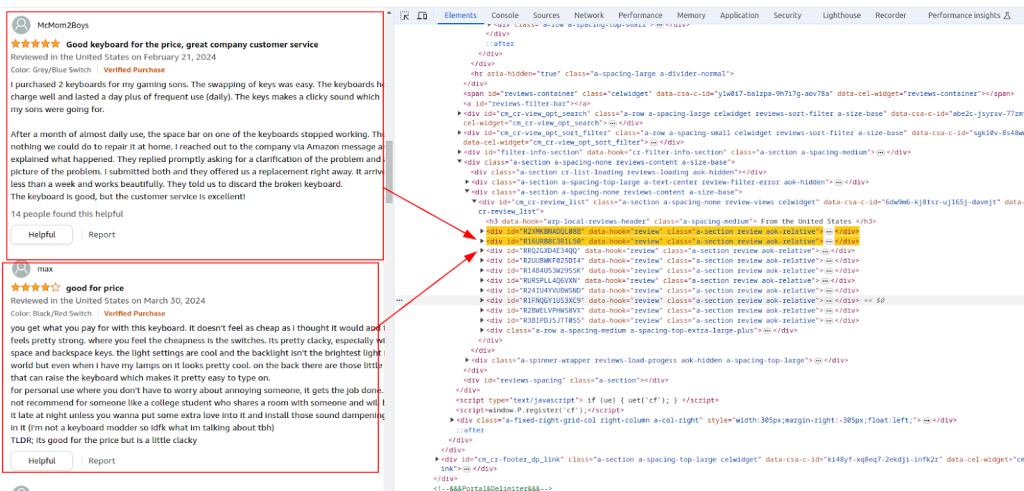

Review section:

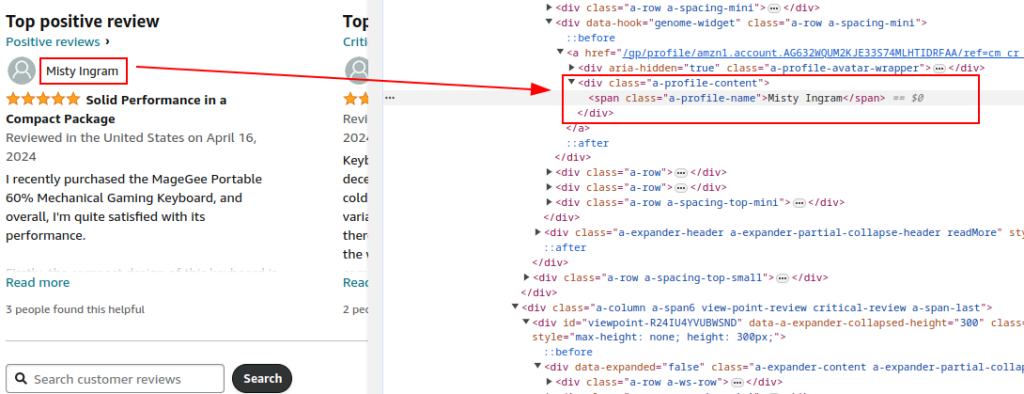

Author name:

Rating:

Comment:
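Once these fields have been located in the inspector, the same lookups can be rehearsed offline before any request is sent. The following is a minimal sketch under stated assumptions: the HTML fragment is hypothetical and only imitates the `data-hook` and class names used later in this tutorial (Amazon's real markup may differ and changes over time), and `safe_text`, `parse_rating`, and `extract_reviews` are illustrative helper names, not part of BeautifulSoup.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment imitating two reviews; real Amazon markup may differ.
SAMPLE_HTML = """
<div data-hook="review">
  <span class="a-profile-name">Jane D.</span>
  <i class="review-rating"><span>4.0 out of 5 stars</span></i>
  <span class="review-text">Solid keyboard for the price.</span>
</div>
<div data-hook="review">
  <span class="a-profile-name">Sam K.</span>
  <span class="review-text">Arrived late.</span>
</div>
"""


def safe_text(parent, name, **kwargs):
    """Return the stripped text of the first match, or '' if nothing matches."""
    element = parent.find(name, **kwargs)
    return element.get_text(strip=True) if element is not None else ''


def parse_rating(text):
    """Convert '4.0 out of 5 stars' to 4.0; return None for empty or malformed text."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return None


def extract_reviews(html):
    """Collect author, numeric rating, and comment for each review block."""
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    for review in soup.find_all('div', {'data-hook': 'review'}):
        rows.append({
            'Author': safe_text(review, 'span', class_='a-profile-name'),
            'Rating': parse_rating(safe_text(review, 'i', class_='review-rating')),
            'Comment': safe_text(review, 'span', class_='review-text'),
        })
    return rows


if __name__ == '__main__':
    for row in extract_reviews(SAMPLE_HTML):
        print(row)
```

The second review in the fragment has no rating element on purpose. Calling `.get_text()` directly on the result of `find()` would raise an `AttributeError` there, because `find()` returns `None` when nothing matches; the helper returns an empty string instead, so one incomplete review does not stop the whole run.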

Headers play an important role. Setting a User-Agent string and other headers makes the request resemble one from a regular browser and reduces the chance of detection. Combining these headers with proxies helps keep your requests smooth and under the radar.

Proxies allow IP rotation to reduce the risk of bans and rate limits. They are especially important for large-scale scraping.
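As a rough illustration of IP rotation, the sketch below cycles through a small pool of proxy addresses and produces a mapping in the shape that Requests accepts for its `proxies` argument. The pool entries are placeholders, `rotating_proxies` is a hypothetical helper name, and the `http://` scheme assumes plain HTTP proxies; adjust it to whatever your provider specifies.

```python
from itertools import cycle

# Placeholder endpoints; substitute the addresses issued by your proxy provider.
PROXY_POOL = [
    'your_proxy_ip_1:your_proxy_port',
    'your_proxy_ip_2:your_proxy_port',
    'your_proxy_ip_3:your_proxy_port',
]


def rotating_proxies(pool):
    """Yield Requests-style proxy mappings, cycling through the pool indefinitely."""
    for address in cycle(pool):
        # Assumption: an HTTP proxy handles both plain and TLS traffic.
        yield {
            'http': f'http://{address}',
            'https': f'http://{address}',
        }


if __name__ == '__main__':
    proxies = rotating_proxies(PROXY_POOL)
    for _ in range(4):
        # Each mapping could be passed as requests.get(..., proxies=mapping).
        print(next(proxies))
```

Each call to `next()` hands back the next address in the pool and wraps around after the last one, so consecutive requests leave from different IPs without any extra bookkeeping.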

Including various headers like Accept-Encoding, Accept-Language, Referer, Connection, and Upgrade-Insecure-Requests mimics a legitimate browser request, reducing the chance of being flagged as a bot.

import requests

url = "https://www.amazon.com/Portable-Mechanical-Keyboard-MageGee-Backlit/product-reviews/B098LG3N6R/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews"

# Example of a proxy provided by the proxy service
# (adjust the scheme to whatever your provider specifies)
proxy = {
    'http': 'http://your_proxy_ip:your_proxy_port',
    'https': 'https://your_proxy_ip:your_proxy_port'
}

# Headers that make the request resemble one sent by a desktop browser
headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'en-US,en;q=0.9',
    'cache-control': 'no-cache',
    'dnt': '1',
    'pragma': 'no-cache',
    'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="91", "Chromium";v="91"',
    'sec-ch-ua-mobile': '?0',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
}

# Send HTTP GET request to the URL with headers and proxy
try:
    response = requests.get(url, headers=headers, proxies=proxy, timeout=10)
    response.raise_for_status()  # Raise an error if the request failed
except requests.exceptions.RequestException as e:
    # Stop here: without a response there is nothing to parse
    raise SystemExit(f"Error: {e}")

After the page loads, BeautifulSoup turns the raw HTML into a searchable tree. From that structure, the scraper grabs the product link, the product title, and the overall rating shown on the page.

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.content, 'html.parser')

# Extracting common product details
product_url = soup.find('a', {'data-hook': 'product-link'}).get('href', '')
product_title = soup.find('a', {'data-hook': 'product-link'}).get_text(strip=True)
total_rating = soup.find('span', {'data-hook': 'rating-out-of-text'}).get_text(strip=True)

Next, we return to the same HTML structure, this time gathering reviewer names, star ratings, and written comments with the selectors identified earlier.

reviews = []

# Each review sits in its own <div data-hook="review"> container
review_elements = soup.find_all('div', {'data-hook': 'review'})

for review in review_elements:
    author_name = review.find('span', class_='a-profile-name').get_text(strip=True)
    rating_given = review.find('i', class_='review-rating').get_text(strip=True)
    comment = review.find('span', class_='review-text').get_text(strip=True)

    reviews.append({
        'Product URL': product_url,
        'Product Title': product_title,
        'Total Rating': total_rating,
        'Author': author_name,
        'Rating': rating_given,
        'Comment': comment,
    })

Python's built-in csv module (here, csv.DictWriter) can save the collected review data into a .csv file for later analysis.

import csv

# Define CSV file path
csv_file = 'amazon_reviews.csv'

# Define CSV fieldnames
fieldnames = ['Product URL', 'Product Title', 'Total Rating', 'Author', 'Rating', 'Comment']

# Writing data to CSV file
with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
    writer = csv.DictWriter(file, fieldnames=fieldnames)
    writer.writeheader()
    for review in reviews:
        writer.writerow(review)

print(f"Data saved to {csv_file}")

The complete script below combines the request, parsing, and file-output steps into a single runnable workflow:

import requests
from bs4 import BeautifulSoup
import csv
import urllib3

# Silence the warnings triggered by verify=False in the request below
urllib3.disable_warnings()

# URL of the Amazon product reviews page
url = "https://www.amazon.com/Portable-Mechanical-Keyboard-MageGee-Backlit/product-reviews/B098LG3N6R/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews"

# Proxy provided by the proxy service with IP-authorization
path_proxy = 'your_proxy_ip:your_proxy_port'
proxy = {
    'http': f'http://{path_proxy}',
    'https': f'https://{path_proxy}'
}

# Headers for the HTTP request
headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'en-US,en;q=0.9',
    'cache-control': 'no-cache',
    'dnt': '1',
    'pragma': 'no-cache',
    'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="91", "Chromium";v="91"',
    'sec-ch-ua-mobile': '?0',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
}

# Send HTTP GET request to the URL with headers and handle exceptions
# (verify=False skips TLS certificate checks; avoid it where possible)
try:
    response = requests.get(url, headers=headers, timeout=10, proxies=proxy, verify=False)
    response.raise_for_status()  # Raise an error if the request failed
except requests.exceptions.RequestException as e:
    # Stop here: without a response there is nothing to parse
    raise SystemExit(f"Error: {e}")

# Use BeautifulSoup to parse the HTML and grab the data you need
soup = BeautifulSoup(response.content, 'html.parser')

# Extracting common product details
product_url = soup.find('a', {'data-hook': 'product-link'}).get('href', '')  # Extract product URL
product_title = soup.find('a', {'data-hook': 'product-link'}).get_text(strip=True)  # Extract product title
total_rating = soup.find('span', {'data-hook': 'rating-out-of-text'}).get_text(strip=True)  # Extract total rating

# Extracting individual reviews
reviews = []
review_elements = soup.find_all('div', {'data-hook': 'review'})

for review in review_elements:
    author_name = review.find('span', class_='a-profile-name').get_text(strip=True)  # Extract author name
    rating_given = review.find('i', class_='review-rating').get_text(strip=True)  # Extract rating given
    comment = review.find('span', class_='review-text').get_text(strip=True)  # Extract review comment

    # Store each review in a dictionary
    reviews.append({
        'Product URL': product_url,
        'Product Title': product_title,
        'Total Rating': total_rating,
        'Author': author_name,
        'Rating': rating_given,
        'Comment': comment,
    })

# Define CSV file path
csv_file = 'amazon_reviews.csv'

# Define CSV fieldnames
fieldnames = ['Product URL', 'Product Title', 'Total Rating', 'Author', 'Rating', 'Comment']

# Writing data to CSV file
with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
    writer = csv.DictWriter(file, fieldnames=fieldnames)
    writer.writeheader()
    for review in reviews:
        writer.writerow(review)

# Print confirmation message
print(f"Data saved to {csv_file}")

Reliable proxies improve the chances of bypassing blocks and help reduce detection by anti-bot filters. For scraping, residential proxies are often preferred due to their trust factor, while static ISP proxies provide speed and stability.

Scraping Amazon product reviews with Python is entirely feasible with just a few libraries. With some careful inspection of the page, you can extract all sorts of useful information, from what customers really think to where your competitors slip up.

There are some hurdles: Amazon doesn't exactly love scrapers. If you're scraping reviews at scale, you'll need proxies to stay under the radar. The most reliable options are residential proxies (high trust score, rotating IPs) or static ISP proxies (fast and stable).
