Tracking price data for popular cryptocurrencies can be challenging due to their high volatility. Conducting thorough research and being prepared to capitalize on profit opportunities are essential when dealing with cryptocurrencies. Obtaining accurate pricing data can sometimes be difficult. APIs are commonly used for this purpose, but free subscriptions often come with limitations.

We will explore how to periodically scrape the current prices of the top 150 cryptocurrencies using Python. For each coin, our cryptocurrency price tracker will gather the following data: coin name, ticker, price, and 24-hour percentage change.

The first step in our Python script is to import the necessary libraries. We will use the `requests` and `BeautifulSoup` libraries to send requests and extract data from HTML files, respectively.

    import requests
    from bs4 import BeautifulSoup
    import csv
    import time
    import random

We will also use `csv` for CSV file operations, `time` for controlling the frequency of price updates, and `random` for rotating proxies.

When sending requests without a premium proxy, you might encounter "Access Denied" responses.

You can set up a proxy this way:

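    proxy = {
        "http": "http://Your_proxy_IP_Address:Your_proxy_port",
        # requests picks the proxy by URL scheme, so HTTPS pages need this key too
        "https": "http://Your_proxy_IP_Address:Your_proxy_port",
    }
    html = requests.get(url, proxies=proxy)

For authenticated proxies, use the following format:

    proxy = {
        "http": "http://username:password@Your_proxy_IP_Address:Your_proxy_port",
        "https": "http://username:password@Your_proxy_IP_Address:Your_proxy_port",
    }
    html = requests.get(url, proxies=proxy)

Remember to replace "Your_proxy_IP_Address" and "Your_proxy_port" with your actual proxy address and port. Also, replace "username" and "password" with your credentials. Here, `url` is the address of the page you want to fetch.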

Rotating proxies is an important technique for scraping modern websites, which often block or restrict access when they detect many requests coming from the same IP address. Proxy rotation relies on the `random` library, which we imported at the start of the script.

Create a list of proxies for rotation:

    # List of proxies
    proxies = [
        "username:password@Your_proxy_IP_Address:Your_proxy_port1",
        "username:password@Your_proxy_IP_Address:Your_proxy_port2",
        "username:password@Your_proxy_IP_Address:Your_proxy_port3",
        "username:password@Your_proxy_IP_Address:Your_proxy_port4",
        "username:password@Your_proxy_IP_Address:Your_proxy_port5",
    ]

Moving on, we define a `get_proxy()` function to randomly select a proxy from our list for each request.

    # Function to rotate your proxies
    def get_proxy():
        # Choose a random proxy from the list
        proxy = random.choice(proxies)
        return {
            "http": f'http://{proxy}',
            "https": f'http://{proxy}'
        }

This function returns a dictionary that routes both HTTP and HTTPS requests through the selected proxy. This setup makes our requests appear to come from several different users, which improves our chances of getting past anti-scraping measures.
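Rotation pays off most when a failed request is retried through a different proxy. The following is a minimal sketch of that idea, not part of the original script: the names `build_proxy_config` and `fetch_with_rotation`, the `max_attempts` parameter, and the 10-second timeout are all assumptions made for illustration. The `fetch` argument is any callable with the same interface as `requests.get`, which keeps the sketch easy to try without network access.

```python
import random


def build_proxy_config(proxy):
    """Map one 'user:pass@host:port' string to the dict format requests expects."""
    return {"http": f"http://{proxy}", "https": f"http://{proxy}"}


def fetch_with_rotation(url, proxy_pool, fetch, max_attempts=3):
    """Try the request through up to max_attempts different proxies.

    Hypothetical helper: `fetch` is any callable that accepts
    (url, proxies=..., timeout=...) like requests.get does.
    """
    attempts = min(max_attempts, len(proxy_pool))
    last_error = None
    # random.sample picks distinct proxies, so no proxy is tried twice
    for proxy in random.sample(proxy_pool, k=attempts):
        try:
            response = fetch(url, proxies=build_proxy_config(proxy), timeout=10)
        except OSError as exc:  # requests' exceptions derive from OSError
            last_error = exc
            continue
        if response.status_code == 200:
            return response
        last_error = RuntimeError(f"HTTP {response.status_code}")
    raise RuntimeError(f"all {attempts} proxy attempts failed") from last_error
```

With the `proxies` list defined earlier, a call could look like `fetch_with_rotation("https://crypto.com/price", proxies, requests.get)`.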

The get_crypto_prices() function scrapes cryptocurrency prices from Coindesk. It sends a GET request to the website using the requests.get() function, with our rotating proxies passed as an argument. We pass in the text of the response and the parser "html.parser" to the BeautifulSoup constructor.

def get_crypto_prices():

url = "https://crypto.com/price"

html = requests.get(url, proxies=get_proxy())

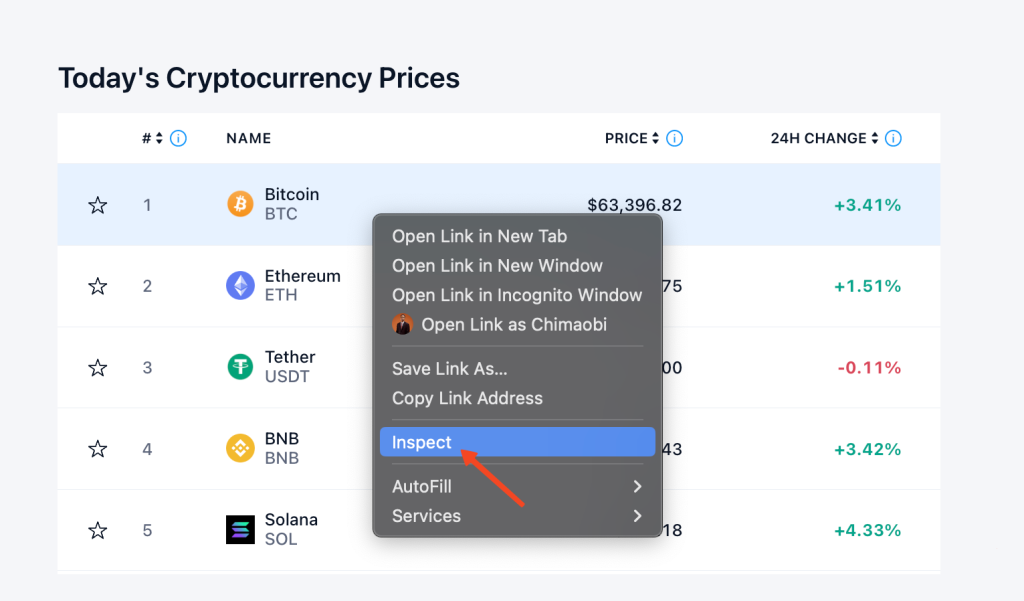

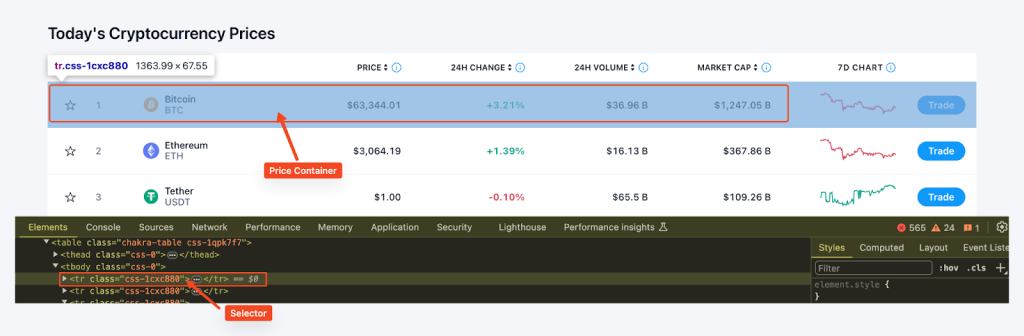

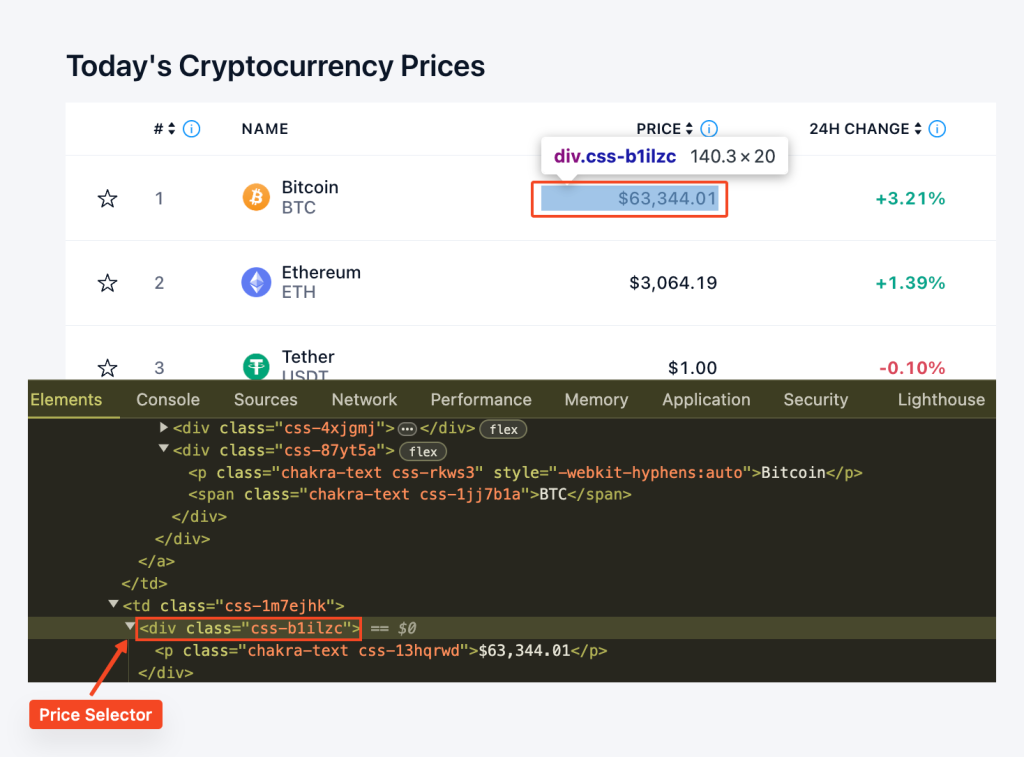

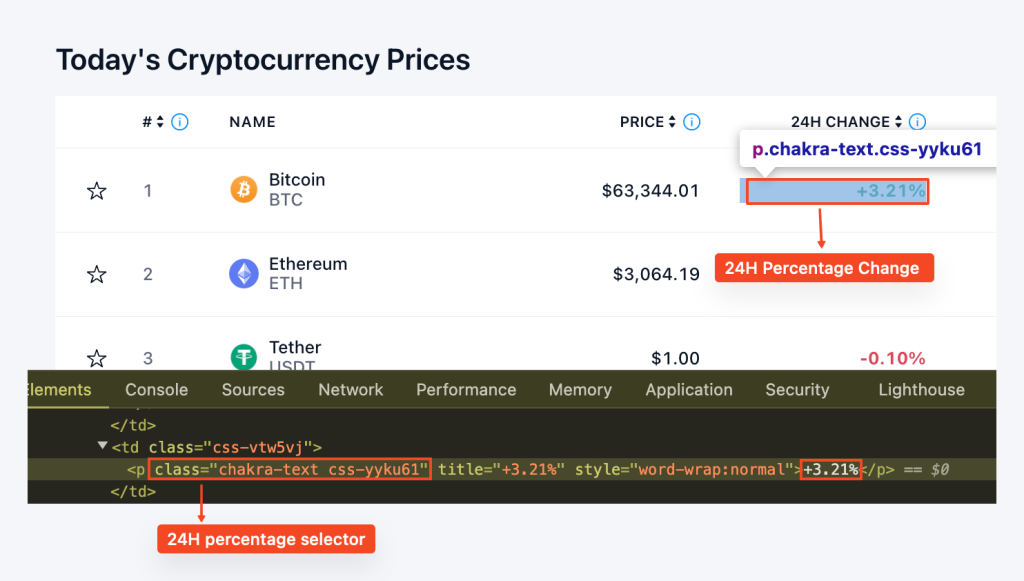

soup = BeautifulSoup(html.text, "html.parser")Before we commence data extraction, we need to understand the site structure. We can use the browser's Developer Tools to inspect the HTML of the webpage. To access Developer Tools, you can right-click on the webpage and select “Inspect”.

We then find all the price rows on the page using BeautifulSoup's `find_all()` method, filtering for `tr` elements with the class `css-1cxc880`, and extract the coin name, ticker, price, and 24-hour percentage change from each row. This data is stored in a dictionary and then appended to the `prices` list.

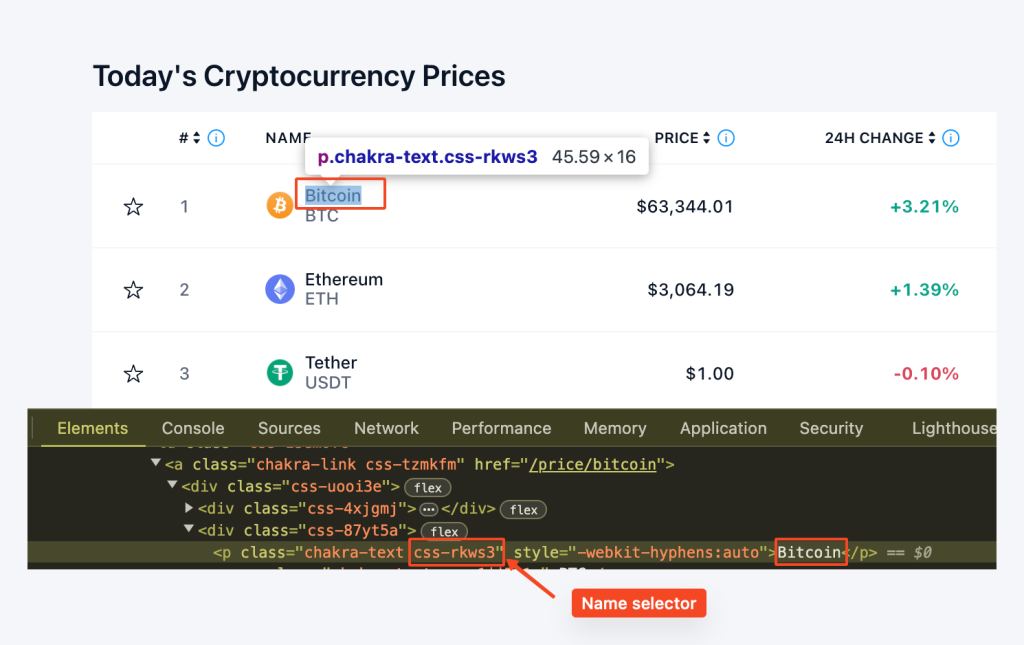
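Each of the four fields is extracted the same way: look up a tag inside the row, then fall back to a placeholder string if the tag is missing. As a rough sketch, that pattern could be factored into a small helper. The names `text_or_default` and `extract_row` are hypothetical and do not appear in the original script, and unlike the original, this version strips surrounding whitespace from every field.

```python
def text_or_default(tag, default):
    """Return the tag's stripped text, or `default` when the tag was not found."""
    return tag.get_text(strip=True) if tag is not None else default


def extract_row(row):
    """Build one record from a table row (a BeautifulSoup Tag or compatible object).

    The class names are the ones used in this article; they may change
    whenever the site is updated.
    """
    return {
        "Coin": text_or_default(
            row.find("p", class_="css-rkws3"), "no name entry"),
        "Ticker": text_or_default(
            row.find("span", class_="css-1jj7b1a"), "no ticker entry"),
        "Price": text_or_default(
            row.find("div", class_="css-b1ilzc"), "no price entry"),
        "24hr-Percentage": text_or_default(
            row.find("p", class_="css-yyku61"), "no percentage entry"),
    }
```

Given a `soup` object, `[extract_row(row) for row in soup.find_all('tr', class_='css-1cxc880')]` would then build the list of dictionaries in one line.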

Here, we use row.find('p', class_='css-rkws3') to locate the ‘p’ element with the class "css-rkws3" . Then, we extract the text and store it in a “name” variable.

coin_name_tag = row.find('p', class_='css-rkws3')

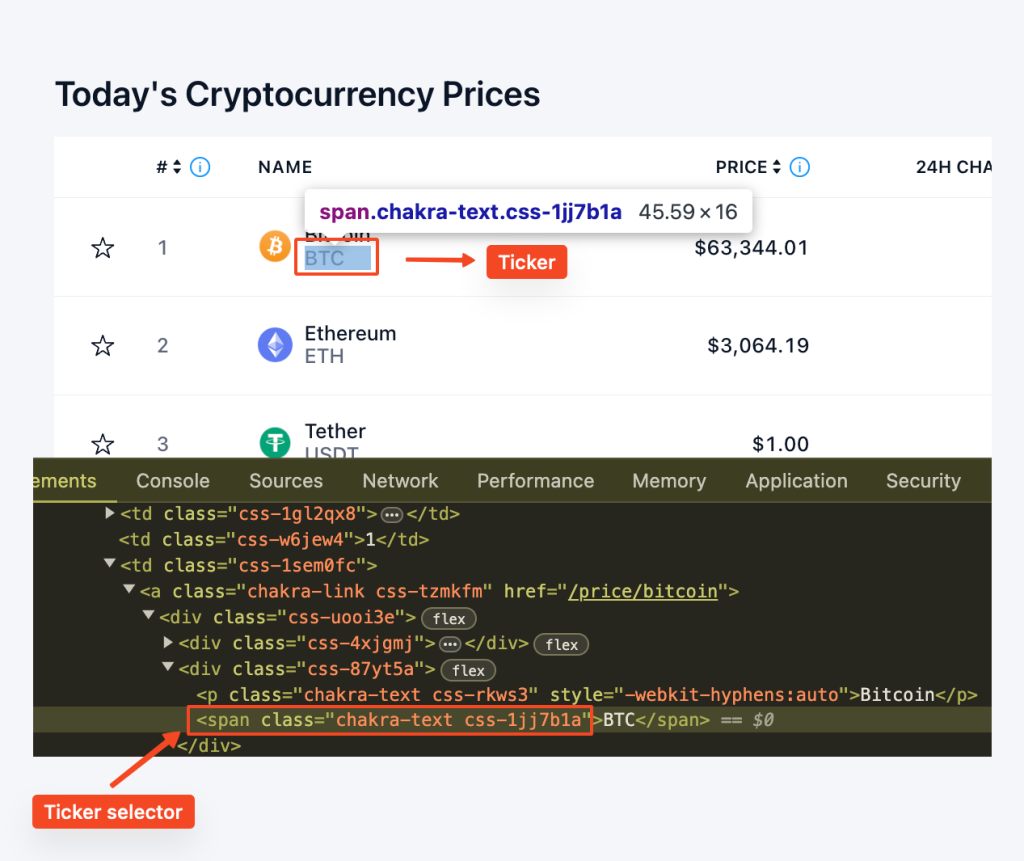

name = coin_name_tag.get_text() if coin_name_tag else "no name entry"Similarly, we use row.find("span", class_="css-1jj7b1a") to locate the span element with the class "css-1jj7b1a". The get_text() method extracts the text content, providing us with the ticker.

    coin_ticker_tag = row.find('span', class_='css-1jj7b1a')
    ticker = coin_ticker_tag.get_text() if coin_ticker_tag else "no ticker entry"

We locate the `div` element with the class "css-b1ilzc". The text content is then stripped and assigned to the `price` variable. We use a conditional expression to handle cases where the element might not be present.

    coin_price_tag = row.find('div', class_='css-b1ilzc')
    price = coin_price_tag.text.strip() if coin_price_tag else "no price entry"

Similarly, we locate the `p` element with the class "css-yyku61" to extract the percentage change. The text content is stripped, and a conditional expression handles its potential absence.

    coin_percentage_tag = row.find('p', class_='css-yyku61')
    percentage = coin_percentage_tag.text.strip() if coin_percentage_tag else "no percentage entry"

Putting it all together, we have a for loop that looks like this:

        # Continues inside get_crypto_prices(), after the soup object is created
        price_rows = soup.find_all('tr', class_='css-1cxc880')
        prices = []

        for row in price_rows:
            coin_name_tag = row.find('p', class_='css-rkws3')
            name = coin_name_tag.get_text() if coin_name_tag else "no name entry"

            coin_ticker_tag = row.find('span', class_='css-1jj7b1a')
            ticker = coin_ticker_tag.get_text() if coin_ticker_tag else "no ticker entry"

            coin_price_tag = row.find('div', class_='css-b1ilzc')
            price = coin_price_tag.text.strip() if coin_price_tag else "no price entry"

            coin_percentage_tag = row.find('p', class_='css-yyku61')
            percentage = coin_percentage_tag.text.strip() if coin_percentage_tag else "no percentage entry"

            prices.append({
                "Coin": name,
                "Ticker": ticker,
                "Price": price,
                "24hr-Percentage": percentage
            })

        return prices

The `export_to_csv()` function exports the scraped data to a CSV file. We use the `csv` library to write the data in the `prices` list to the specified CSV file.

    def export_to_csv(prices, filename="proxy_crypto_prices.csv"):
        with open(filename, "w", newline="") as file:
            fieldnames = ["Coin", "Ticker", "Price", "24hr-Percentage"]
            writer = csv.DictWriter(file, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(prices)

In the main part of our script, we call the `get_crypto_prices()` function to scrape the prices and `export_to_csv()` to export them to a CSV file. We then wait for 5 minutes (300 seconds) before updating the prices again. This is done in an infinite loop, so the prices will keep updating every 5 minutes until the program is stopped.

    if __name__ == "__main__":
        while True:
            prices = get_crypto_prices()
            export_to_csv(prices)
            print("Prices updated. Waiting for the next update...")
            time.sleep(300)  # Update prices every 5 minutes

Here is the full code, which integrates all the techniques and steps we've covered into a single crypto price tracker script.

import requests

from bs4 import BeautifulSoup

import csv

import time

import random

# List of proxies

proxies = [

"username:password@Your_proxy_IP_Address:Your_proxy_port1",

"username:password@Your_proxy_IP_Address:Your_proxy_port2",

"username:password@Your_proxy_IP_Address:Your_proxy_port3",

"username:password@Your_proxy_IP_Address:Your_proxy_port4",

"username:password@Your_proxy_IP_Address:Your_proxy_port5",

]

# Custom method to rotate proxies

def get_proxy():

# Choose a random proxy from the list

proxy = random.choice(proxies)

# Return a dictionary with the proxy for http protocol

return {"http": f'http://{proxy}',

"https": f'http://{proxy}'

}

def get_crypto_prices():

url = "https://crypto.com/price"

html = requests.get(url, proxies=get_proxy())

print(html.status_code)

soup = BeautifulSoup(html.content, "html.parser")

price_rows = soup.find_all('tr', class_='css-1cxc880')

prices = []

for row in price_rows:

coin_name_tag = row.find('p', class_='css-rkws3')

name = coin_name_tag.get_text() if coin_name_tag else "no name entry"

coin_ticker_tag = row.find('span', class_='css-1jj7b1a')

ticker = coin_ticker_tag.get_text() if coin_ticker_tag else "no ticker entry"

coin_price_tag = row.find('div', class_='css-b1ilzc')

price = coin_price_tag.text.strip() if coin_price_tag else "no price entry"

coin_percentage_tag = row.find('p', class_='css-yyku61')

percentage = coin_percentage_tag.text.strip() if coin_percentage_tag else "no percentage entry"

prices.append({

"Coin": name,

"Ticker": ticker,

"Price": price,

"24hr-Percentage": percentage

})

return prices

def export_to_csv(prices, filename="proxy_crypto_prices.csv"):

with open(filename, "w", newline="") as file:

fieldnames = ["Coin", "Ticker", "Price", "24hr-Percentage"]

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writeheader()

writer.writerows(prices)

if __name__ == "__main__":

while True:

prices = get_crypto_prices()

export_to_csv(prices)

print("Prices updated. Waiting for the next update...")

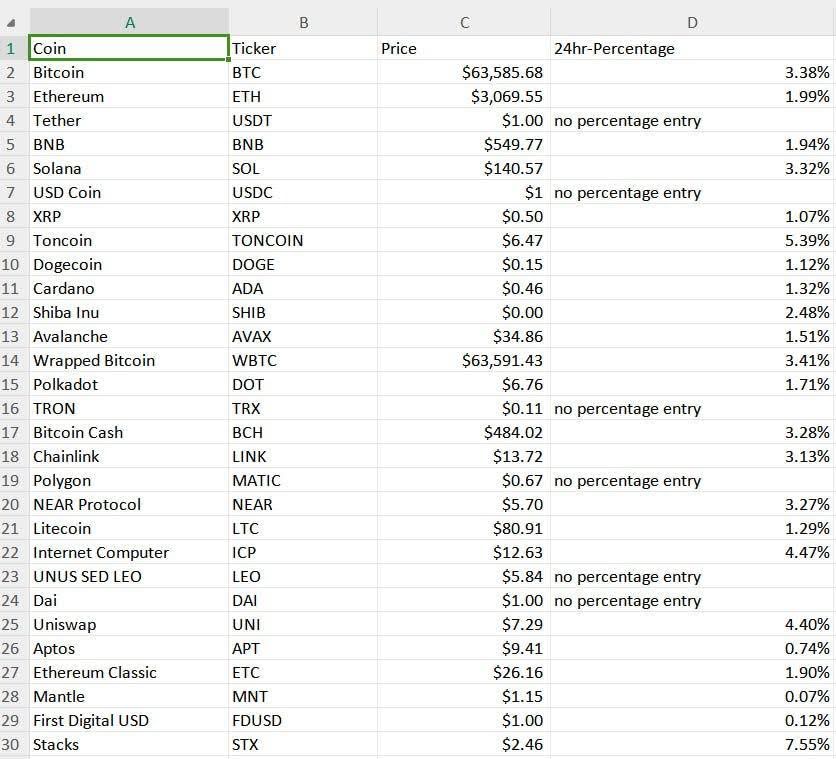

time.sleep(300) # Update prices every 5 minutes (adjust as needed)The results of our crypto price tracker are saved to a CSV file called “proxy_crypto_prices.csv” as seen below:
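Because the tracker stores prices and percentage changes as the text scraped from the page, any later analysis has to convert them to numbers first. The following is a minimal sketch of that step, assuming the values look like `$64,123.45` and `+2.31%` (the exact on-page format is an assumption, and `parse_number` and `load_prices` are hypothetical names that are not part of the script above). Placeholder strings such as "no price entry" are turned into `None`.

```python
import csv
from decimal import Decimal, InvalidOperation


def parse_number(text):
    """Convert scraped text such as '$64,123.45' or '+2.31%' to a Decimal.

    Returns None when the text is not numeric, for example the
    'no price entry' placeholder written by the scraper.
    """
    cleaned = text.strip().replace("$", "").replace(",", "").replace("%", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def load_prices(filename="proxy_crypto_prices.csv"):
    """Read the tracker's CSV file and convert the numeric columns."""
    with open(filename, newline="") as file:
        return [
            {
                **row,
                "Price": parse_number(row["Price"]),
                "24hr-Percentage": parse_number(row["24hr-Percentage"]),
            }
            for row in csv.DictReader(file)
        ]
```

`Decimal` is used instead of `float` here so that prices keep exactly the digits shown on the page.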

Python's straightforward syntax makes it a good choice for building an automated cryptocurrency price tracker, and it makes adding new features and expanding the tracker's capabilities easy. The example provided demonstrates how to create a basic scraper that automatically updates cryptocurrency rates at specified intervals, collects data through a proxy, and saves it in a user-friendly format.
