Accessing data from e-commerce giants like Amazon is crucial for market analysis, pricing strategies, and product research. This data can be gathered through web scraping, which automatically extracts large amounts of information from websites. However, Amazon rigorously protects its data, so traditional scraping techniques are often ineffective.

In this comprehensive guide, we'll delve into methods for collecting product data from Amazon and discuss strategies for getting around the platform's robust anti-scraping systems. We'll explore the use of Python, proxies, and advanced scraping techniques that can help you overcome these challenges and efficiently harvest the data needed for your business or research.

You’ll need Python 3.12.1 or newer installed. Use virtualenv to isolate your environment and avoid package conflicts.

Essential packages:

Development tools:

Start your Python Amazon scraper by fetching the product page HTML with Requests.

Mimicking a real browser:

Here’s a simple example:

Send a GET request to the product URL with custom headers inside a try-except block, and handle connection errors and timeouts gracefully.

Check the HTTP status code after your request:
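A minimal sketch of this request-and-check flow. `fetch_product_page` is a hypothetical helper name, and treating 429 (Too Many Requests) and 503 (Service Unavailable) as signs of throttling is an assumption about how Amazon tends to respond, not documented behavior:

```python
from typing import Dict, Optional

import requests


def fetch_product_page(url: str, headers: Dict[str, str], timeout: float = 30) -> Optional[str]:
    """Return the page HTML on HTTP 200, or None on any failure (hypothetical helper)."""
    try:
        response = requests.get(url, headers=headers, timeout=timeout)
    except requests.exceptions.Timeout:
        print(f"Timed out fetching {url}")
        return None
    except requests.exceptions.ConnectionError as exc:
        print(f"Connection error for {url}: {exc}")
        return None
    except requests.exceptions.RequestException as exc:
        # Catch-all for other requests errors, e.g. a malformed URL
        print(f"Request failed for {url}: {exc}")
        return None

    # Check the status code before trusting the body
    if response.status_code == 200:
        return response.text
    if response.status_code in (429, 503):
        # Assumption: these usually mean the scraper is being throttled or blocked
        print(f"Possible throttling ({response.status_code}) for {url}; slow down")
    elif response.status_code == 404:
        print(f"Product page not found: {url}")
    else:
        print(f"Unexpected status {response.status_code} for {url}")
    return None
```

Returning `None` instead of raising keeps a long scraping loop running; the caller simply skips URLs that failed.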

For retries, implement exponential backoff: wait longer after each failed attempt before retrying. You can use urllib3's Retry utility or write a custom function. This keeps you from hammering Amazon's servers and lowers the risk of bans.
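A minimal sketch of the urllib3 option, assuming urllib3 1.26 or newer (needed for the `allowed_methods` parameter). `build_retrying_session` is a hypothetical helper name, and the retry count, backoff factor, and status list are illustrative choices rather than Amazon-specific values:

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def build_retrying_session(total_retries: int = 5, backoff_factor: float = 1.0) -> requests.Session:
    """Create a Session that retries failed GETs with exponential backoff."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff_factor,  # delays roughly double after each failed attempt
        status_forcelist=[429, 500, 502, 503, 504],  # retry on throttling and server errors
        allowed_methods=["GET"],  # only retry idempotent GET requests
        respect_retry_after_header=True,  # honor Retry-After if the server sends it
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    # Apply the retry policy to both schemes
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session
```

Calls such as `session.get(url, headers=headers, timeout=30)` then retry automatically. If the retries run out on one of the listed status codes, requests raises `requests.exceptions.RetryError`, so keep the call inside a try-except block.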

Use realistic User-Agent strings in your headers to fool basic bot detectors. For larger-scale scraping, rely on proxies.

Proxy management:

When Amazon's anti-bot defenses tighten, consider headless browsers driven by Selenium. Launch Chrome or Firefox in headless mode to fully render JavaScript-heavy pages, and use WebDriverWait to pause until specific elements load so you scrape complete content. For stealth, undetected-chromedriver patches ChromeDriver to avoid some common detection checks, while Selenium Wire makes it easier to route browser traffic through authenticated proxies.

Proxy-Seller stands out as a premium proxy provider for your Python Amazon web scraper.

Key features:

Proxy types for all needs:

By combining custom headers, proxy rotation, and optionally Selenium, you significantly lower your chances of IP bans and blocked requests on Amazon.

To successfully scrape data from Amazon, you can follow the structured algorithm outlined below. This method ensures you retrieve the needed information efficiently and accurately.

Step 1: Sending HTTP requests to Amazon product pages:

Step 2: Parsing the HTML content:

Step 3: Storing the data:

Amazon employs several measures to hinder scraping efforts, including connection speed limitations, CAPTCHA integration, and IP blocking. Users can adopt countermeasures to circumvent these obstacles, such as utilizing high-quality proxies.
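One practical countermeasure is recognizing a CAPTCHA page before trying to parse it, since such a page can come back with an ordinary 200 status. The sketch below is only a heuristic: `looks_like_captcha` is a hypothetical helper, and the marker strings are assumptions based on Amazon's robot-check page that may change at any time:

```python
def looks_like_captcha(html_text: str) -> bool:
    """Heuristically flag Amazon's robot-check page (marker strings are assumptions)."""
    lowered = html_text.lower()
    markers = (
        "/errors/validatecaptcha",  # assumed form action on the robot-check page
        "enter the characters you see below",  # assumed prompt text on that page
    )
    return any(marker in lowered for marker in markers)
```

If it returns `True`, skip parsing, wait, and retry through a different proxy rather than saving empty fields.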

For large-scale scraping, more advanced Python techniques can help harvest substantial amounts of product data. These include header stuffing and TLS fingerprint impersonation (making your client's TLS handshake look like a real browser's), which help evade detection and keep data extraction reliable.

These steps are explained in the following sections, where we implement them in Python 3.12.2.

To initiate a web scraping project, we will begin by setting up a basic scraper using the lxml library for HTML parsing and the requests library to manage HTTP requests directed at the Amazon web server.

Our focus will be on extracting essential information such as product names, prices, and ratings from Amazon product pages. We will also showcase techniques to efficiently parse HTML and manage requests, ensuring precise and organized extraction of data.

To keep project dependencies isolated and avoid conflicts, create a separate virtual environment for this web scraping project. Tools like venv (built into Python) or pyenv (together with its pyenv-virtualenv plugin) work well for this.

You'll need the following third-party Python libraries:

Requests: used to send HTTP requests and retrieve web content. It's often used for web scraping and interacting with web APIs.

Installation:

```bash
pip install requests
```

lxml: a library for parsing and manipulating XML and HTML documents. It's frequently used for web scraping and working with structured data from web pages.

Installation:

```bash
pip install lxml
```

First, import the libraries the scraper needs: requests for handling HTTP requests, csv for reading and writing CSV files, random for generating random values and making random choices, lxml for parsing the raw HTML content, and Dict and List from typing for type hints.

```python
import requests
import csv
import random
from lxml import html
from typing import Dict, List
```

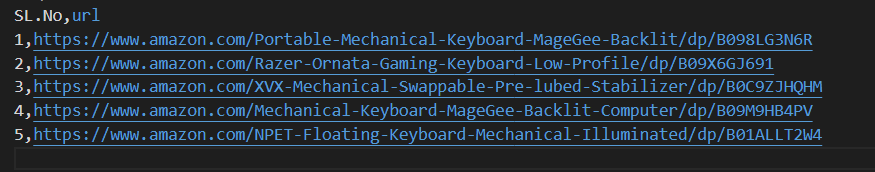

The following code snippet reads a CSV file named amazon_product_urls.csv that has a `url` column with one Amazon product page URL per row. The code iterates over the rows and appends each URL to a list called `urls`.

```python
import csv

urls = []
with open('amazon_product_urls.csv', 'r', newline='') as file:
    reader = csv.DictReader(file)  # uses the header row, so each row is a dict
    for row in reader:
        urls.append(row['url'])
```

Request headers play an important role in HTTP requests, carrying detailed information about the client and the request. When scraping, it is important to replicate the headers a real browser sends so your requests don't stand out and you can access the information you want. By mimicking commonly used headers, scrapers can avoid basic detection techniques and extract data consistently, while still maintaining ethical standards.

Proxies act as intermediaries in web scraping, masking the scraper's IP address to prevent the server from detecting and blocking it. A rotating proxy sends each request from a new IP address, avoiding potential blocks. Residential or mobile proxies are more resilient to anti-scraping measures because their IP addresses belong to real home connections and mobile carriers, which makes them harder to flag.
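A minimal sketch of client-side rotation, assuming you have a list of proxy endpoints. `proxy_rotation` is a hypothetical helper; if your provider already rotates IPs behind a single gateway address, you can skip it and use that one address:

```python
import itertools
from typing import Dict, Iterator, List


def proxy_rotation(proxy_urls: List[str]) -> Iterator[Dict[str, str]]:
    """Yield requests-style proxies dicts, cycling through the pool indefinitely."""
    if not proxy_urls:
        raise ValueError("proxy pool is empty")
    for proxy_url in itertools.cycle(proxy_urls):
        # requests picks the entry matching the target URL's scheme
        yield {"http": proxy_url, "https": proxy_url}
```

Each `next(rotation)` returns the next proxies dict, for example `requests.get(url, headers=headers, proxies=next(rotation), timeout=30)` where `rotation = proxy_rotation(["http://IP1:Port", "http://IP2:Port"])` uses placeholder addresses.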

Code for integrating request headers and proxy servers that use IP-address authorization (your IP is whitelisted with the provider, so no username or password is needed):

```python
headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-language': 'en-IN,en;q=0.9',
    'dnt': '1',
    'sec-ch-ua': '"Google Chrome";v="123", "Not:A-Brand";v="8", "Chromium";v="123"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
}

# Empty strings mean no proxy is used; fill in your proxy, e.g. 'http://IP:Port'
proxies = {'http': '', 'https': ''}
```

Next, create a list of User-Agent strings and pick one at random for each request. Rotating headers such as the User-Agent after each request can further help bypass bot detection measures.

```python
import random

useragents = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4591.54 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.7863.44 Safari/537.36",
]

# Pick a random User-Agent for this request
headers['user-agent'] = random.choice(useragents)
```

When scraping product listing pages, send your requests with User-Agent and Referer headers, just like in the product scraper. Use retry logic with exponential backoff to handle occasional blocks or connection issues, and collect product URLs while skipping duplicates.
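A sketch of duplicate-free URL collection. It assumes product links contain a 10-character ASIN in a `/dp/ASIN` or `/gp/product/ASIN` path, and reduces each link to a canonical `/dp/ASIN` form so the same product reached through different tracking URLs is stored once. `canonical_product_url` and `unique_product_urls` are hypothetical helpers:

```python
import re
from typing import Iterable, List, Optional
from urllib.parse import urljoin, urlparse

# Assumed URL shapes: /dp/ASIN or /gp/product/ASIN, ASIN = 10 uppercase letters/digits
ASIN_PATTERN = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:/|$)")


def canonical_product_url(href: str, base: str = "https://www.amazon.com") -> Optional[str]:
    """Reduce a product link to scheme://host/dp/ASIN, or None if no ASIN is found."""
    absolute = urljoin(base, href)  # listing pages often use relative links
    parsed = urlparse(absolute)
    match = ASIN_PATTERN.search(parsed.path)
    if match is None:
        return None
    return f"{parsed.scheme}://{parsed.netloc}/dp/{match.group(1)}"


def unique_product_urls(hrefs: Iterable[str], base: str = "https://www.amazon.com") -> List[str]:
    """Keep the first occurrence of each product, preserving page order."""
    seen = set()
    unique = []
    for href in hrefs:
        url = canonical_product_url(href, base)
        if url is not None and url not in seen:
            seen.add(url)
            unique.append(url)
    return unique
```

A set gives fast membership checks, while the separate list keeps the order in which products appeared on the listing pages.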

Save all collected product links with timestamps in a CSV file for easy tracking and future processing. Combine the extraction and pagination code to scrape your product listings efficiently, and include error handling for missing selectors and network failures. This keeps your Python Amazon scraper running smoothly and reliably.
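A minimal sketch of saving links with a UTC timestamp. `save_links_with_timestamps` is a hypothetical helper, and the `scraped_at` column name is an assumption:

```python
import csv
from datetime import datetime, timezone
from typing import Iterable


def save_links_with_timestamps(links: Iterable[str], csv_path: str) -> int:
    """Append links to csv_path with a UTC timestamp; return the number of rows written."""
    scraped_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    written = 0
    with open(csv_path, "a", newline="", encoding="utf-8") as csvfile:
        writer = csv.DictWriter(csvfile, fieldnames=["url", "scraped_at"])
        if csvfile.tell() == 0:
            writer.writeheader()  # header only for a new, empty file
        for link in links:
            writer.writerow({"url": link, "scraped_at": scraped_at})
            written += 1
    return written
```

Naming the first column `url` means the output could also serve as the amazon_product_urls.csv input for the product scraper, which only reads that column.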

The following line sends an HTTP GET request to the specified URL with custom headers, a 30-second timeout, and the configured proxies:

```python
response = requests.get(url=url, headers=headers, proxies=proxies, timeout=30)
```

Required data points: title, price, and ratings. Now, let's inspect the page and identify the XPath for each of these elements, as shown in the screenshots.

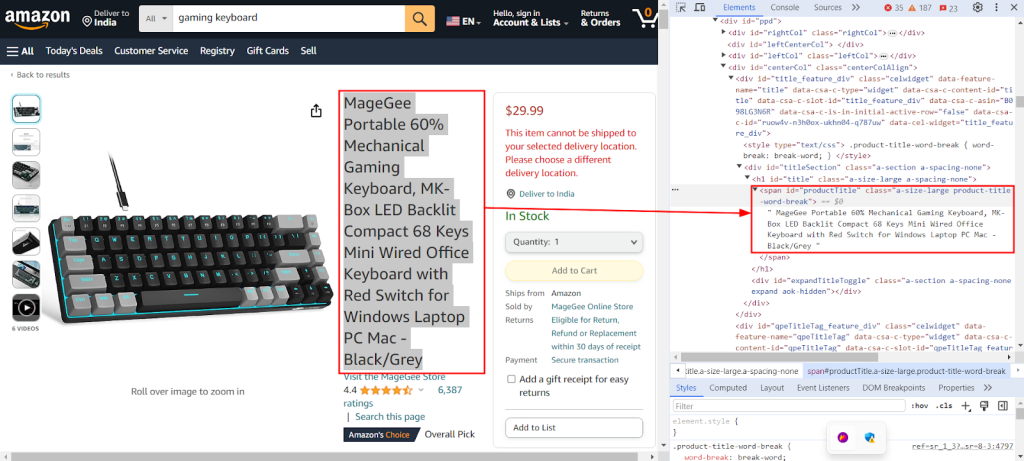

The below screenshot shows the Chrome DevTools "Inspect" feature being used to find the XPath `//span[@id="productTitle"]/text()` for extracting the product title from an Amazon product page.

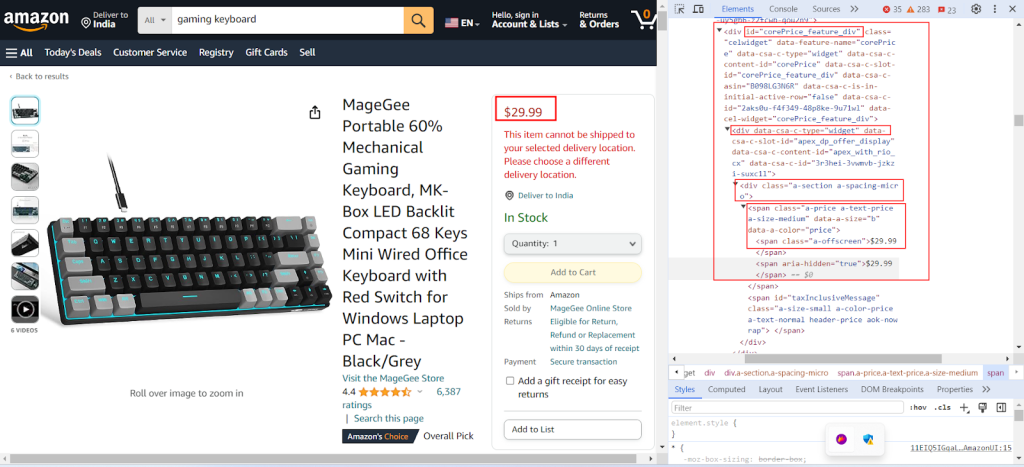

The following screenshot shows finding the respective XPath `//div[@id="corePrice_feature_div"]/div/div/span/span/text()` for extracting the product price from an Amazon product page.

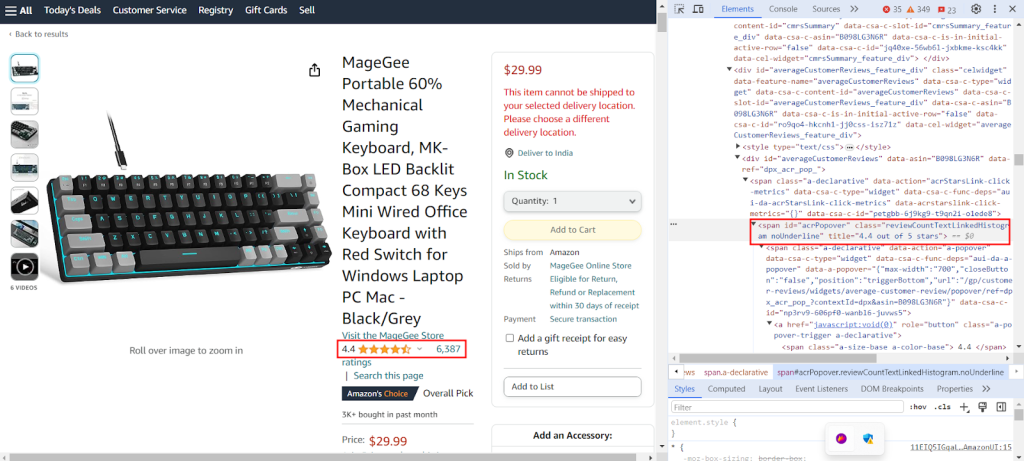

The screenshot shows finding the respective XPath `//span[@id="acrPopover"]/@title` for extracting the product ratings from an Amazon product page.

Create a dictionary that maps each data point (title, ratings, and price) to the XPath expression used to extract it. Note that for the price, the code uses the shorter `//span[@class="a-offscreen"]/text()` expression rather than the full path shown in the screenshot.

```python
xpath_queries = {
    'title': '//span[@id="productTitle"]/text()',
    'ratings': '//span[@id="acrPopover"]/@title',
    'price': '//span[@class="a-offscreen"]/text()',
}
```

The code below parses the HTML content returned by the GET request to the Amazon server into a structured, tree-like format, making its elements and attributes easier to navigate and manipulate.

```python
tree = html.fromstring(response.text)
```
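To check the XPath expressions without sending any request, you can run them against a small hand-written HTML string. The markup below is a hypothetical, heavily simplified stand-in for a product page, not Amazon's real HTML:

```python
from lxml import html

# Hypothetical, simplified markup containing the elements targeted above
SAMPLE_HTML = """
<html><body>
  <span id="productTitle">
      Example Electric Kettle
  </span>
  <span id="acrPopover" title="4.5 out of 5 stars"></span>
  <span class="a-price"><span class="a-offscreen">$29.99</span></span>
</body></html>
"""

xpath_queries = {
    "title": '//span[@id="productTitle"]/text()',
    "ratings": '//span[@id="acrPopover"]/@title',
    "price": '//span[@class="a-offscreen"]/text()',
}

tree = html.fromstring(SAMPLE_HTML)
# Take the first match of each query and trim surrounding whitespace
extracted = {key: tree.xpath(query)[0].strip() for key, query in xpath_queries.items()}
print(extracted)
```

Testing selectors offline like this makes it quicker to tell a broken XPath apart from a blocked request.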

The following code extracts data from the parsed HTML tree using each XPath query and stores it in the `extracted_data` dictionary under the matching key. It takes the first result of each query, and `strip()` removes any leading and trailing whitespace. If an element is missing, an empty string is stored instead of raising an `IndexError`.

```python
extracted_data = {}
for key, xpath_query in xpath_queries.items():
    results = tree.xpath(xpath_query)
    # Keep the first match, or "" if the element is missing from the page
    extracted_data[key] = results[0].strip() if results else ""
```
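The extracted values are raw strings. A sketch for converting them into numbers before analysis, assuming US-marketplace formats such as `$1,299.99` (comma thousands separator, dot decimal) and rating titles like `4.5 out of 5 stars`; other locales such as `29,99 €` would be misparsed. `parse_price` and `parse_rating` are hypothetical helpers:

```python
import re
from decimal import Decimal
from typing import Optional


def parse_price(raw: str) -> Optional[Decimal]:
    """Turn a US-style price such as '$1,299.99' into Decimal('1299.99')."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None  # e.g. the price element was missing or unavailable
    # Drop thousands separators; Decimal avoids float rounding on money
    return Decimal(match.group(0).replace(",", ""))


def parse_rating(raw: str) -> Optional[float]:
    """Turn a rating title such as '4.5 out of 5 stars' into 4.5."""
    match = re.search(r"\d+(?:\.\d+)?", raw)
    return float(match.group(0)) if match else None
```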

The following code writes the `extracted_data` dictionary to a CSV file called product_data.csv. The header row is written only if the file is empty; otherwise, the data is appended as a new row. This lets the CSV file grow with newly extracted data without overwriting existing rows.

```python
import csv

csv_file_path = 'product_data.csv'
fieldnames = ['title', 'ratings', 'price']

# Append mode keeps earlier rows; utf-8 handles non-ASCII product titles
with open(csv_file_path, 'a', newline='', encoding='utf-8') as csvfile:
    writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
    if csvfile.tell() == 0:
        writer.writeheader()  # Write the header only for a new, empty file
    writer.writerow(extracted_data)
```

Below is our complete code, which will help you get started quickly. It is well structured and documented, making it beginner-friendly. To run it, you need a CSV file called amazon_product_urls.csv in the same directory, with a header row containing `url` followed by one Amazon product URL per row.

```python
import csv
import random
from typing import Any, Dict, Optional

import requests
from lxml import html


def send_requests(
    url: str, headers: Dict[str, str], proxies: Dict[str, str]
) -> Optional[requests.Response]:
    """
    Sends an HTTP GET request to a URL with headers and proxies.

    Args:
        url (str): URL to send the request to.
        headers (Dict[str, str]): Dictionary containing request headers.
        proxies (Dict[str, str]): Dictionary containing proxy settings.

    Returns:
        Optional[Response]: Response object if the page looks valid, otherwise None.
    """
    try:
        response = requests.get(url, headers=headers, proxies=proxies, timeout=30)
        # Response validation (heuristic): full product pages are large, so a
        # non-200 status or a short body is treated as a failed or blocked request
        if response.status_code == 200 and len(response.text) > 10000:
            return response
        return None
    except requests.exceptions.RequestException as e:
        print(f"Error occurred while fetching URL {url}: {e}")
        return None


def extract_data_from_html(
    response: requests.Response, xpath_queries: Dict[str, str]
) -> Dict[str, str]:
    """
    Extracts data from HTML content using XPath queries.

    Args:
        response (Response): Response object.
        xpath_queries (Dict[str, str]): Dictionary containing XPath queries for data extraction.

    Returns:
        Dict[str, str]: Dictionary containing extracted data for each XPath query.
    """
    extracted_data = {}
    tree = html.fromstring(response.text)
    for key, xpath_query in xpath_queries.items():
        results = tree.xpath(xpath_query)
        # Keep the first match, or "" if the element is missing from the page
        extracted_data[key] = results[0].strip() if results else ""
    return extracted_data


def save_to_csv(extracted_data: Dict[str, Any]) -> None:
    """
    Saves a dictionary as a row in a CSV file using DictWriter.

    Args:
        extracted_data (Dict[str, Any]): Dictionary representing a row of data.
    """
    csv_file_path = "product_data.csv"
    fieldnames = ["title", "ratings", "price"]
    with open(csv_file_path, "a", newline="", encoding="utf-8") as csvfile:
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        if csvfile.tell() == 0:
            writer.writeheader()  # Write header only if the file is empty
        writer.writerow(extracted_data)


def main() -> None:
    # Reading URLs from a CSV file
    urls = []
    with open("amazon_product_urls.csv", "r", newline="", encoding="utf-8") as file:
        reader = csv.DictReader(file)
        for row in reader:
            urls.append(row["url"])

    # Defining request headers
    headers = {
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
        "accept-language": "en-IN,en;q=0.9",
        "dnt": "1",
        "sec-ch-ua": '"Google Chrome";v="123", "Not:A-Brand";v="8", "Chromium";v="123"',
        "sec-ch-ua-mobile": "?0",
        "sec-ch-ua-platform": '"Windows"',
        "sec-fetch-dest": "document",
        "sec-fetch-mode": "navigate",
        "sec-fetch-site": "same-origin",
        "sec-fetch-user": "?1",
        "upgrade-insecure-requests": "1",
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36",
    }

    useragents = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4591.54 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36",
        "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.7863.44 Safari/537.36",
    ]

    # Defining proxies (replace IP:Port with your proxy address)
    proxies = {"http": "http://IP:Port", "https": "http://IP:Port"}

    # XPath queries for the data points we want to extract
    xpath_queries = {
        "title": '//span[@id="productTitle"]/text()',
        "ratings": '//span[@id="acrPopover"]/@title',
        "price": '//span[@class="a-offscreen"]/text()',
    }

    # Sending requests to URLs
    for url in urls:
        # Useragent rotation in headers
        headers["user-agent"] = random.choice(useragents)
        response = send_requests(url, headers, proxies)
        if response is not None:
            # Extracting data from HTML content
            extracted_data = extract_data_from_html(response, xpath_queries)
            # Saving extracted data to a CSV file
            save_to_csv(extracted_data)


if __name__ == "__main__":
    main()
```

Several proxy types, including datacenter IPv4, rotating mobile, ISP, and residential proxies, are available for uninterrupted data extraction. Proper rotation logic and varied user agents help simulate real user behavior, while specialized proxies support large-scale scraping with built-in rotation and extensive IP pools. Understanding the pros and cons of each option helps you choose the right one for your project.

| Type | Pros | Cons |
|---|---|---|
| Datacenter Proxies | High speed and performance. Cost-effective. Ideal for large-volume requests. | Can be easily detected and blacklisted. Not reliable against anti-scraping or anti-bot systems. |
| Residential Proxies | High legitimacy due to real residential IPs. Wide global IP availability for location-specific data scraping. IP rotation capabilities. | More expensive than datacenter proxies. |
| Mobile Proxies | Highly legitimate IPs. Effective for avoiding blocks and verification prompts. | More expensive than other proxy types. Slower than datacenter proxies due to mobile network reliance. |
| ISP Proxies | Highly reliable IPs. Faster than residential IPs. | Limited IP availability. IP rotation not available. |

Bypassing Amazon's stronger anti-bot defenses may require advanced methods such as stealthy headless browsers and CAPTCHA-solving services like the 2Captcha or Anti-Captcha APIs.

Amazon frequently updates its page structure and CSS selectors, which can quickly break scrapers.
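One way to soften selector changes is to keep an ordered list of fallback XPaths per field and use the first one that returns text. The sketch below reuses the two price XPaths from this article (the short `a-offscreen` query and the longer path from the screenshot); `first_match` and `PRICE_XPATHS` are hypothetical names:

```python
from typing import Iterable, Optional

from lxml import html

# Ordered fallbacks: if the markup changes, a later expression may still match
PRICE_XPATHS = [
    '//span[@class="a-offscreen"]/text()',
    '//div[@id="corePrice_feature_div"]/div/div/span/span/text()',
]


def first_match(tree: html.HtmlElement, xpaths: Iterable[str]) -> Optional[str]:
    """Return the first non-empty result from a list of XPath expressions."""
    for xpath in xpaths:
        for result in tree.xpath(xpath):
            text = str(result).strip()
            if text:
                return text
    return None  # nothing matched: inspect the page and update the selectors
```

Logging every `None` result gives an early warning that the page layout has changed.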

Sending too many requests too quickly triggers bot defenses such as CAPTCHAs and IP blocks.
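A simple way to pace requests is to enforce a randomized minimum gap between them. The `Throttle` class below is a hypothetical sketch; the delay range you pass in is an assumption to tune, not a documented Amazon limit:

```python
import random
import time
from typing import Optional


class Throttle:
    """Enforce a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay: float, max_delay: float) -> None:
        if min_delay < 0 or max_delay < min_delay:
            raise ValueError("require 0 <= min_delay <= max_delay")
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._last: Optional[float] = None  # monotonic time of the previous request

    def wait(self) -> float:
        """Sleep just long enough to respect the delay; return the seconds slept."""
        slept = 0.0
        if self._last is not None:
            # Random target gap so requests don't arrive at a fixed rhythm
            target = random.uniform(self.min_delay, self.max_delay)
            elapsed = time.monotonic() - self._last
            slept = max(0.0, target - elapsed)
            time.sleep(slept)
        self._last = time.monotonic()
        return slept
```

Create one instance before the scraping loop, for example `Throttle(2, 5)`, and call `wait()` right before each request. Time already spent parsing counts toward the gap, so the scraper never waits longer than needed.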

| Service Type | Benefit/Examples |
|---|---|
| Proxy Management | Proxy-Seller helps you manage geo-diverse proxies across Europe, Asia, North America, and globally. Their fast, dedicated proxies reduce connection drops. APIs and dashboards automate proxy rotation. |
| Specialized APIs | Consider using specialized scraping APIs like ZenRows that handle proxy rotation, headless browser rendering, and CAPTCHA solving automatically. They return structured JSON data. |

Other notable specialized services include ScraperAPI, Bright Data, and Oxylabs, which offer similar features for large-scale scraping.

By combining proxy management via Proxy-Seller, headless browsers, and smart request pacing, you will make your Python Amazon scraper more robust, efficient, and less likely to get blocked.

Scraping product data from Amazon involves meticulous preparation to navigate the platform's anti-scraping mechanisms effectively. Utilizing proxy servers along with Python enables efficient data processing and targeted extraction of necessary information. When selecting proxies for web scraping, it's crucial to consider factors such as performance, cost, server reliability, and the specific requirements of your project. Employing dynamic proxies and implementing strategies to counteract security measures can minimize the risk of being blocked and enhance the overall efficiency of the scraping process.
