Although this way of collecting data seems convenient, many websites frown on it, and scraping can have consequences, such as getting our IP banned.

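On the plus side, proxy services help us avoid these consequences. They let us collect data online from different IP addresses. A single proxy already offers some protection, but several are better: rotating multiple proxies while scraping makes our interactions with the site look random and lowers the risk of being blocked.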
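In code, rotation can be as simple as cycling through the proxy list so that consecutive requests go out through different addresses. The sketch below uses `itertools.cycle` for round-robin selection; `assign_proxies` is a hypothetical helper that is not part of the script in this guide, and the `192.0.2.x` addresses are placeholders from the documentation-only IP range.

```python
from itertools import cycle


def assign_proxies(urls, proxies):
    """Pair each URL with a proxy, cycling through the proxy list in order."""
    if not proxies:
        raise ValueError("need at least one proxy")
    rotation = cycle(proxies)  # endless iterator: p0, p1, ..., p0, p1, ...
    return [(url, next(rotation)) for url in urls]


# Placeholder addresses (documentation range), not real proxies
proxies = ["192.0.2.10:8080", "192.0.2.11:8080"]
pages = [f"https://books.toscrape.com/catalogue/page-{n}.html" for n in range(1, 4)]
for url, proxy in assign_proxies(pages, proxies):
    print(proxy, "->", url)
```

With two proxies and three pages, the first proxy is reused for the third page, which is the behavior the rotation loop in this guide also relies on.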

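The target website (source) for this guide is an online bookstore, https://books.toscrape.com, which mimics a book e-commerce site: it lists each book's name, price, and availability. Because this guide focuses on rotating proxies rather than on organizing the returned data, the results are simply printed to the console.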

We'll install a few Python modules, import them into our file, and then write the functions that rotate proxies and scrape the site.

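```shell
pip install requests beautifulsoup4 lxml
```

The command above installs 3 of the 5 Python modules the scraper needs. Requests lets us send HTTP requests to the website, beautifulsoup4 lets us extract information from the page HTML those requests return, and lxml is the HTML parser BeautifulSoup uses.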

We also need the built-in threading module, so we can check several proxies at the same time, and json, to read the proxy list from a JSON file.

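```python
import requests
import threading
from requests.auth import HTTPProxyAuth
import json
from bs4 import BeautifulSoup
import lxml  # parser backend for BeautifulSoup(..., "lxml"); fails early if missing
import time

url_to_scrape = "https://books.toscrape.com"

# Proxies that pass verification
valid_proxies = []

# Scraped data, one entry per book
book_names = []
book_price = []
book_availability = []

next_button_link = ""
```

A scraper that rotates proxies needs a list of proxies to choose from during rotation. Some proxies require authentication and some don't, so we'll build a list of dictionaries holding each proxy's details, including the username and password when authentication is required.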

The cleanest approach is to keep the proxy details in a separate JSON file (the script reads it as `proxy-list.json`), like this:

```json
[
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  },
  {
    "proxy_address": "XX.X.XX.X:XX",
    "proxy_username": "",
    "proxy_password": ""
  }
]
```

In the "proxy_address" field, enter the IP address and port, separated by a colon. In the "proxy_username" and "proxy_password" fields, enter the username and password used for authorization.

The file above lists 4 proxies for the script to choose from. Leave the username and password empty for proxies that don't require authentication.
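Before wiring the file into the scraper, it can help to check that every entry has the three expected keys and to see how an entry maps onto the `proxies` dictionary that Requests accepts. The sketch below assumes plain HTTP proxies (hence the `http://` scheme in both values). `load_proxy_list` and `to_requests_proxies` are hypothetical helper names, and credentials are percent-encoded so characters such as `@` or `:` in a password don't break the URL.

```python
import json
from urllib.parse import quote

REQUIRED_KEYS = {"proxy_address", "proxy_username", "proxy_password"}


def load_proxy_list(text):
    """Parse the JSON proxy list and check each entry has the expected keys."""
    entries = json.loads(text)
    for i, entry in enumerate(entries):
        missing = REQUIRED_KEYS - set(entry)
        if missing:
            raise ValueError(f"entry {i} is missing {sorted(missing)}")
    return entries


def to_requests_proxies(entry):
    """Build a Requests-style proxies mapping from one JSON entry."""
    user, password = entry["proxy_username"], entry["proxy_password"]
    # Only embed credentials when both fields are filled in
    auth = f"{quote(user, safe='')}:{quote(password, safe='')}@" if user and password else ""
    url = f"http://{auth}{entry['proxy_address']}"
    # Same proxy for both schemes, because the target site is served over https
    return {"http": url, "https": url}


sample = '[{"proxy_address": "192.0.2.10:8080", "proxy_username": "", "proxy_password": ""}]'
print(to_requests_proxies(load_proxy_list(sample)[0]))
```

With the real file, the input would be the text of `proxy-list.json`, for example `load_proxy_list(open("proxy-list.json").read())`.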

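```python
from urllib.parse import quote


def verify_proxies(proxy: dict):
    # Build the proxy URL, embedding credentials only when both are set.
    # Requests turns them into a Proxy-Authorization header, which (unlike
    # auth=HTTPProxyAuth(...)) also reaches the proxy when tunnelling HTTPS.
    if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":
        user = quote(proxy['proxy_username'], safe="")
        password = quote(proxy['proxy_password'], safe="")
        proxy_url = f"http://{user}:{password}@{proxy['proxy_address']}"
    else:
        proxy_url = f"http://{proxy['proxy_address']}"

    try:
        # url_to_scrape is an https:// URL, so an "https" entry is required;
        # with only an "http" key, Requests would send it directly, bypassing the proxy
        res = requests.get(
            url_to_scrape,
            proxies={"http": proxy_url, "https": proxy_url},
            timeout=10,  # don't let a dead proxy block this thread indefinitely
        )
        if res.status_code == 200:
            valid_proxies.append(proxy)
            print(f"Proxy Validated: {proxy['proxy_address']}")
    except requests.RequestException:
        print("Proxy Invalidated, Moving on")
```

As a precaution, this function makes sure each supplied proxy is live and working. For every dictionary in the JSON file, it sends a GET request to the website through that proxy. If the response status code is 200, the proxy is added to the list of valid proxies; if the request fails, the script moves on.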
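Proxy checks spend most of their time waiting on the network, so they parallelize well. As an alternative to managing `threading.Thread` objects by hand, here is a minimal sketch with the standard library's `concurrent.futures.ThreadPoolExecutor`, which waits for every check to finish and keeps the input order. `filter_working_proxies` is a hypothetical helper, and `check` stands in for any function that returns `True` when a proxy works and catches its own network errors (for example, a wrapper around a GET request with a timeout). The fake checker exists only so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def filter_working_proxies(proxies, check, max_workers=8):
    """Run check(proxy) concurrently and return the proxies that passed, in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order and blocks until each is ready,
        # so no fixed sleep is needed to wait for slow proxies
        results = list(pool.map(check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]


def fake_check(proxy):
    # Hypothetical stand-in: pretend only addresses ending in ":8080" respond
    return proxy["proxy_address"].endswith(":8080")


candidates = [
    {"proxy_address": "192.0.2.10:8080"},
    {"proxy_address": "192.0.2.11:3128"},
    {"proxy_address": "192.0.2.12:8080"},
]
print([p["proxy_address"] for p in filter_working_proxies(candidates, fake_check)])
```

If `check` raises instead of returning `False`, `list(pool.map(...))` re-raises that exception, which is why the checker should handle its own errors.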

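BeautifulSoup needs the site's HTML to extract the data we want, so we create request_function(), which takes a URL and the selected proxy and returns the page HTML as text. The proxy parameter lets us route each request through a different proxy, which is what rotates them.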

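```python
from urllib.parse import quote


def request_function(url, proxy):
    # Build the proxy URL, embedding credentials only when both are set
    if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":
        user = quote(proxy['proxy_username'], safe="")
        password = quote(proxy['proxy_password'], safe="")
        proxy_url = f"http://{user}:{password}@{proxy['proxy_address']}"
    else:
        proxy_url = f"http://{proxy['proxy_address']}"

    try:
        # Cover both schemes so https:// pages also go through the proxy
        response = requests.get(
            url,
            proxies={"http": proxy_url, "https": proxy_url},
            timeout=10,
        )
        if response.status_code == 200:
            return response.text
    except requests.RequestException as err:
        print(f"Switching Proxies, URL access was unsuccessful: {err}")
    # Non-200 status or network error: tell the caller to try another proxy
    return None
```

data_extract() pulls the data we need out of the supplied HTML. It collects the HTML elements that hold each book's information (name, price, and availability), and it also extracts the link to the next page.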

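The next-page link is the tricky part: it is relative, and its form differs between the home page and the catalogue pages, so the code has to account for that. Finally, the function loops over the books, extracts each name, price, and availability, and returns the next-button link, which we use to fetch the HTML of the next page.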
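A more general way to handle relative links is to resolve each `href` against the URL of the page it came from, using the standard library's `urllib.parse.urljoin`. The sketch below assumes the caller keeps track of the current page URL (the function in this guide does not; it checks for "catalogue" instead). `resolve_next_link` is a hypothetical helper name.

```python
from urllib.parse import urljoin


def resolve_next_link(current_url, href):
    """Resolve a relative 'next' href against the page it appeared on."""
    if href is None:  # the last page has no "next" button
        return None
    return urljoin(current_url, href)


# Home page links to "catalogue/page-2.html"; catalogue pages link to "page-N.html"
print(resolve_next_link("https://books.toscrape.com/", "catalogue/page-2.html"))
print(resolve_next_link("https://books.toscrape.com/catalogue/page-2.html", "page-3.html"))
```

Both calls produce URLs under `/catalogue/`, without any special-casing of the path.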

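```python
def data_extract(response):
    soup = BeautifulSoup(response, "lxml")
    # Each book on a listing page is an <li> with these grid classes
    books = soup.find_all("li", class_="col-xs-6 col-sm-4 col-md-3 col-lg-3")

    for each in books:
        book_names.append(each.find("img").get("alt"))
        book_price.append(each.find("p", class_="price_color").text)
        book_availability.append(each.find("p", class_="instock availability").text.strip())

    # The last page has no "next" button; return None so the caller can stop
    next_li = soup.find("li", class_="next")
    if next_li is None:
        return None
    next_button_link = next_li.find("a").get("href")
    # The home page links to "catalogue/page-2.html", catalogue pages to "page-3.html"
    if "catalogue" in next_button_link:
        return f"{url_to_scrape}/{next_button_link}"
    return f"{url_to_scrape}/catalogue/{next_button_link}"
```

To tie everything together, we load the proxy list from the JSON file, verify each proxy in its own thread, fetch the first page, and then rotate through the valid proxies, requesting one page per proxy: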

with open("proxy-list.json") as json_file:

proxies = json.load(json_file)

for each in proxies:

threading.Thread(target=verify_proxies, args=(each, )).start()

time.sleep(4)

for i in range(len(valid_proxies)):

response = request_function(url_to_scrape, valid_proxies[i])

if response != None:

next_button_link = data_extract(response)

break

else:

continue

for proxy in valid_proxies:

print(f"Using Proxy: {proxy['proxy_address']}")

response = request_function(next_button_link, proxy)

if response is not None:

next_button_link = data_extract(response)

else:

continue

for each in range(len(book_names)):

print(f"No {each+1}: Book Name: {book_names[each]} Book Price: {book_price[each]} and Availability {book_availability[each]}")import requests

import threading

from requests.auth import HTTPProxyAuth

import json

from bs4 import BeautifulSoup

import time

url_to_scrape = "https://books.toscrape.com"

valid_proxies = []

book_names = []

book_price = []

book_availability = []

next_button_link = ""

def verify_proxies(proxy: dict):

try:

if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":

proxy_auth = HTTPProxyAuth(proxy['proxy_username'], proxy['proxy_password'])

res = requests.get(

url_to_scrape,

auth=proxy_auth,

proxies={

"http": proxy['proxy_address'],

}

)

else:

res = requests.get(url_to_scrape, proxies={

"http": proxy['proxy_address'],

})

if res.status_code == 200:

valid_proxies.append(proxy)

print(f"Proxy Validated: {proxy['proxy_address']}")

except:

print("Proxy Invalidated, Moving on")

# 读取页面的 HTML 元素

def request_function(url, proxy):

try:

if proxy['proxy_username'] != "" and proxy['proxy_password'] != "":

proxy_auth = HTTPProxyAuth(proxy['proxy_username'], proxy['proxy_password'])

response = requests.get(

url,

auth=proxy_auth,

proxies={

"http": proxy['proxy_address'],

}

)

else:

response = requests.get(url, proxies={

"http": proxy['proxy_address'],

})

if response.status_code == 200:

return response.text

except Exception as err:

print(f"Switching Proxies, URL access was unsuccessful: {err}")

return None

# 刮削

def data_extract(response):

soup = BeautifulSoup(response, "lxml")

books = soup.find_all("li", class_="col-xs-6 col-sm-4 col-md-3 col-lg-3")

next_button_link = soup.find("li", class_="next").find('a').get('href')

next_button_link = f"{url_to_scrape}/{next_button_link}" if "catalogue" in next_button_link else f"{url_to_scrape}/catalogue/{next_button_link}"

for each in books:

book_names.append(each.find("img").get("alt"))

book_price.append(each.find("p", class_="price_color").text)

book_availability.append(each.find("p", class_="instock availability").text.strip())

return next_button_link

# 从 JSON 获取代理

with open("proxy-list.json") as json_file:

proxies = json.load(json_file)

for each in proxies:

threading.Thread(target=verify_proxies, args=(each,)).start()

time.sleep(4)

for i in range(len(valid_proxies)):

response = request_function(url_to_scrape, valid_proxies[i])

if response is not None:

next_button_link = data_extract(response)

break

else:

continue

for proxy in valid_proxies:

print(f"Using Proxy: {proxy['proxy_address']}")

response = request_function(next_button_link, proxy)

if response is not None:

next_button_link = data_extract(response)

else:

continue

for each in range(len(book_names)):

print(

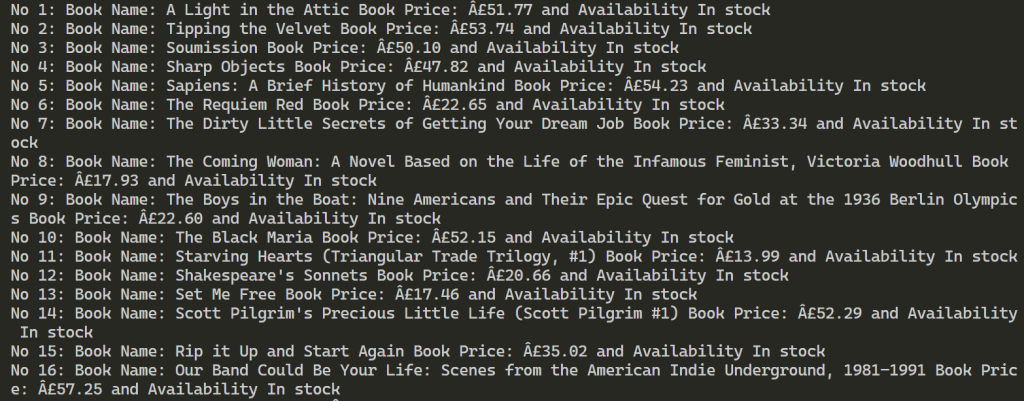

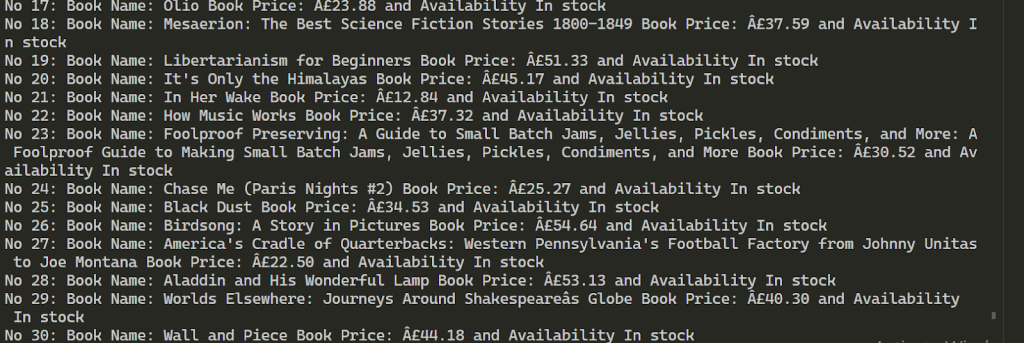

f"No {each + 1}: Book Name: {book_names[each]} Book Price: {book_price[each]} and Availability {book_availability[each]}")成功执行后,结果如下。接着,它使用提供的 2 个代理提取了 100 多本书的信息。

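Using multiple proxies for web scraping lets you send more requests to the target resource and helps you get around blocks. To keep scraping stable, it's best to use fast, high-trust IP addresses, such as static ISP proxies and rotating residential proxies. The script above can also be extended to suit a wide range of scraping requirements.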
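As one example of such an extension, the sketch below retries a failed page through the next proxy in the rotation instead of skipping it, and gives up after a fixed number of attempts. `fetch_with_failover` is a hypothetical helper. `fetch` is any callable that takes a URL and a proxy and returns page text or `None`; it could wrap `request_function()`. The rotation iterator is shared across pages so rotation continues from page to page, and the fake fetcher exists only so the sketch runs without network access.

```python
from itertools import cycle


def fetch_with_failover(url, rotation, fetch, max_attempts=3):
    """Try url through successive proxies from a shared rotation.

    Returns (page_text, proxy) on success, or (None, None) after max_attempts failures.
    """
    for _ in range(max_attempts):
        proxy = next(rotation)  # advances the shared rotation even on failure
        text = fetch(url, proxy)
        if text is not None:
            return text, proxy
    return None, None


def fake_fetch(url, proxy):
    # Hypothetical stand-in: proxy "p2" is always down
    return None if proxy == "p2" else f"<html>{url}</html>"


rotation = cycle(["p1", "p2", "p3"])  # shared across pages so rotation continues
for url in ["page-1", "page-2", "page-3"]:
    text, used = fetch_with_failover(url, rotation, fake_fetch)
    print(url, "via", used)
```

Here page-2 first hits the dead proxy and is retried through `p3`. An empty proxy list makes `next()` raise `StopIteration`, so the caller should check that at least one proxy was validated.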
