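Scraping Amazon reviews with Python is useful for competitor analysis, review monitoring, and market research. This article demonstrates how to scrape product reviews from Amazon efficiently using Python with the BeautifulSoup and Requests libraries.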

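Before starting the scraping process, make sure the required Python libraries are installed:

    pip install requests
    pip install beautifulsoup4

We will focus on extracting product reviews from an Amazon page and walk through each stage of the scraping process step by step.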

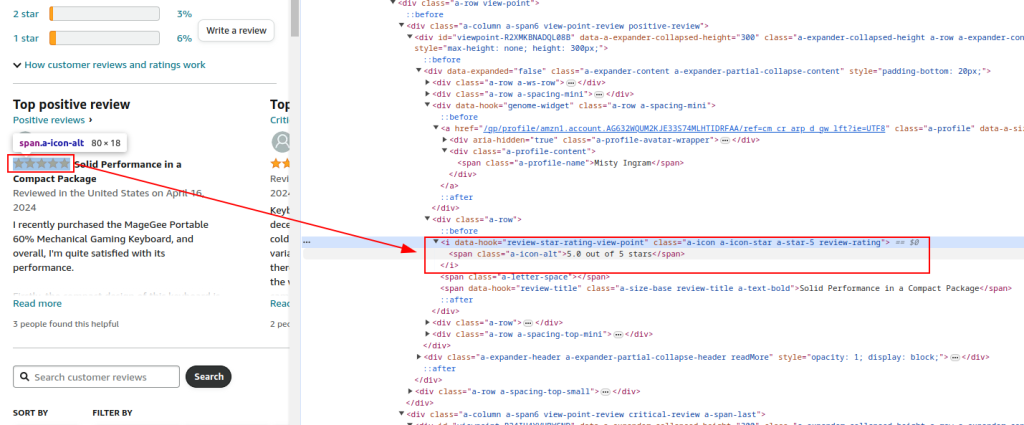

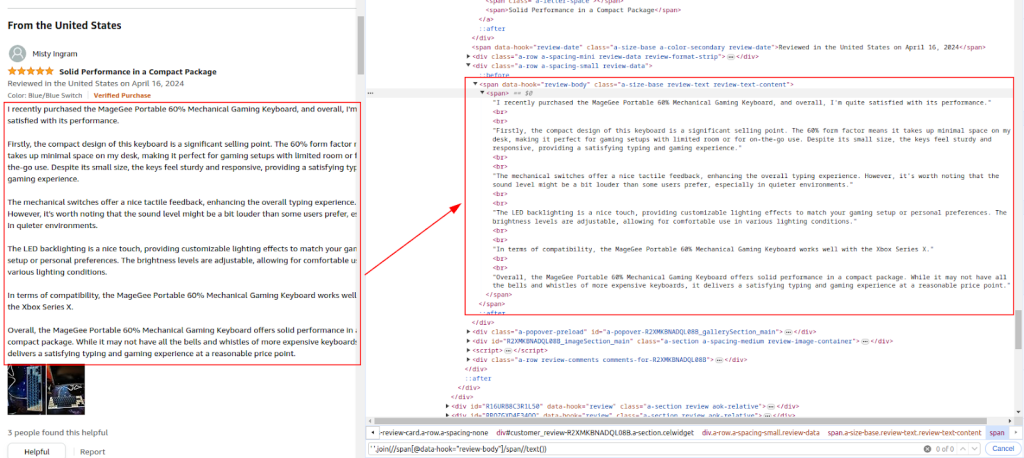

Inspect the HTML structure of the Amazon product reviews page to identify the elements to scrape: reviewer name, rating, and comment.

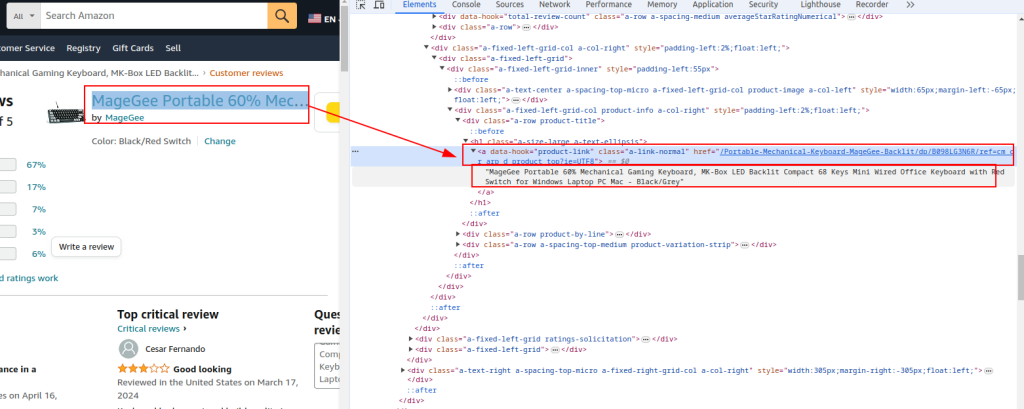

Product title and URL:

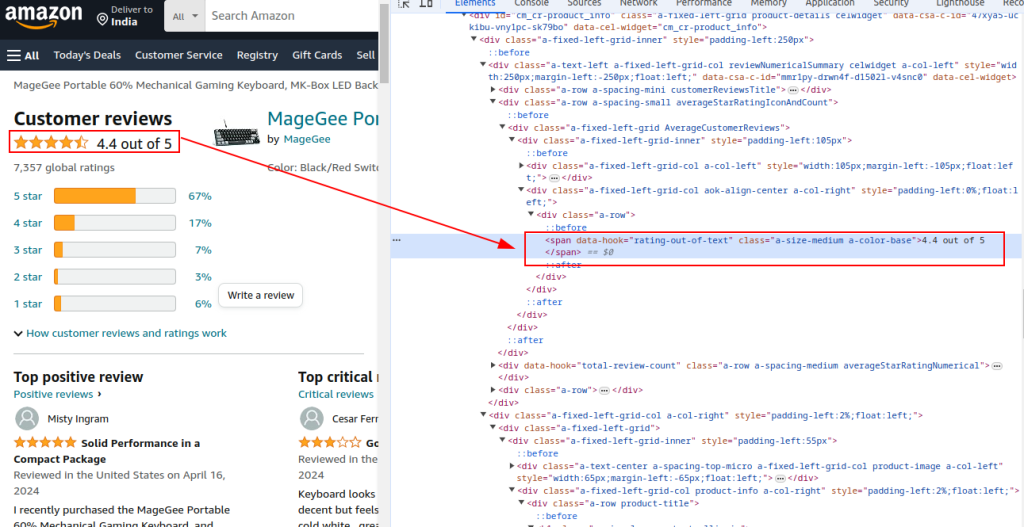

Total rating:

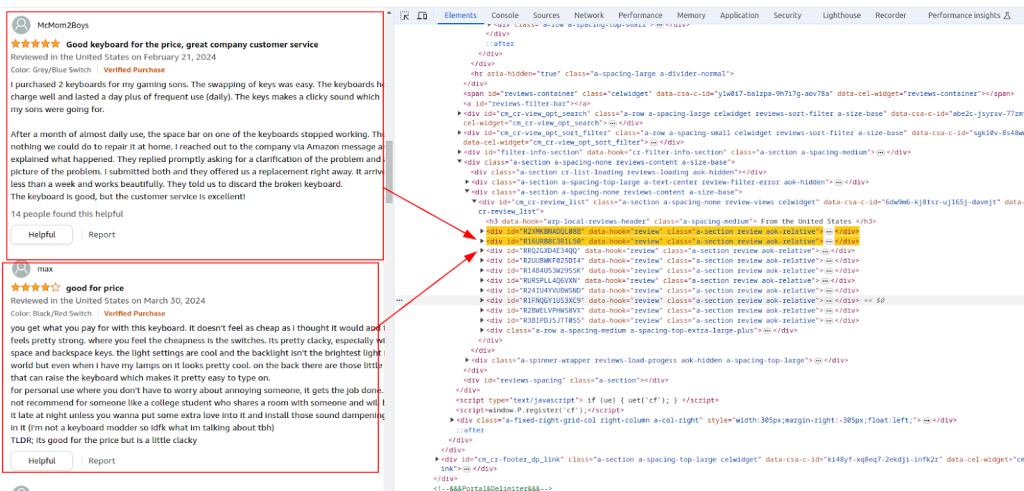

Review section:

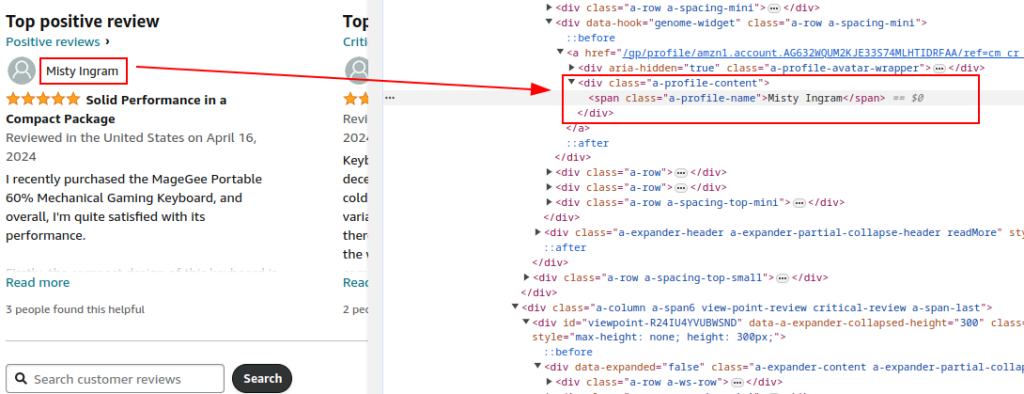

Author name:

Rating:

Comment:

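Use the Requests library to send an HTTP GET request to the Amazon product reviews page. Set headers that mimic legitimate browser behavior to avoid detection. Proxies and a complete set of request headers are essential to avoid being blocked by Amazon.

Proxies make it possible to rotate IP addresses and so avoid Amazon's IP bans and rate limits. This is essential for large-scale scraping, where it preserves anonymity and helps prevent detection. In this example, the proxy details are supplied by a proxy service.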
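
The IP rotation described above could be sketched roughly as follows. This is a minimal illustration, not the article's own method: `PROXY_POOL`, `proxy_rotation`, and the endpoint addresses are hypothetical placeholders, and the sketch assumes an HTTP proxy that tunnels HTTPS traffic, so adjust the scheme to whatever your provider documents.

```python
from itertools import cycle

# Hypothetical placeholder endpoints (documentation-only address range);
# substitute the host:port pairs supplied by your proxy provider.
PROXY_POOL = [
    "203.0.113.10:8080",
    "203.0.113.11:8080",
    "203.0.113.12:8080",
]


def proxy_rotation(pool):
    """Yield Requests-style proxy dicts, cycling through the pool forever."""
    for endpoint in cycle(pool):
        # Assumption: the same HTTP proxy handles both http and https targets.
        yield {
            "http": f"http://{endpoint}",
            "https": f"http://{endpoint}",
        }


rotation = proxy_rotation(PROXY_POOL)
first = next(rotation)   # proxy dict built from the first endpoint
second = next(rotation)  # proxy dict built from the second endpoint
```

Each call to `next(rotation)` returns a dictionary that can be passed as the `proxies` argument of `requests.get`, so consecutive requests leave from different addresses.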

Including a range of headers, such as Accept-Encoding, Accept-Language, Referer, Connection, and Upgrade-Insecure-Requests, mimics a legitimate browser request and reduces the chance of being flagged as a bot.

    import requests

    url = "https://www.amazon.com/Portable-Mechanical-Keyboard-MageGee-Backlit/product-reviews/B098LG3N6R/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews"

    # Example proxy details supplied by the proxy service
    proxy = {
        'http': 'http://your_proxy_ip:your_proxy_port',
        'https': 'https://your_proxy_ip:your_proxy_port'
    }

    headers = {
        'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
        'accept-language': 'en-US,en;q=0.9',
        'cache-control': 'no-cache',
        'dnt': '1',
        'pragma': 'no-cache',
        'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="91", "Chromium";v="91"',
        'sec-ch-ua-mobile': '?0',
        'sec-fetch-dest': 'document',
        'sec-fetch-mode': 'navigate',
        'sec-fetch-site': 'same-origin',
        'sec-fetch-user': '?1',
        'upgrade-insecure-requests': '1',
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
    }

    # Send an HTTP GET request to the URL with the headers and proxy
    try:
        response = requests.get(url, headers=headers, proxies=proxy, timeout=10)
        response.raise_for_status()  # Raise an exception for bad response status
    except requests.exceptions.RequestException as e:
        # Stop here: `response` is not usable if the request failed
        raise SystemExit(f"Error: {e}")
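
Because rate limits and temporary blocks can make a single request fail, one option is to retry a few times with an increasing delay. The helper below is a hedged sketch rather than part of the original script: `fetch_with_retries`, its parameters, and the doubling delay schedule are all assumptions chosen for illustration.

```python
import time


def fetch_with_retries(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds or the attempts run out.

    Waits base_delay, then 2 * base_delay, and so on between failed attempts.
    The last error is re-raised if every attempt fails.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch()
        except Exception as error:  # in real use, narrow this to RequestException
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise last_error
```

To use it with the request above, wrap `requests.get(...)` and `response.raise_for_status()` in a small function that returns the response, then pass that function as `fetch`.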

Parse the HTML content of the response with BeautifulSoup and extract the common product details: URL, title, and total rating.

    from bs4 import BeautifulSoup

    soup = BeautifulSoup(response.content, 'html.parser')

    # Extract the common product details
    product_url = soup.find('a', {'data-hook': 'product-link'}).get('href', '')
    product_title = soup.find('a', {'data-hook': 'product-link'}).get_text(strip=True)
    total_rating = soup.find('span', {'data-hook': 'rating-out-of-text'}).get_text(strip=True)
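
Note that `soup.find(...)` returns `None` when no matching element exists, for example when the page layout changes or a block page is served, and chaining `.get_text()` onto it then raises `AttributeError`. A small guard like the following can help; `text_or_default` and `attr_or_default` are hypothetical helper names introduced here for illustration, not part of BeautifulSoup.

```python
def text_or_default(element, default=""):
    """Return the stripped text of a BeautifulSoup element, or default if it is None."""
    if element is None:
        return default
    return element.get_text(strip=True)


def attr_or_default(element, name, default=""):
    """Return an attribute of a BeautifulSoup element, or default if the element is None."""
    if element is None:
        return default
    return element.get(name, default)
```

With these helpers, the title lookup could be written as `text_or_default(soup.find('a', {'data-hook': 'product-link'}))`, which yields an empty string instead of an exception when the link is missing.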

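Continue parsing the HTML content to extract the reviewer name, rating, and comment for each review, using the elements identified during the page inspection.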

    reviews = []

    review_elements = soup.find_all('div', {'data-hook': 'review'})

    for review in review_elements:
        author_name = review.find('span', class_='a-profile-name').get_text(strip=True)
        rating_given = review.find('i', class_='review-rating').get_text(strip=True)
        comment = review.find('span', class_='review-text').get_text(strip=True)

        reviews.append({
            'Product URL': product_url,
            'Product Title': product_title,
            'Total Rating': total_rating,
            'Author': author_name,
            'Rating': rating_given,
            'Comment': comment,
        })
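
The loop above stores each rating as raw text. If a numeric value is more convenient for later analysis, it can be parsed out with a regular expression. The sketch below assumes the rating text begins with a number, as in "4.0 out of 5 stars"; that format is an assumption about the page, and `parse_rating` is a hypothetical helper.

```python
import re


def parse_rating(text):
    """Extract the first number from text such as '4.0 out of 5 stars'.

    Returns a float, or None when the text contains no number.
    A decimal comma (as used in some locales) is also accepted.
    """
    match = re.search(r"\d+(?:[.,]\d+)?", text or "")
    if match is None:
        return None
    return float(match.group(0).replace(",", "."))
```

Calling `parse_rating(rating_given)` inside the loop would give a float that can be averaged or filtered directly.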

Use Python's built-in csv module to save the extracted data to a CSV file for further analysis.

    import csv

    # Define the CSV file path
    csv_file = 'amazon_reviews.csv'

    # Define the CSV field names
    fieldnames = ['Product URL', 'Product Title', 'Total Rating', 'Author', 'Rating', 'Comment']

    # Write the data to the CSV file
    with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.DictWriter(file, fieldnames=fieldnames)
        writer.writeheader()
        for review in reviews:
            writer.writerow(review)

    print(f"Data saved to {csv_file}")
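
To confirm that the file was written as expected, the rows can be read back with `csv.DictReader`. The following round-trip sketch uses a temporary directory and a made-up sample row; `load_reviews` and the sample values are illustrative assumptions, not data from Amazon.

```python
import csv
import os
import tempfile

FIELDNAMES = ['Product URL', 'Product Title', 'Total Rating', 'Author', 'Rating', 'Comment']


def load_reviews(path):
    """Read the CSV back into a list of dictionaries, one per review."""
    with open(path, mode='r', newline='', encoding='utf-8') as file:
        return list(csv.DictReader(file))


# Made-up sample row, used only to exercise the round trip
sample = [{
    'Product URL': '/example-product',
    'Product Title': 'Example Keyboard',
    'Total Rating': '4.5 out of 5',
    'Author': 'Example Reviewer',
    'Rating': '5.0 out of 5 stars',
    'Comment': 'Great keyboard, works well',
}]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'amazon_reviews.csv')
    with open(path, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.DictWriter(file, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(sample)
    loaded = load_reviews(path)

print(f"Rows read back: {len(loaded)}")
```

The comment in the sample row contains a comma on purpose: the csv module quotes such fields when writing, so they survive the round trip intact.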

Here is the complete code for scraping Amazon review data and saving it to a CSV file:

    import requests
    from bs4 import BeautifulSoup
    import csv
    import urllib3

    # Suppress the InsecureRequestWarning triggered by verify=False below
    urllib3.disable_warnings()

    # URL of the Amazon product reviews page
    url = "https://www.amazon.com/Portable-Mechanical-Keyboard-MageGee-Backlit/product-reviews/B098LG3N6R/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews"

    # IP-authorized proxy supplied by the proxy service
    path_proxy = 'your_proxy_ip:your_proxy_port'
    proxy = {
        'http': f'http://{path_proxy}',
        'https': f'https://{path_proxy}'
    }

    # Headers for the HTTP request
    headers = {
        'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
        'accept-language': 'en-US,en;q=0.9',
        'cache-control': 'no-cache',
        'dnt': '1',
        'pragma': 'no-cache',
        'sec-ch-ua': '"Not/A)Brand";v="99", "Google Chrome";v="91", "Chromium";v="91"',
        'sec-ch-ua-mobile': '?0',
        'sec-fetch-dest': 'document',
        'sec-fetch-mode': 'navigate',
        'sec-fetch-site': 'same-origin',
        'sec-fetch-user': '?1',
        'upgrade-insecure-requests': '1',
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
    }

    # Send an HTTP GET request to the URL with the headers and handle exceptions
    try:
        # verify=False skips TLS certificate verification
        response = requests.get(url, headers=headers, timeout=10, proxies=proxy, verify=False)
        response.raise_for_status()  # Raise an exception for bad response status
    except requests.exceptions.RequestException as e:
        # Stop here: `response` is not usable if the request failed
        raise SystemExit(f"Error: {e}")

    # Parse the HTML content with BeautifulSoup
    soup = BeautifulSoup(response.content, 'html.parser')

    # Extract the common product details
    product_url = soup.find('a', {'data-hook': 'product-link'}).get('href', '')  # Extract product URL
    product_title = soup.find('a', {'data-hook': 'product-link'}).get_text(strip=True)  # Extract product title
    total_rating = soup.find('span', {'data-hook': 'rating-out-of-text'}).get_text(strip=True)  # Extract total rating

    # Extract the individual reviews
    reviews = []
    review_elements = soup.find_all('div', {'data-hook': 'review'})

    for review in review_elements:
        author_name = review.find('span', class_='a-profile-name').get_text(strip=True)  # Extract author name
        rating_given = review.find('i', class_='review-rating').get_text(strip=True)  # Extract rating given
        comment = review.find('span', class_='review-text').get_text(strip=True)  # Extract review comment

        # Store each review in a dictionary
        reviews.append({
            'Product URL': product_url,
            'Product Title': product_title,
            'Total Rating': total_rating,
            'Author': author_name,
            'Rating': rating_given,
            'Comment': comment,
        })

    # Define the CSV file path
    csv_file = 'amazon_reviews.csv'

    # Define the CSV field names
    fieldnames = ['Product URL', 'Product Title', 'Total Rating', 'Author', 'Rating', 'Comment']

    # Write the data to the CSV file
    with open(csv_file, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.DictWriter(file, fieldnames=fieldnames)
        writer.writeheader()
        for review in reviews:
            writer.writerow(review)

    # Print a confirmation message
    print(f"Data saved to {csv_file}")

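In conclusion, choosing a reliable proxy server is a key step when writing a web scraping script. It helps the script get past blocks and anti-bot filters. Residential proxies and static ISP proxies are the most suitable options for web scraping: the former offer a high trust level and dynamic IP addresses, while the latter offer high speed and stable operation.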
